White Paper: Correcting the Record

Corrections as an antidote to disinformation

April 16, 2020

A new academic study proves that providing social media users who have seen false or misleading information with corrections from fact-checkers can decrease belief in disinformation1 [For simplicity, in this white paper we use “disinformation” and “false news” interchangeably to mean verifiably false or misleading information that has the potential to cause public harm, for example by undermining democracy or public health.] by an average of 50 percent, and by as much as 61 percent.

These significant, and empirically proven, reductions in the belief of false information provide urgent insight on how platforms and regulators can defend social media users against the growing threat of online disinformation and misinformation by “correcting the record.”

This research2 [Note: This white paper explains the findings of the research conducted by Dr. Wood and Dr. Porter. The academic study will be published independently by the authors.], commissioned by Avaaz, was conducted by Dr. Ethan Porter of George Washington University and Dr. Tom Wood of Ohio State University, who are leading experts in the study of corrections of false information.

In order to test the effectiveness of corrections, a hyper-realistic visual model of Facebook was designed to mimic the user experience on the platform. Then a representative sample of the American population, consisting of 2,000 anonymous participants chosen and surveyed independently by YouGov’s Academic, Political, & Public Affairs Research branch, was randomly shown up to 5 pieces of false news that were based on real, independently fact-checked examples of false or misleading content being shared on Facebook.

Through a randomized model, some of the users, after seeing the false news, were shown corrections. Some users saw only the false or misleading content, and some saw neither. Then the surveyed participants answered questions designed to test whether they believed the false news.

In addition to the conclusion that corrections can effectively counter American social media users’ belief in disinformation, cutting it in half, the study also found that:

The average drop in belief in false or misleading information after seeing a correction was 50% for Republicans and 47% for Democrats.

61.5% fewer people believed a false news story that claimed President Trump said Republicans are the dumbest group of voters.

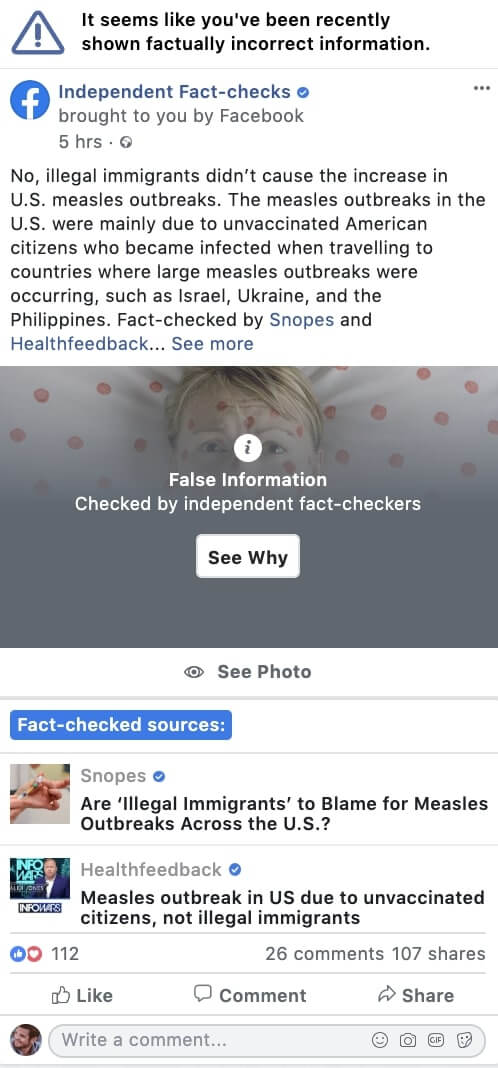

50.7% fewer people believed a false news story that claimed the measles outbreaks were caused by illegal immigrants after being exposed to a correction versus those who saw only the fake item.

50% fewer people believed a false news story that claimed Greta Thunberg is the highest paid activist in the world and had made millions from her activism.

42.4% fewer people believed a false news story that claimed that a photo of a female Somali soldier circulating on social media showed US Representative Ilhan Omar at a terrorist training camp in Somalia.

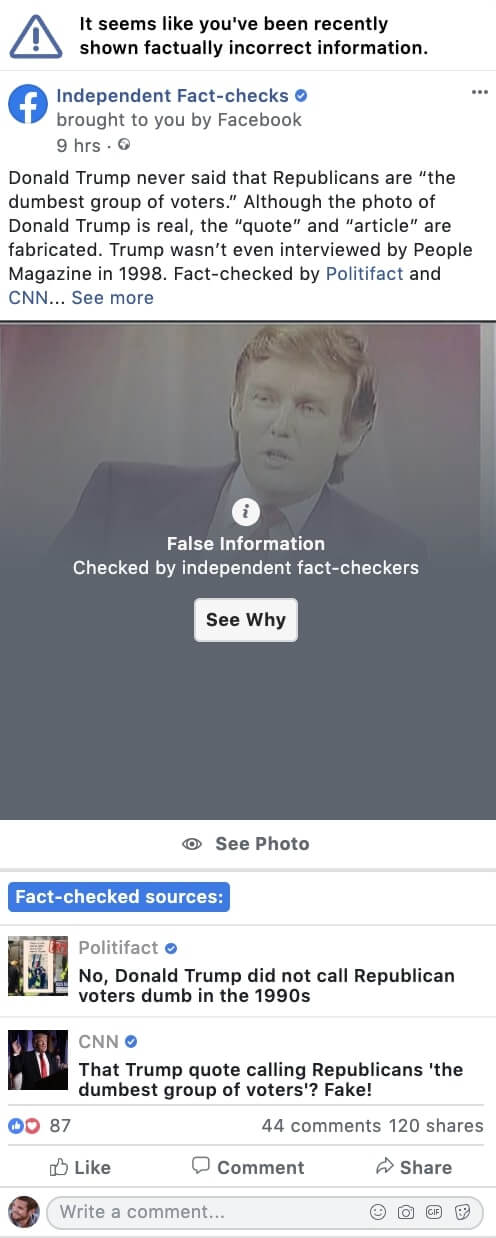

42% fewer people believed a false news story that claimed that installing 5G towers required a hazmat suit.

Out of 1,000 Americans who participated in the study, 68.4% agree or strongly agree that Facebook should inform users when they have been targeted with disinformation, and provide them with corrections from independent fact-checkers.

60.8% agree or strongly agree that social media companies should be required by law to protect their users from disinformation, with only 18.1% disagreeing.

Disinformation today threatens democracies and lives around the world. In the United States, reports indicate that American voters face an increasing threat of disinformation meant to sow mistrust and division ahead of the 2020 elections. Platform users across the world are being targeted with coronavirus health misinformation that is triggering panic and complicating the task of health workers seeking to contain the disease. Businesses and consumers are facing financial losses due to online defamation and disinformation campaigns that disrupt the marketplace.

So far, Facebook and other major social media platforms have adopted piecemeal fact-checking efforts, and are not yet providing retroactive corrections to all users who have seen or interacted with a piece of disinformation or misinformation content. As the following white paper details, this research indicates that platforms should vigorously ramp up these efforts. It shows that “Correct the Record,” which means alerting and providing independently fact-checked corrections to all users who have seen or interacted with verifiably false or misleading information, is one of the most effective defenses available against coordinated disinformation campaigns and lies going viral.

Platforms must show their seriousness by quickly deploying this solution. Governments must also act to regulate disinformation and ensure that “Correct the Record” is deployed in the face of misleadingly harmful content that can weaken democracies and put lives at risk.

The dramatic rise of disinformation has confounded policymakers who are caught between concern for our democracies and commitment to the freedom of expression. Policymakers may be paralyzed, but malicious actors continue to exploit disinformation to degrade democratic discourse. Avaaz has recently released reports documenting disinformation aimed at influencing the US 2020 election that has reached 158 million views. We saw coordinated action to sway elections in Europe. Our research on anti-vaccination misinformation has also shown that 57% of Brazilians who did not vaccinate their children cited a reason that is understood to be factually inaccurate, according to the World Health Organization.

Through investigation, advocacy and research, Avaaz has sought to not only highlight the scale of the threat, but to provide platform executives and policymakers around the world with effective solutions to the disinformation “infodemic” that is flooding millions of social media users.

Avaaz’s initial research, as well as our engagement with experts on this topic from around the world, emphasised to us that offering sophisticated corrections to users targeted with disinformation would significantly reduce the impact of disinformation, and help build resilience to this phenomenon. However, platforms initially refused to apply this solution at the scale needed, and advocated against it with policymakers, using the excuse that there is not enough research showing that corrections work in a social media environment. Moreover, platforms often refused to release their own internal data and research on this topic.

To test the now-debunked claim that retroactive corrections are not effective in a social media context, Avaaz designed a platform that closely resembled the Facebook interface, and began internally testing different designs for how corrections could be implemented with users from around the world. Our initial findings proved promising, and allowed us to hone a design for corrections that was highly impactful.

Nonetheless, to ensure the integrity and independence of these findings, and that they could be replicated in an anonymous environment, we shared our results with academic experts in the field, who were interested in implementing an independent study, focusing on the US population.

Leading researchers, Dr. Ethan Porter of George Washington University and Dr. Tom Wood of The Ohio State University, conducted the academic study.

To test “Correct the Record,” Avaaz built the first-ever, hyper-realistic visual model of Facebook, and the researchers then used YouGov’s gold-standard research platform to survey 2,000 anonymous participants who were shown up to 5 pieces of false news. The distribution of false or misleading posts was kept random: some users were given corrections, some saw only the false news, and some saw neither. Then they answered questions designed to test whether they believed the lies.

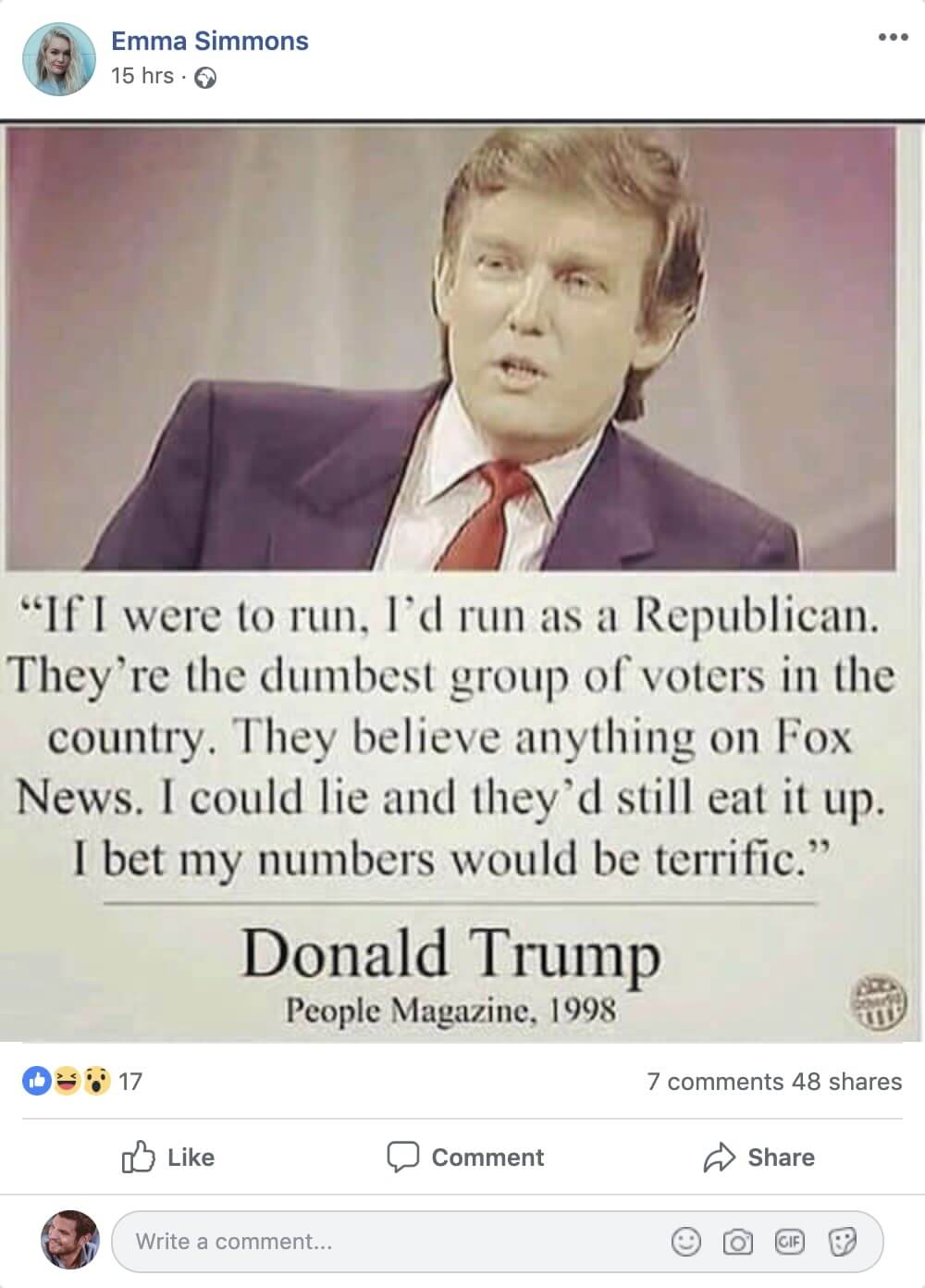

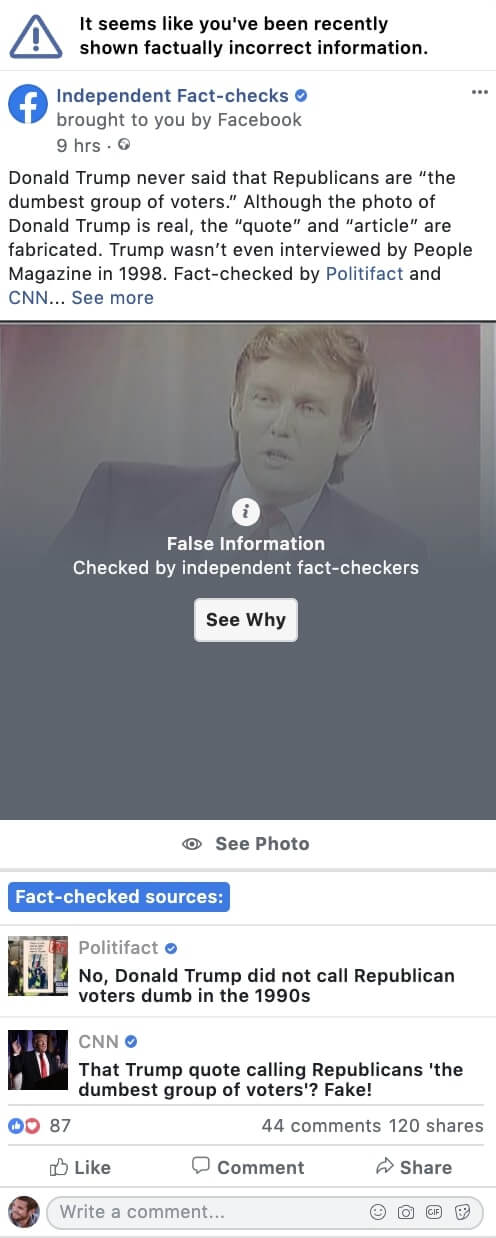

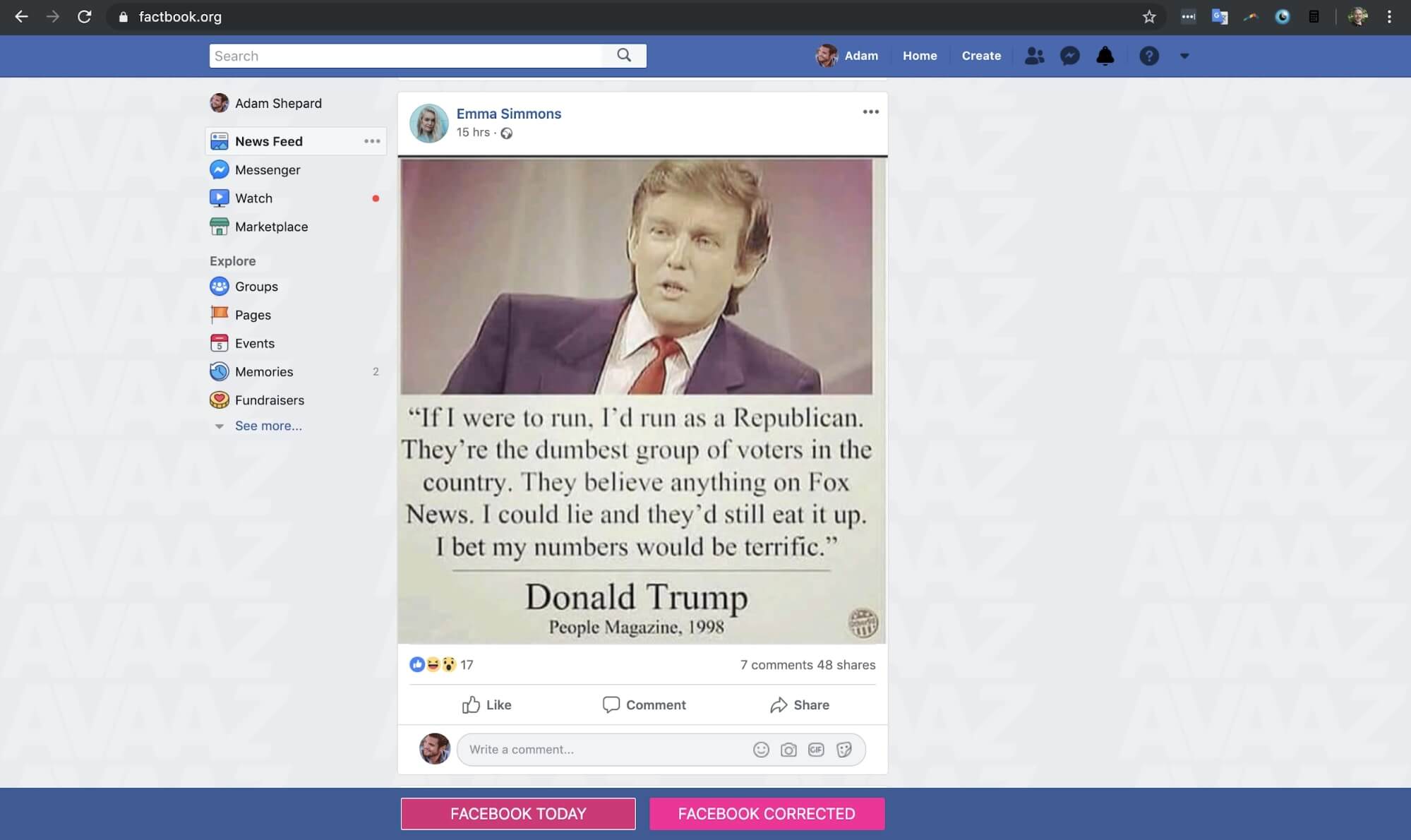

Figure 1: Hyper-realistic visual model of Facebook interface to test corrections (visit Factbook.org to learn more)

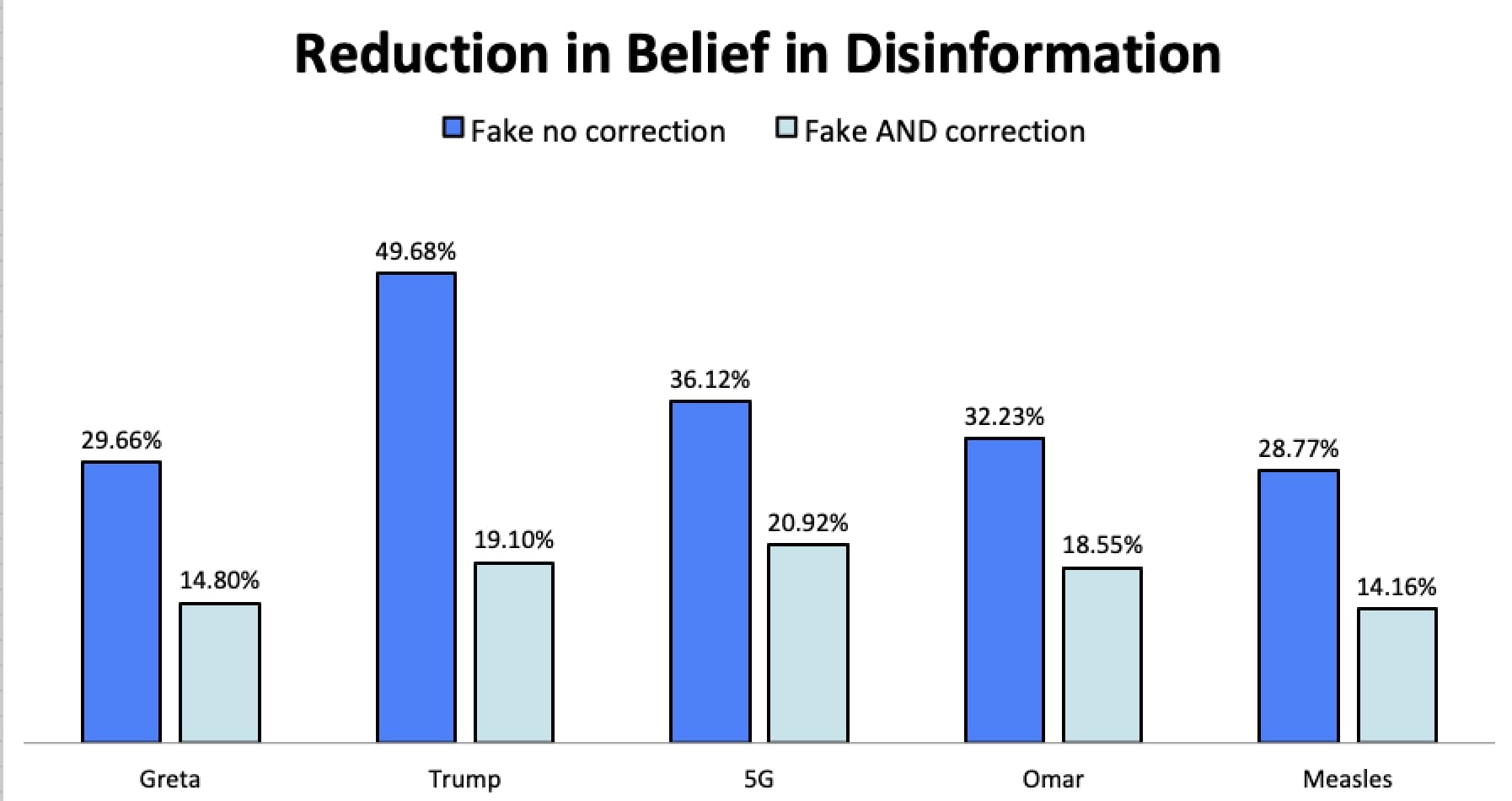

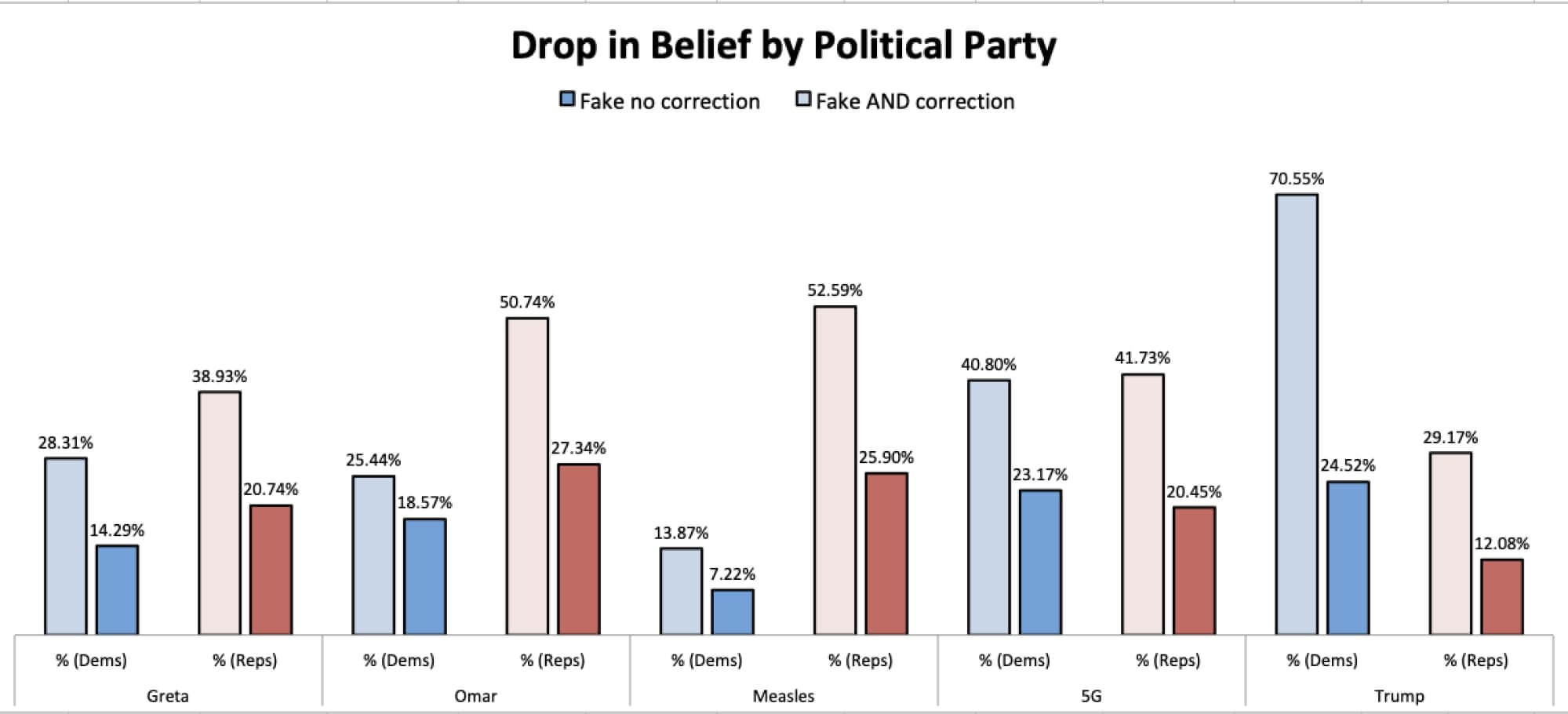

The results of the study are definitive: on average, “Correct the Record” reduces belief in disinformation by half (49.4%). Corrections also worked on partisan content -- the highest improvement, 61%, came on a fabricated quote from President Trump about “Republicans being dumb.” Significantly, corrections work across party affiliation and political ideology. The average drop in belief in disinformation after seeing a correction was 50% for Republicans, 47% for Democrats, 40% for liberals, and 57% for conservatives.

The final results further emphasise that social media platforms can cut the effects of and belief in disinformation in half by “Correcting the Record” -- showing every single user exposed to viral disinformation corrections from independent fact-checkers.

Given the urgency of upcoming elections in the United States and around the world, and a number of health crises that can cause mass harm, “Correct the Record” is currently one of the strongest defenses we have against the coordinated disinformation campaigns threatening our democracies.

And this solution is popular -- people across Germany, France, Spain, and Italy (87%); the UK (74%); and the USA (68%) support it in large numbers. And most of them believe we need regulation to protect our societies from online harms like disinformation, fake news and data misuse: 76% or more of citizens across Germany, France, Spain and Italy; 81% in the UK; and 61% in the USA.

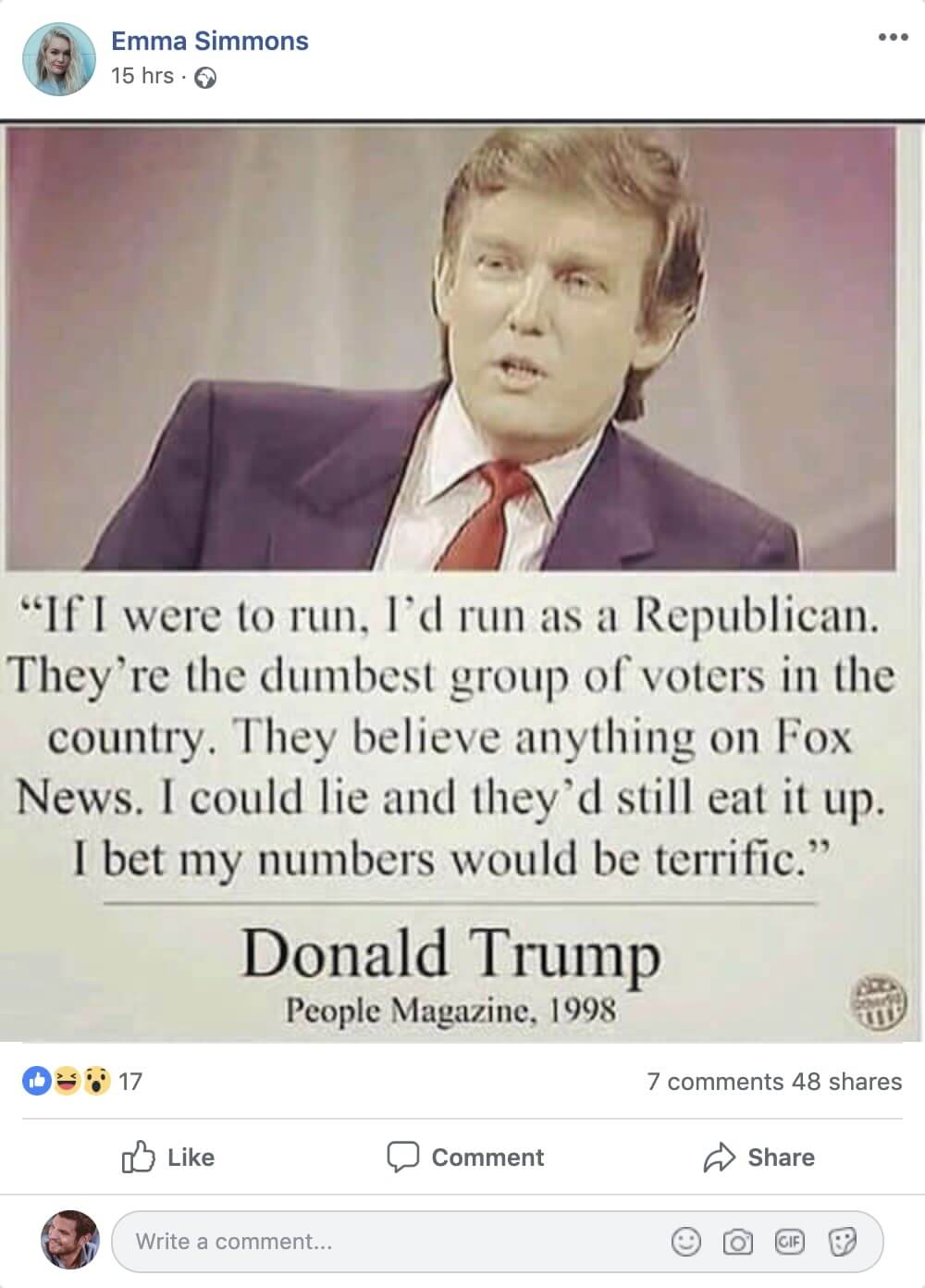

“Correct the Record” requires platforms to distribute corrections from independent fact-checkers to every single person exposed to disinformation.

For example:

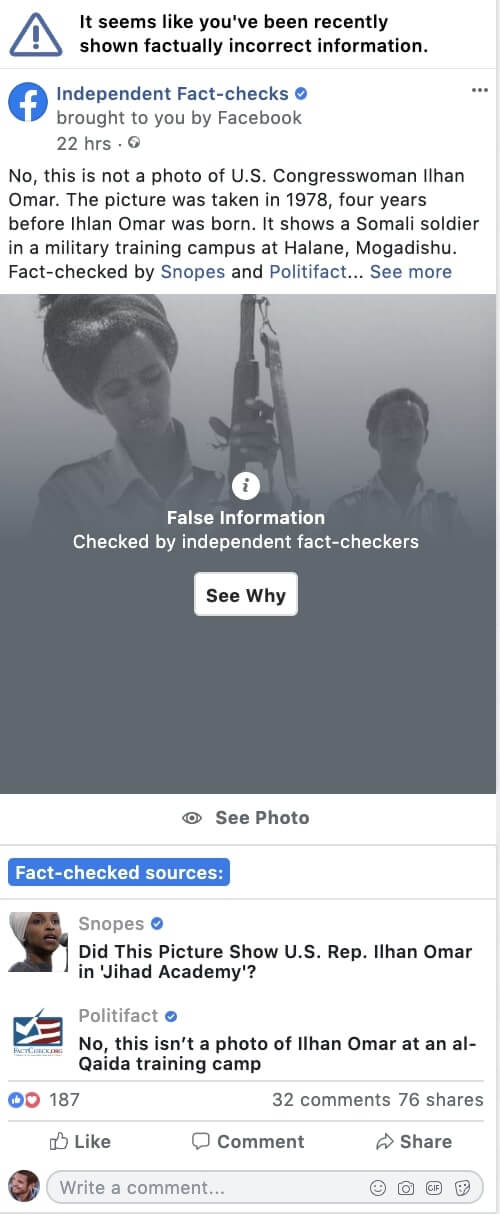

Everyone who read, shared, commented on, liked, or otherwise interacted with these posts...

...would also see something like this when independent fact-checkers confirm the content is false:

This study sought to analyze whether showing users, who had previously seen and read false or misleading content, a correction (before the false content had been labeled) would significantly decrease belief in the false news.

First, five different pieces of false news that were actual examples taken from Facebook and independently fact-checked by third-party fact-checkers were chosen that reflect a number of disinformation themes spreading on social media.

Secondly, corrections were designed for each piece of false or misleading content, based on the fact-checks provided by independent fact-checkers.

The five items were:

A doctored magazine cover calling Greta Thunberg the highest-paid activist in the world;

A meme falsely attributing to Donald Trump a quote that “Republicans are the dumbest group of voters” and that he could get them to vote for him;

An old photo of a female soldier in Somalia’s regular military, with a caption implying it was Representative Ilhan Omar in an Al Qaeda training camp;

A meme implying that installing 5G towers is so dangerous that workers need to wear hazmat suits; and,

A false news story claiming that illegal immigrants were responsible for measles outbreaks in the US.

Third, Avaaz designed Factbook.org, a website that closely mimics user experience on Facebook, and filled the newsfeed with a mixture of false and misleading content as well as normal non-disinformation stories, such as pictures of dogs and cats, or other real news stories. The filler content Avaaz put in the newsfeed was also taken from real Facebook posts, and slightly edited to ensure the anonymity of the posters.

The fourth step was ensuring that the academics involved could test the impact of corrections on an independent sample of American citizens. Hence, YouGov’s academic surveying tools were used (details of sampling methods are provided in the methodology section below).

If corrections are implemented for all users who view disinformation, the majority of users will see corrections some time after they were exposed to the original false or misleading content, as this content spreads, and sometimes goes viral before platforms flag it and fact-checkers fact-check it. To account for that we split the model into two timelines. In the first, participants see false news (plus some filler items such as food or travel images). In the second, participants see corrections as well as a few more filler items. Finally, we made sure the test was fully randomized. Specifically:

On the first timeline, the participants saw between zero and five fake items, plus three filler items. The number of fake items; which ones, if any, were shown; and the order in which they were shown were all completely random.

On the second timeline, the participants saw corrections corresponding to the fake items they saw on the first timeline, plus three different filler items. The number of corrections was again randomly generated, from zero up to the total number of fake items seen on the first timeline.

The design therefore produced three randomly-selected groups for each survey question:

Participants who saw neither the fake item nor the correction.

Participants who saw the fake item but not the correction.

Participants who saw both the fake item and the correction.

In summary, 2,000 participants were randomly shown no item at all, only the false news item (but not a correction), or saw both the false news and a correction. All users were then asked to answer survey questions on whether the content they read was true or false.
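The two-timeline randomization described above can be illustrated with a minimal sketch. It is not the researchers' actual assignment code: the item labels, the function name `assign_participant`, the uniform choice of how many items to show, and the uniform choice of which seen items receive a correction are all assumptions made for illustration.

```python
import random
from collections import Counter

# Short labels for the five false news items (labels are hypothetical).
FAKE_ITEMS = ["greta", "trump", "omar", "5g", "measles"]

def assign_participant(rng):
    """Return the experimental group of one participant for each item."""
    # Timeline 1: a random number (0-5) of fake items, in random order.
    # Assumption: the number is drawn uniformly.
    n_fake = rng.randint(0, len(FAKE_ITEMS))
    seen_fakes = rng.sample(FAKE_ITEMS, n_fake)

    # Timeline 2: a random number of corrections, from 0 up to n_fake.
    # Assumption: which seen items get corrected is a uniform random subset.
    n_corrections = rng.randint(0, n_fake)
    corrected = set(rng.sample(seen_fakes, n_corrections))

    groups = {}
    for item in FAKE_ITEMS:
        if item not in seen_fakes:
            groups[item] = "neither"
        elif item in corrected:
            groups[item] = "fake_and_correction"
        else:
            groups[item] = "fake_only"
    return groups

# Simulate 2,000 participants and count group sizes for one item.
rng = random.Random(2020)
assignments = [assign_participant(rng) for _ in range(2000)]
print(Counter(a["measles"] for a in assignments))
```

Because a correction is only ever shown for an item the participant saw on the first timeline, every participant falls into exactly one of the three groups for each survey question.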

The results of the study are stark, and difficult to dispute: corrections radically reduce belief in disinformation by an average of nearly half (49.4%) and as much as 61%. The subset group that saw the false news and correction performed significantly better than the group that only saw the disinformation. This is true even for polarising political content about figures like President Trump or Representative Ilhan Omar:

Strikingly, the effects remained strong across both party affiliation and ideology.

Measles: 50.7% fewer people believed that measles outbreaks were caused by illegal immigrants after being exposed to a correction versus those who saw only the fake item.

Greta: 50% fewer people believed that Greta Thunberg is the highest paid activist in the world and had made millions from her activism.

Omar: 42.4% fewer people believed that a photo circulating on social media showed US Rep. Ilhan Omar at a terrorist training camp in Somalia.

5G: 42% fewer people believed that installing 5G towers required a hazmat suit.

Trump: 61.5% fewer people believed that Trump said Republicans are the dumbest group of voters.

Overall, “Correct the Record” produced a 49.4% reduction in belief in disinformation.
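One natural reading of "X% fewer people believed" is a relative reduction: the share of believers in the group that saw the false news and the correction, compared with the share in the group that saw only the false news. The white paper does not spell out the formula, so the sketch below is an assumption, and the input shares are invented purely to show the arithmetic; they are not results from the study.

```python
def relative_reduction(belief_fake_only, belief_with_correction):
    """Relative drop in the share of believers after a correction.

    Both arguments are shares between 0 and 1. This formula is an
    assumed reading of the reported figures, not the authors' stated method.
    """
    if not 0 < belief_fake_only <= 1:
        raise ValueError("belief_fake_only must be in (0, 1]")
    if not 0 <= belief_with_correction <= 1:
        raise ValueError("belief_with_correction must be in [0, 1]")
    return (belief_fake_only - belief_with_correction) / belief_fake_only

# Hypothetical shares: 40% believe after seeing only the fake item,
# 20% believe after also seeing the correction.
print(f"{relative_reduction(0.40, 0.20):.1%}")  # 50.0%
```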

The average drop in belief in false news after seeing a correction:

Democrats: 47%

Republicans: 50%

Liberals: 40%

Conservatives: 57%

More detail on each piece of false news, and the breakdown of responses by party and ideology, can be found at the end of the report, in the section “Results -- Extended Detail”.

In addition to the main survey, we asked half the participants (1,000 people) two survey questions, and the answers show that Americans overwhelmingly support both “Correct the Record” and legislation to require platforms to protect their users from disinformation:

68.4% agree or strongly agree that Facebook should inform users when they have been targeted with disinformation and misleading information, and provide those exposed to that content with independently fact-checked corrections;

60.8% agree or strongly agree that social media companies should be required by law to protect their users from disinformation.

After significant pressure from Avaaz and policymakers, Facebook announced in October 2019 that it would insert clearer warning labels on posts verified by its fact-checking partners to be disinformation -- but would only show the labels on false or misleading content to users who see or share that post after the content has been debunked by fact-checkers.

However, the steps taken by the platforms, mentioned above, are far from sufficient and only impact a very small percentage of the users who see the disinformation content. For example, the current model of corrections applied by Facebook and Instagram ignores the majority of users who see the malicious disinformation content before a correction is applied. Disinformation content can go viral fast, often due to coordinated inauthentic behavior3 ["Coordinated inauthentic behavior" is defined by Facebook as "when groups of people or pages work together to mislead others about who they are or what they're doing." Nathaniel Gleicher, Facebook Head of Cybersecurity Policy], whereas it could take independent fact-checkers between two days and two weeks from when the disinformation content is flagged to the moment the correction is issued.

Consequently, the current fact-checking model designed by Facebook (and a similar model now being tested by Twitter) will only reach a small sliver of the users targeted with disinformation. Thus, efforts by Facebook, Instagram, and Twitter to belatedly label misinformation when it is fact-checked, but not notify users who interacted with the content previously that it has been flagged, are commendable but do not go far enough. The results of this study show that alerting and providing corrections to all users who have seen or interacted with disinformation would be much more impactful.

Lastly, while a 50 percent reduction in belief in disinformation is significant, it should be seen as a baseline for further optimizations by the platforms. Simply deploying “Correct the Record” officially could increase its effectiveness. During UX testing, over half of the respondents indicated that they did not believe the “corrections” in Avaaz’s Factbook design because they had never heard of Facebook officially implementing corrections - and thus the corrections in our design appeared inauthentic to them. Users in our testing further emphasised that, had they been aware that independent fact-checks were officially being rolled out, they would trust them more.

Moreover, platforms have a significant amount of resources that would allow them to continue to improve on correction designs and notification systems that are more authoritative and easier to digest and remember.

Avaaz’s study continues a long line of academic research showing that effective corrections can reduce and even eliminate the effects of disinformation. In one study, for instance, researchers at MIT concluded that “[t]here was a large bipartisan shift in belief post-explanation [...] all members of the political spectrum are capable of substantial belief change when sound non-partisan explanations are presented.”

Another study at the University of Michigan found that “[c]orrections to misinformation were effective, even in the face of emotional experiences and partisan motivations.” Yet a third group from the City University of London noted that “reliance on misinformation was typically halved by corrections, compared to an uncorrected control group…” and that participants had a “good overall recall of the correction message.”

We believe Facebook knows that “Correct the Record” works -- they’ve deployed corrections to defend their own brand. According to reporting by Bloomberg, which Facebook has not denied, the company tracked false and misleading content about itself, crafted corrections, and proactively pushed them out to users. To combat toxic memes such as the “copy and paste” hoax, where users are urged to paste a blurb into their status denying Facebook rights to their content, “the social network took active steps to snuff them out. [Facebook] [s]taff prepared messages debunking assertions about Facebook, then ran them in front of users who shared the content, according to documents viewed by Bloomberg News and four people familiar with the matter.” In the US, Facebook even ran a promoted post debunking the “copy and paste” meme.

Researchers are confirming that “Correct the Record” works. Social media platforms are aware that corrections work, often using them to protect their brand. Why, then, has Facebook, in particular, warned of a backfire effect?4 [Facebook shared in a December 2017 announcement the following: "Academic research on correcting misinformation has shown that putting a strong image, like a red flag, next to an article may actually entrench deeply held beliefs – the opposite effect to what we intended."]

This argument goes back to a study published in 2010, in which Brendan Nyhan (University of Michigan) and Jason Reifler (Georgia State University) found that a group of college students shown corrections debunking false news stories which confirmed the presence of weapons of mass destruction (WMDs) in Iraq came to believe even more strongly that WMDs were in fact found (they weren’t). This study was not conducted in a social media context, or using a platform that imitated newsfeeds. Though many have tried, using many different approaches, issues, and settings, our investigations into the topic indicate that no researcher has been able to duplicate Nyhan and Reifler’s findings.

Among the many publications of Avaaz’s collaborators Dr. Ethan Porter and Dr. Tom Wood is a seminal paper where they created 52 scenarios specifically designed to elicit backfire -- including Nyhan’s and Reifler’s about WMDs in Iraq. Studying 10,000 people across those 52 scenarios produced not one single instance of backfire. In 2019, Nyhan, Reifler, Porter, and Wood, all together, co-authored a paper that should finally put the backfire effect to rest.

Platforms must work with fact-checkers to “Correct the Record” by distributing independent, third-party corrections to every single individual who saw the false information in the first place. Newspapers publish corrections on their own pages, television stations on their own airwaves; platforms should do the same on their own channels.

This study shows that “Correct the Record” would tackle disinformation while preserving freedom of expression, as “Correct the Record” only adds factually corrected information, and does not require the platforms to delete any content.

1- Define: The obligation to correct the record would be triggered where:

Independent fact checkers verify that content is false or misleading;

A significant number of people - e.g., 10,000 - viewed the content.

2 - Detect: Platforms must:

Proactively use technology such as AI to detect potential disinformation with significant reach that could be flagged for fact-checkers;

Deploy an accessible and prominent mechanism for users to report disinformation;

Provide independent fact checkers with access to content that has reached a wide audience - e.g., 10,000 or more people.

3 - Verify: Platforms must work with independent, third-party verified fact-checkers to robustly determine whether reported content is disinformation.

4 - Alert: Each user exposed to verified disinformation should be notified using the platform’s most visible and effective notification standard.

5 - Correct: Each user exposed to disinformation should receive a correction from the fact-checkers that is of at least equal prominence to the original content, and that follows best practices.
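The "Define", "Alert" and "Correct" steps above can be read as a simple rule: once independent fact-checkers have verified content as false or misleading and it has reached a significant audience, every exposed user is notified. The sketch below illustrates that rule only. The `Post` class, its field names, and the helper functions are hypothetical, and the 10,000 threshold is the example figure from the proposal rather than a fixed requirement.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

REACH_THRESHOLD = 10_000  # example figure from the proposal, not a fixed rule

@dataclass
class Post:
    """Hypothetical record of a post and who was exposed to it."""
    post_id: str
    exposed_users: Set[str] = field(default_factory=set)
    # Verdict from independent fact-checkers: "false", "misleading", or None.
    fact_check_verdict: Optional[str] = None

def must_correct(post, threshold=REACH_THRESHOLD):
    """Step 1 (Define): verified false or misleading AND significant reach."""
    verified = post.fact_check_verdict in {"false", "misleading"}
    return verified and len(post.exposed_users) >= threshold

def users_to_notify(post, threshold=REACH_THRESHOLD):
    """Steps 4-5 (Alert, Correct): everyone exposed, not only later viewers."""
    return set(post.exposed_users) if must_correct(post, threshold) else set()

# Usage with a tiny threshold so the example stays small.
post = Post("p1", exposed_users={"ana", "ben", "chen"}, fact_check_verdict="false")
print(sorted(users_to_notify(post, threshold=3)))
```

The key design point is that the notification set is built from everyone already exposed, which is what distinguishes retroactive corrections from labels shown only to users who see the post after it has been fact-checked.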

Online platforms should also, within a reasonable time after receiving a report from an independent fact-checker regarding disinformation with significant reach, take steps to curtail its further spread such as downgrading its reach in users’ news feeds.

Furthermore, policymakers must act to regulate disinformation and require “Correct the Record” for malicious disinformation content that may cause harm.

The vast majority of people want “Correct the Record”. In Germany, France, Spain, and Italy, 87% of people tell us they would approve of “Correct the Record”. 74% of UK citizens are in favor of the idea, and 68% of Americans.

The world is ready to regulate disinformation. To protect their people, governments have brought every major new industry in modern history under regulation -- even when it seemed impossible. Railroads. Radio. Telephones. Television. Automobiles. Airlines. And in every case the industry has not just survived but thrived. For the sake of our people, our democracies, and our planet, the time has come again for our leaders to step up and govern the seemingly ungovernable.

And it’s not only Avaaz and our members who believe that -- 76% or more of citizens across Germany, France, Spain and Italy think online platforms should be regulated. 81% of UK citizens agree, as do 61% of Americans, with only 18% saying they are against regulations.

The European Union has sounded the alarm about disinformation. And the rest of the world is starting to catch up. The U.N. has called for action to counter the global threat of disinformation. The U.S. Federal Election Commission recently convened a high-level policy discussion on protecting U.S. elections. Parliamentarians from all over the world have joined forces to develop new defenses for their democracies. Brazil’s Congress has launched a massive investigation into disinformation in the 2018 election. France, the UK, and Germany have passed or are developing laws that regulate social media.

As the evidence mounts, and with this study providing clear proof that corrections can work, we urge regulators to design legislation that has “Correct the Record” baked into it.

The Model: Factbook.org

Question: If you’re not Facebook, how do you figure out how well corrections will work in the Facebook environment?

Answer: You build your own Facebook.

To prepare for the formal study, Avaaz spent months building, testing, and refining a model that replicates the look and feel of Facebook. We invested hundreds of hours of developer time, and tested the results with thousands of members in Europe and the US. We conducted User Experience (UX) focus groups in Berlin and New York, with nationals of several countries, to identify and iron out points of friction in the design. The aim was to build a tool that would offer participants in the formal study an experience that was as close to the real Facebook as it’s possible to get in a research setting. A demo of the result, now populated with the pieces of false news and corrections selected for the study, can be found at Factbook.org.5 [All the profiles on our model were constructed using made-up names and pictures from stock photo collections.]

Example of the Factbook interface:

The Survey: Design

To design the formal study, Avaaz teamed up with two of the leading researchers in the field of disinformation and corrections: Dr. Ethan Porter from George Washington University and Dr. Tom Wood from The Ohio State University.

First, we chose 5 actual pieces of false news from Facebook, content that had been debunked by at least two independent fact checkers. Then we designed a correction for each piece of false news. The final choices were:

1. Trump thinks Republicans are “the dumbest group of voters in the country.”

2. Workers installing 5G towers have to wear hazmat suits.

3. A photo shows Ilhan Omar in a terrorist training camp in Somalia.

4. Greta Thunberg has made millions from her climate activism.

5. Measles outbreaks in the US were caused by illegal immigration.

If corrections are retroactively implemented, most users in the real world will see corrections some time after they were exposed to the original disinformation. To account for that we split the model into two timelines. In the first, participants see false news (plus some filler items such as food or travel images). In the second, participants see corrections as well as a few more filler items. Finally, we made sure the test was fully randomized. Specifically:

On the first timeline, the participants saw between zero and five fake items, plus three random filler items. The number of fake items; which ones, if any, were shown; and the order in which they were shown were all completely random.

On the second timeline, the participants saw corrections corresponding to the fake items they saw on the first timeline, plus three different filler items. The number of corrections was again randomly generated, from zero up to the total number of fake items seen on the first timeline.

The design therefore produced three randomly-selected groups for each survey question:

Participants who saw neither the fake item nor the correction.

Participants who saw the fake item but not the correction.

Participants who saw both the fake item and the correction.

At the end, each participant -- even ones who had not seen the corresponding piece of false news -- was asked to choose whether a statement capturing the false or misleading information was a) true; b) probably true; c) probably false; d) false; or, e) not sure:

Because 5G towers emit harmful radiation, workers installing the poles need to wear hazmat suits.

Greta Thunberg is the highest paid activist in the world.

Congresswoman Ilhan Omar was photographed at an Al-Qaeda training camp in Somalia.

Illegal immigrants caused the recent measles outbreaks across the U.S.

Donald Trump called Republicans “the dumbest group of voters” in a 1998 interview with People Magazine.

The participants were recruited and the study was conducted on YouGov’s gold-standard academic research platform. YouGov describes its method for sampling and weighting the responses as follows:

The respondents were matched to a sampling frame on gender, age, race, and education. The frame was constructed by stratified sampling from the full 2016 American Community Survey (ACS) 1-year sample with selection within strata by weighted sampling with replacements (using the person weights on the public use file).

The matched cases were weighted to the sampling frame using propensity scores. The matched cases and the frame were combined and a logistic regression was estimated for inclusion in the frame. The propensity score function included age, gender, race/ethnicity, years of education, and region. The propensity scores were grouped into deciles of the estimated propensity score in the frame and post-stratified according to these deciles.

The weights were then post-stratified on 2016 Presidential vote choice, and a four-way stratification of gender, age (4 categories), race (4 categories), and education (4 categories), to produce the final weight.
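To make the decile step in the description above more concrete, the following simplified sketch post-stratifies a matched sample on deciles of a propensity score. It is not YouGov's implementation: the logistic regression and the later post-stratification on vote choice and demographics are omitted, the propensity scores are taken as given inputs, and the function name is hypothetical.

```python
import bisect
import statistics
from collections import Counter

def decile_poststrat_weights(frame_scores, sample_scores):
    """Weight sample cases so each frame decile has its frame share.

    Assumption: scores are already-estimated propensity scores; the
    regression that would produce them is outside this sketch.
    """
    # Nine cut points that split the frame's scores into ten bins.
    cuts = statistics.quantiles(frame_scores, n=10)

    def decile(score):
        return bisect.bisect_right(cuts, score)  # bin index 0..9

    frame_counts = Counter(decile(s) for s in frame_scores)
    sample_bins = [decile(s) for s in sample_scores]
    sample_counts = Counter(sample_bins)

    n_frame, n_sample = len(frame_scores), len(sample_scores)
    # Weight = (share of the bin in the frame) / (share in the sample).
    return [
        (frame_counts[b] / n_frame) / (sample_counts[b] / n_sample)
        for b in sample_bins
    ]

# Usage with made-up scores: the sample over-represents low scores.
frame = [i / 1000 for i in range(1000)]
sample = [(i / 200) ** 2 for i in range(200)]
weights = decile_poststrat_weights(frame, sample)
print(round(min(weights), 2), round(max(weights), 2))
```

Cases from over-represented deciles receive weights below 1 and cases from under-represented deciles receive weights above 1, so the weighted sample matches the frame's distribution across deciles.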

Isn’t CTR a challenge to freedom of expression? How is CTR different than the "bad bills" (e.g. Singapore) out there?

CTR is a win-win for democracy and freedom of expression: content is not removed - it is simply corrected. It adds the truth, but leaves the lies alone. Protection mechanisms are also necessary to safeguard free expression.

Centrally, our proposal ensures that an independent fact-checking method is in place. We do not believe that, as pushed by the government of Singapore for example, governments should be the arbiters of what is true and what is false. Instead, independent, third-party fact-checkers, vetted based on robust standards such as those used by the International Fact-Checking Network, should take on this responsibility. Fact-checkers should then be peer-reviewed at relevant intervals to ensure they have maintained their independence. Users should also have an opportunity to request a review of fact-checked content if they feel the fact-checkers got it wrong.

CTR also differs from the “authoritarian bills” in that we do not propose any criminal liability. Our principles treat social media users as potential targets and victims of disinformation, and we intend to protect them.

Does CTR make governments / platforms the arbiters of the truth?

Neither governments nor the platforms would be involved in deciding if content is disinformation. That’s why independent, third-party fact checkers are such an important part of CTR.

What about satire, humor, and legitimate political opinions?

Following the European Code of Practice, CTR would exempt satire, humor, and opinion. No content will be deleted. Only viral and/or deliberately false and misleading content that can cause societal harm, such as influencing elections through voter suppression, spreading hate, maliciously sowing public discord through coordinated inauthentic behavior, or pre-empting a health crisis, will be corrected.

Most ‘fake’ content isn’t false, but rather exaggerating, suggestive, generalizing, misleading, or manipulative. How do corrections work in those cases?

Corrections should seek to undo the damage done, so they should be proportional to the harm and targeted at exactly what’s wrong with the content. Professional fact-checkers are experts at defining exactly what’s wrong with a particular piece of content. Is it outright false? Does it use the truth but twist it out of context? Does it use a fake image, or a real image that doesn’t relate to the content? Is it aimed at perpetuating belief in already-debunked claims? These standards already exist and are constantly being refined by fact-checkers the world over. We call for sophisticated alerts and fact-checks that not only tell users a piece of content is false, but also help them understand why it is false.

Disinformation is trending downwards.

Platforms that make this claim have provided no data to back it up. Transparency is severely lacking. Avaaz research shows that democratic elections are still drowning in disinformation (e.g. see our reports “Far Right Networks of Deception” and “US2020: Another Facebook Disinformation Election?”). Moreover, the scale and reach of social media platforms are so significant that even disinformation targeted at tens of thousands of users can have a significant impact on election results. More importantly, we believe that CTR would disincentivize disinformation actors and help ensure that the trend of disinformation moves in a downward trajectory.

The platforms are already taking action against disinformation. Why is CTR so important?

CTR is the only solution that counteracts the effects of disinformation that has already gotten out into the world. Catching disinformation before it’s circulated is ideal, but no such system will ever be perfect.

Prevention is important, but we need treatment too. Education and resilience are crucial, but they take time we don’t have. Avaaz documented networks of far-right deception that had reached 750,000,000 views in the three months ahead of the European elections, and our research focused on only six countries, indicating that our findings were only the tip of the iceberg. The millions or tens of millions of people who saw this content still don’t know, today, that they were misled. Only CTR solves that problem.

Platforms are already showing corrections and/or flags. Aren’t they doing CTR already?

The fact that Facebook and others are implementing limited versions of CTR in some markets and/or on some specific topics (such as anti-vaxx disinformation) shows that CTR is technically feasible and effective. However, in order to fully address the threat of disinformation, these corrections need to be shown to everyone who has been exposed to false information, not just someone who is, for example, commenting or sharing after the content has been fact checked. This is not currently happening, and as a result the large majority of people who see false news never see a correction.

There are not enough fact checkers to realise CTR.

Avaaz has spoken to some of the most highly-cited and prominent fact checking organizations in the world, and they assure us that the sector could scale rapidly. Once CTR creates demand, the fact-checking sector will grow. Big donors and individual philanthropists are likely ready to support these efforts at scale. Moreover, as fact-checking sources are distributed via CTR, their pages will receive more visitors and publicity that can ensure increased revenue.

Furthermore, other crowdsourced tools for fact-checking are currently being tested by Wikipedia and Twitter, and could offer community-based solutions.

Additionally, there is a wide range of misinformation topics, such as anti-vaccination misinformation, climate denial misinformation, and detected Russian disinformation spread by the Internet Research Agency (IRA), among others, where one or two simple corrections can be used millions of times on millions of posts. Moreover, during urgent electoral moments, for example when disinformation is spread just days before a vote, fact-checkers are often quick to correct, and at these critical moments ensuring that these corrections reach millions is pivotal.

Lastly, one should not only look at the existing fact-checking resources and say that scalable fact-checking does not work. Instead, just as these platforms scaled up from a few users to reaching billions of people around the world, we believe that fact-checking can expand and become more effective through innovative solutions.

Corrections don’t work and may backfire.

Multiple peer-reviewed studies have demonstrated that effective corrections can reduce and even eliminate the effects of disinformation. Studies trying to replicate the often-discussed “backfire effect”, in which corrections entrench false beliefs, have instead found the opposite to be true. Meanwhile, researchers are converging on best practices for effective corrections.

Effective corrections should emphasise facts (avoid repetition of the myth), contain a simple and brief rebuttal, and affirm users’ identity and worldview.

There will likely always be a small minority of ideologues, such as anti-vaccination activists, flat-earthers, or white supremacists, but this small minority is not the target of Correcting the Record. Instead, the vast majority of users, who are not ideologues but who are flooded with disinformation meant to recruit them to these conspiracy theories, are the ones who benefit most from CTR, and who can be inoculated against attempts to misinform them.

In 2019, Nyhan and Reifler (the authors of the much-cited “backfire effect” paper) and our research collaborators, Porter and Wood, co-authored a paper that should finally put the backfire effect to rest.

False news is older than social media - it has always existed, and will always exist.

We’re not facing a new problem, but this old problem has found a new playground that has a huge and rapid reach and therefore a bigger impact.

Research, including a study at MIT, found that falsehoods on social media are amplified and can spread quickly. Moreover, our societies have developed solutions to old forms of disinformation and false news, but malicious actors have largely found that social media is fertile ground that can be abused to circumvent these protections. Furthermore, social media platforms provide an aura of trust and nudge users toward interactions and outrage that make them much more vulnerable to information warfare tactics. That is why it is essential that platforms and governments expand protections by implementing corrections.

Want to learn more? Please email our media team at media@avaaz.org

