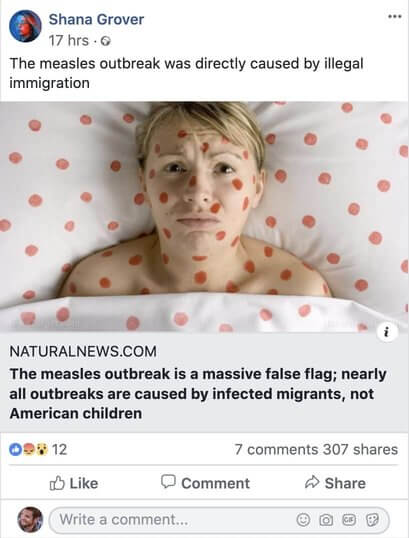

Health misinformation is a global public health threat.1 Studies have shown that anti-vaccination communities prosper on Facebook,2 that the social media platform acts as a ‘vector’ for conspiracy beliefs that are hindering people from protecting themselves during the COVID-19 outbreak,3 and that bogus health cures thrive on the social media platform.4

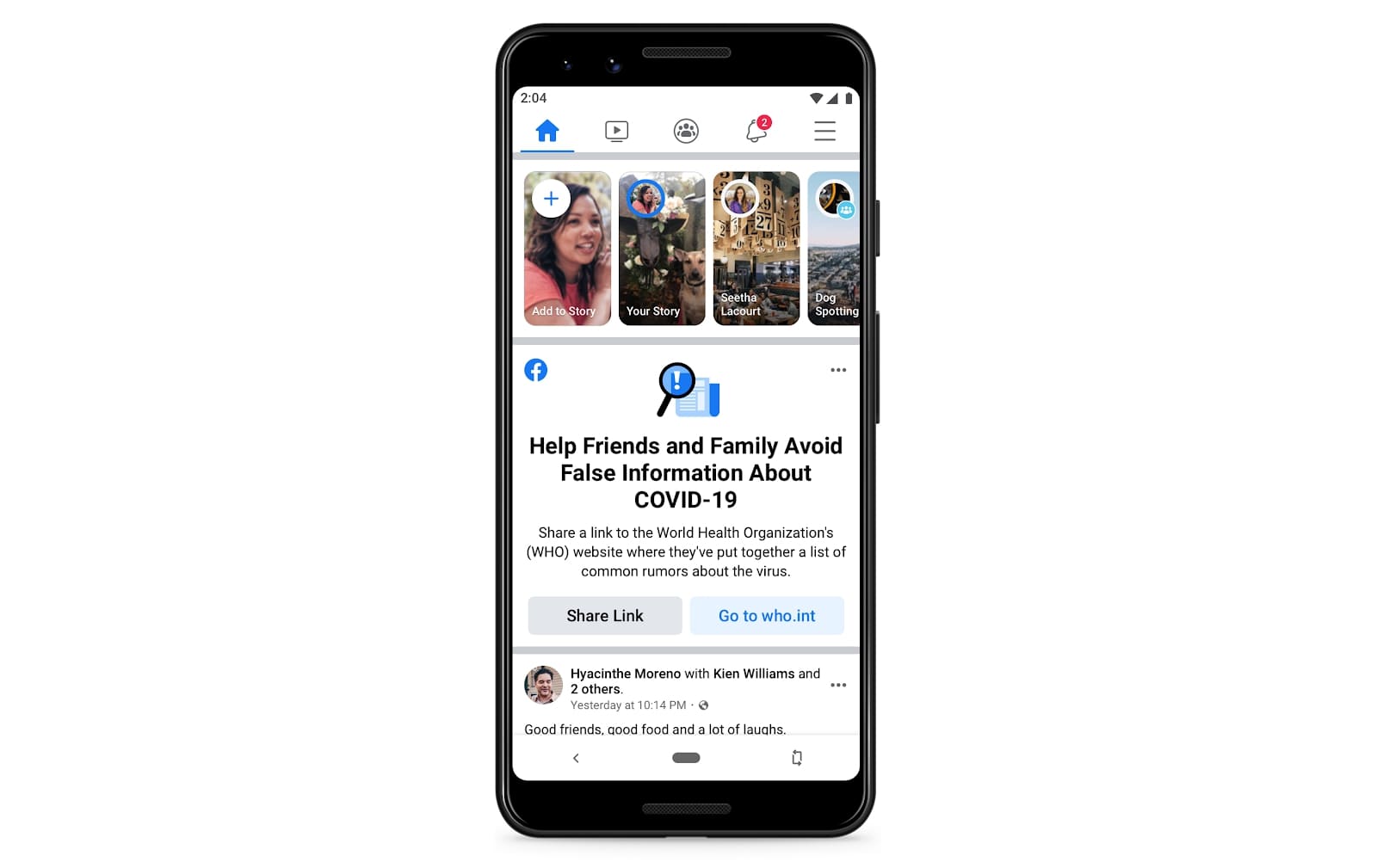

Facebook itself has promised to keep people “safe and informed” about the coronavirus,5 and well before the pandemic, acknowledged that “misleading health content is particularly bad for our community”.6

Until now, however, little has been published about the type of actors pushing health misinformation widely on Facebook and the scope of their reach. This investigation is one of the first to measure the extent to which Facebook’s efforts to combat vaccine and health misinformation on its platform have been successful, both before and during its biggest test yet: the coronavirus pandemic. It finds that even the most ambitious among Facebook’s strategies are falling short of what is needed to effectively protect society.

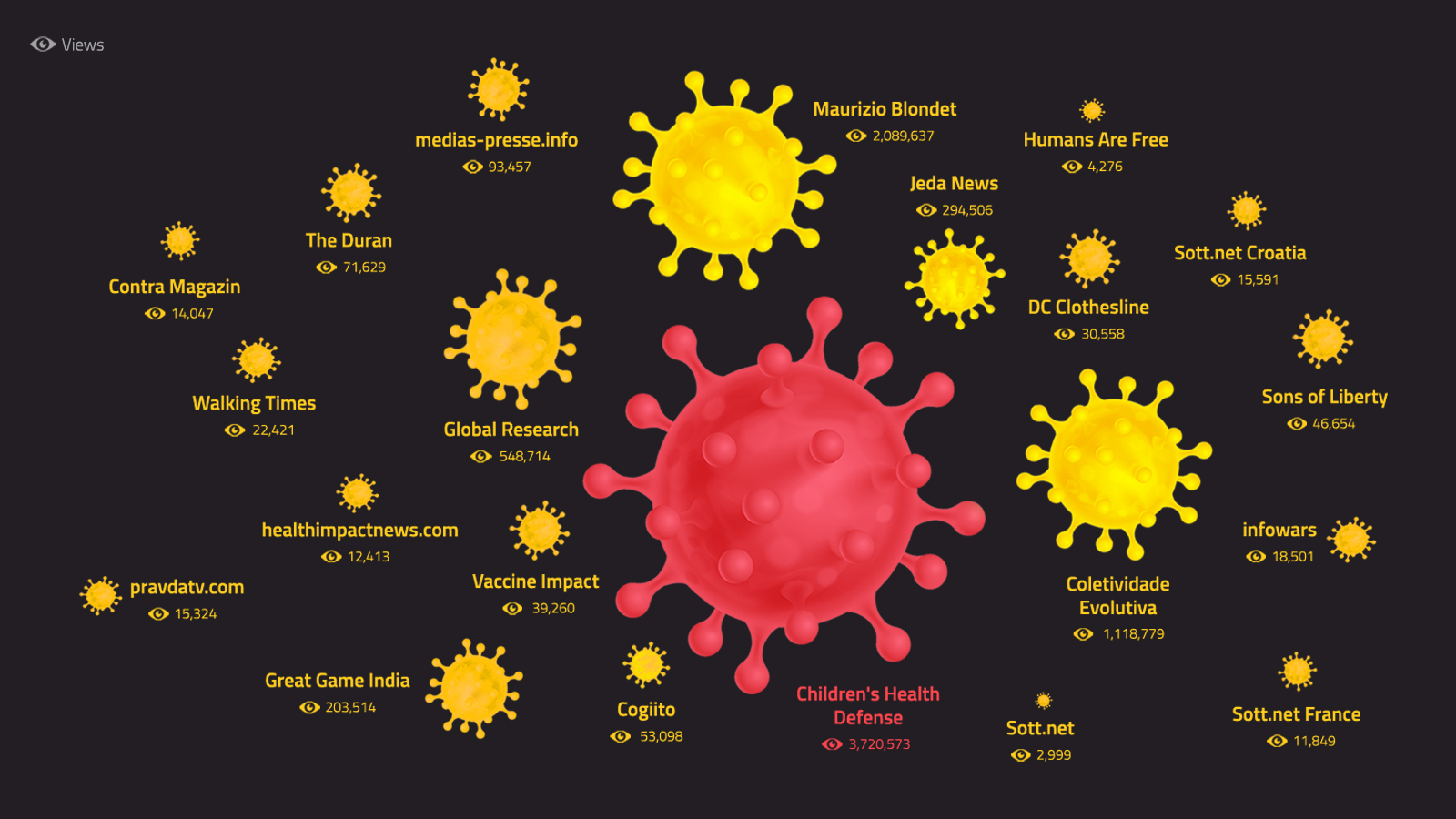

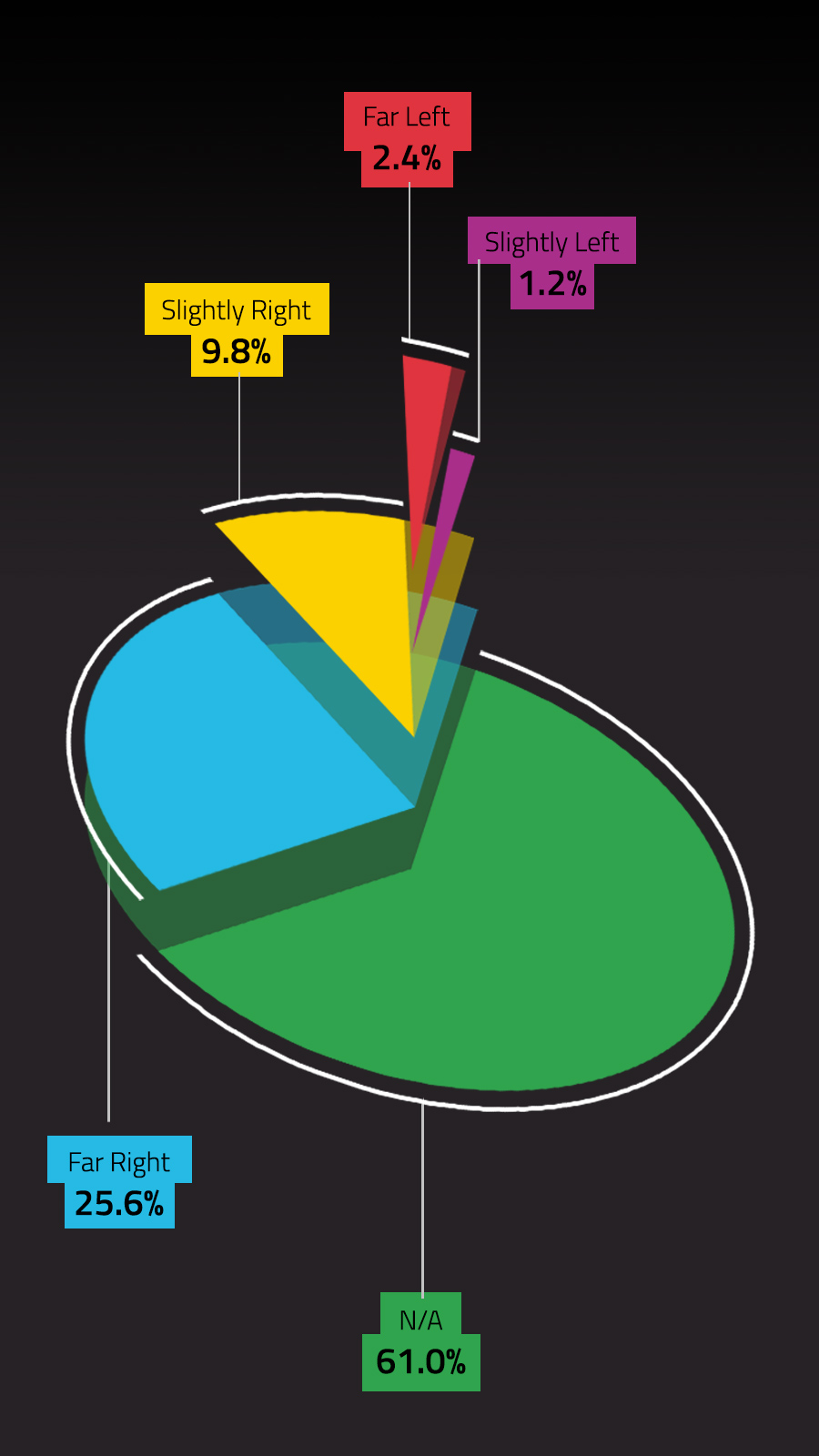

In this report, Avaaz uncovers global networks spreading health misinformation on Facebook that reached an estimated 3.8 billion views in the last year, spanning at least five countries: the United States, the UK, France, Germany, and Italy.7 Many of these networks, made up of both websites and Facebook pages, have spread vaccination and health misinformation on the social media platform for years. However, some did not appear to have had any focus on health until February 2020, when they started covering the COVID-19 pandemic.

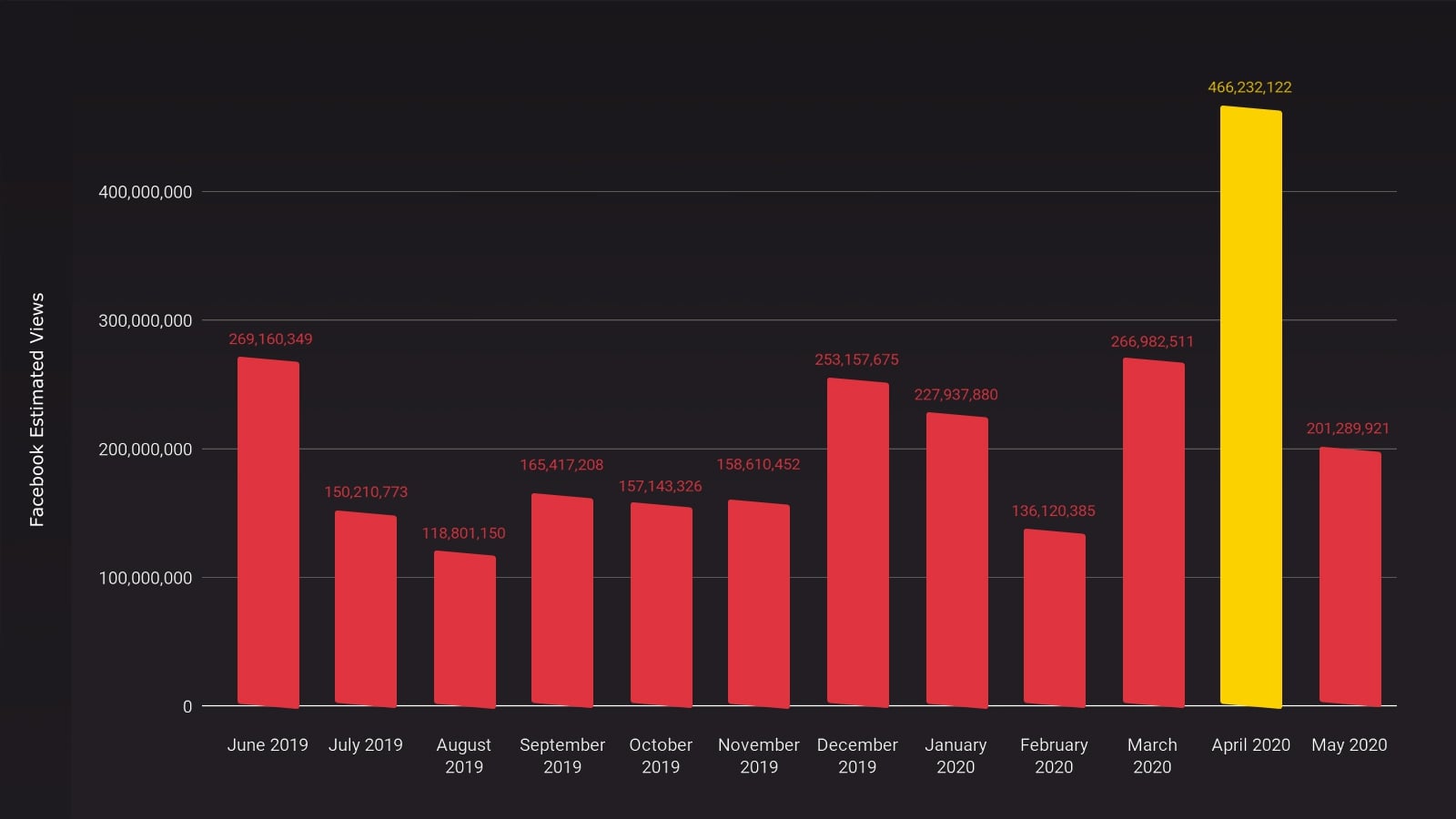

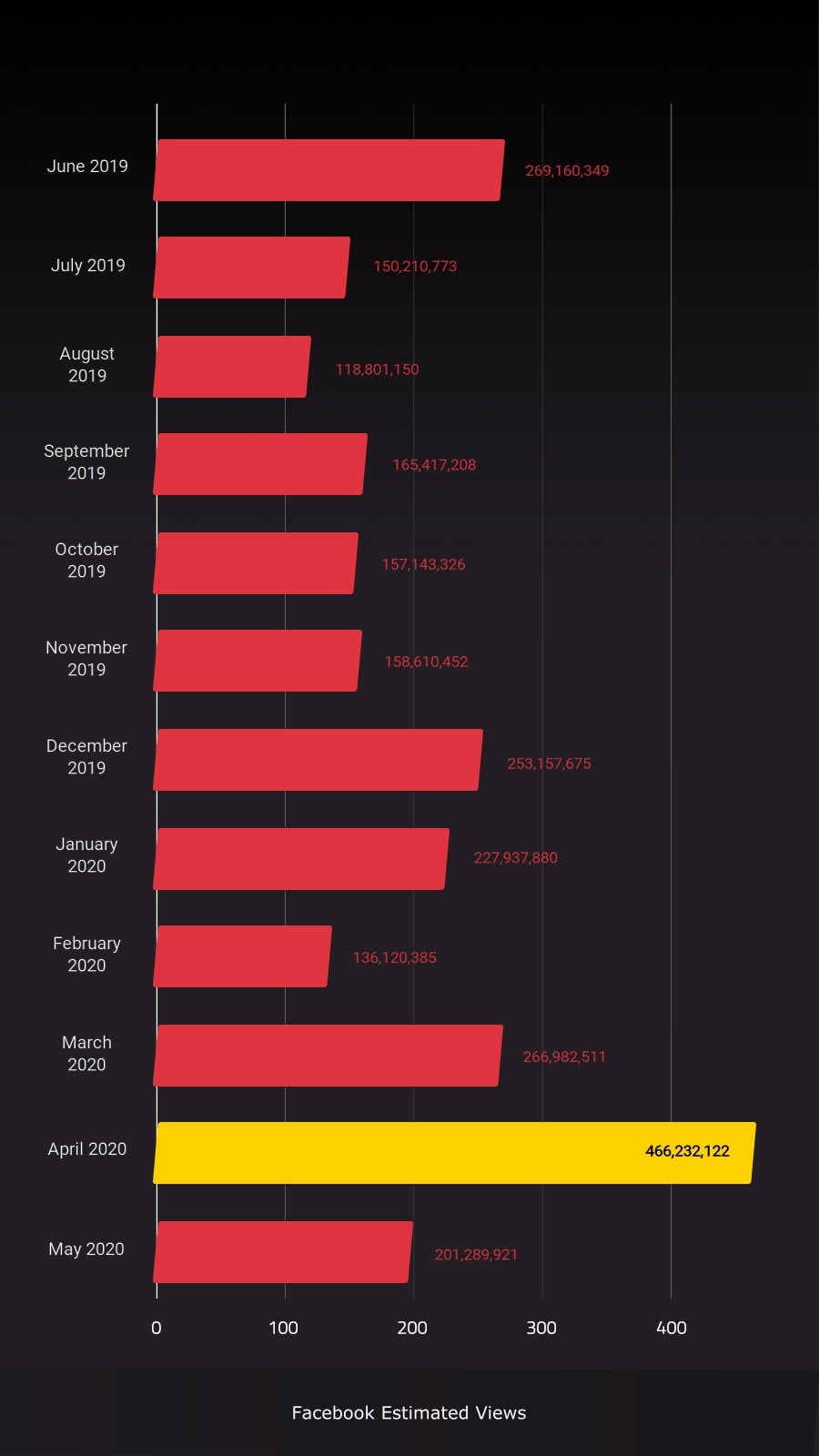

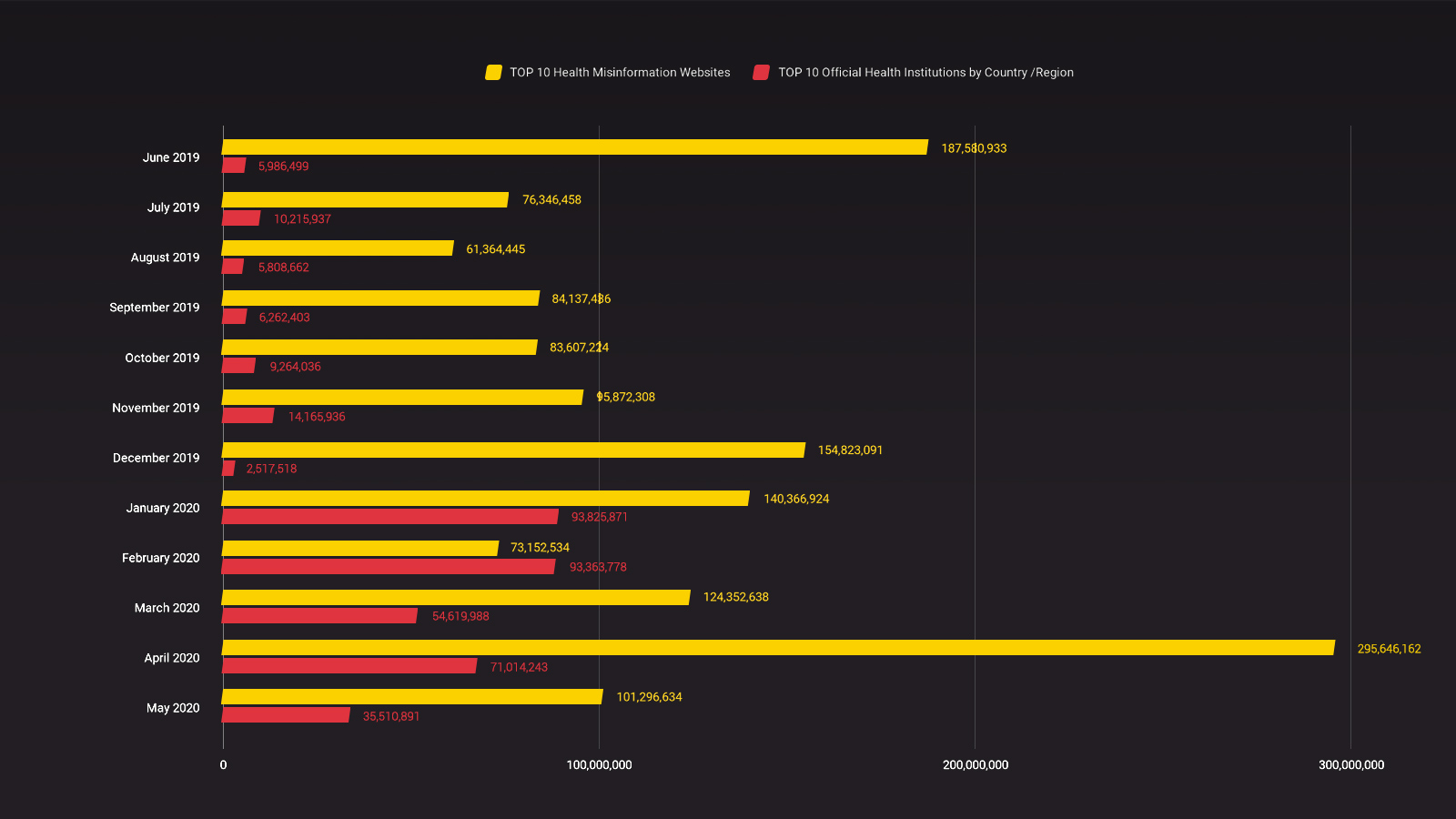

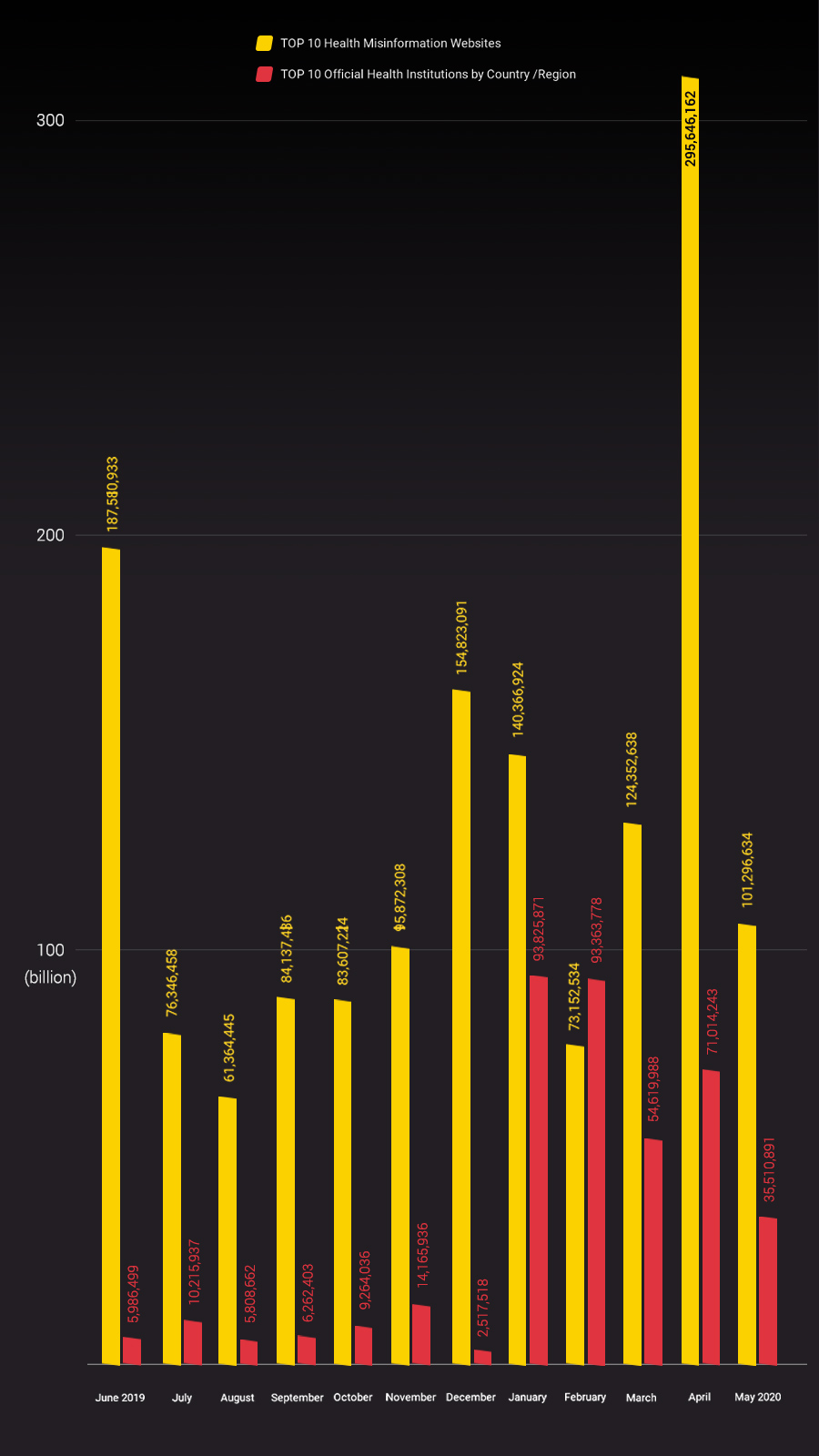

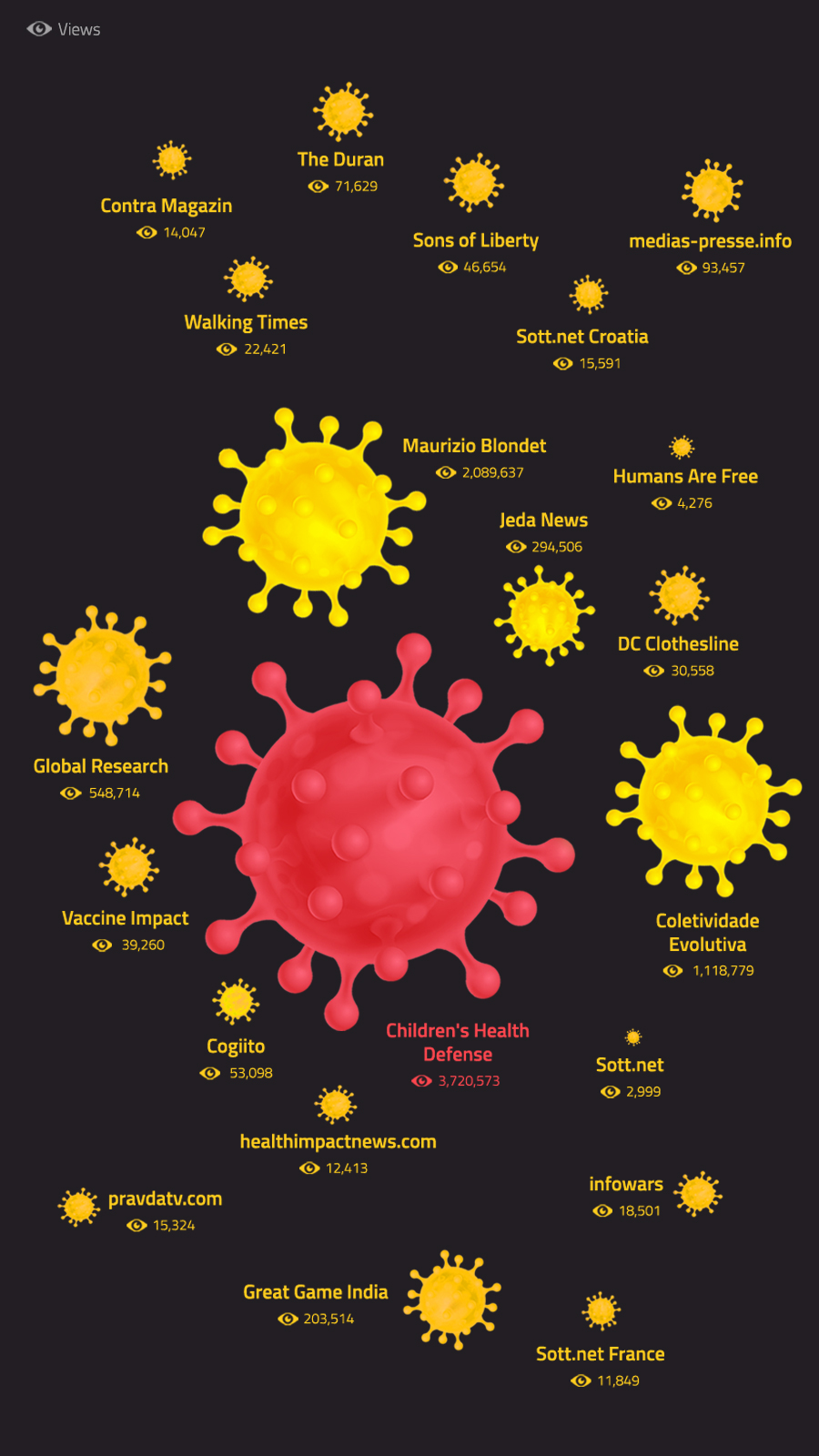

In Section 1, we take a closer look at the global health misinformation networks and show how 82 websites spreading health misinformation racked up views during the COVID-19 pandemic, peaking at an estimated 460 million views on Facebook in April 2020. These websites had all been flagged by NewsGuard for repeatedly sharing factually inaccurate information,8 many of them before the pandemic.

We compared this to content from leading health institutions and found that during the month of April, when Facebook was pushing reliable information through the COVID-19 information centre, content from the top 10 websites spreading health misinformation reached four times as many views on Facebook as equivalent content from the websites of 10 leading health institutions,9 such as the WHO and CDC.10

This section also uncovers one of the main engines spreading health misinformation on Facebook: public pages, which account for 43% of all views to the top websites we identified as spreading health misinformation on the platform.11 The top 42 Facebook pages alone generated an estimated 800 million views.

The findings in this section bring to the forefront the question of whether Facebook’s algorithm amplifies misinformation content and the pages spreading it. The scale at which networks spreading health misinformation appear to have outpaced authoritative health websites, despite the platform’s declared aggressive efforts to moderate and downgrade health misinformation and boost authoritative sources, suggests that Facebook’s moderation tactics are not keeping up with the amplification that Facebook’s own algorithm provides to health misinformation content and those spreading it.

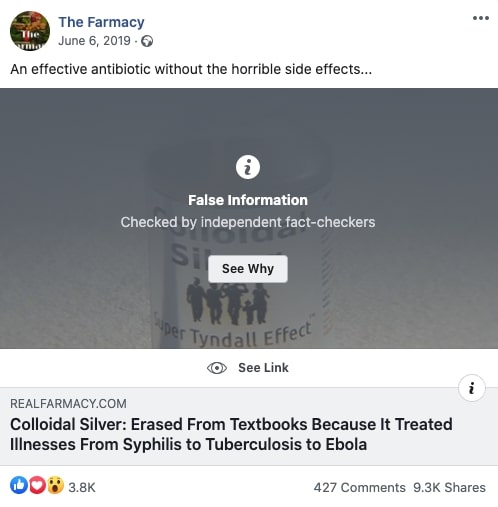

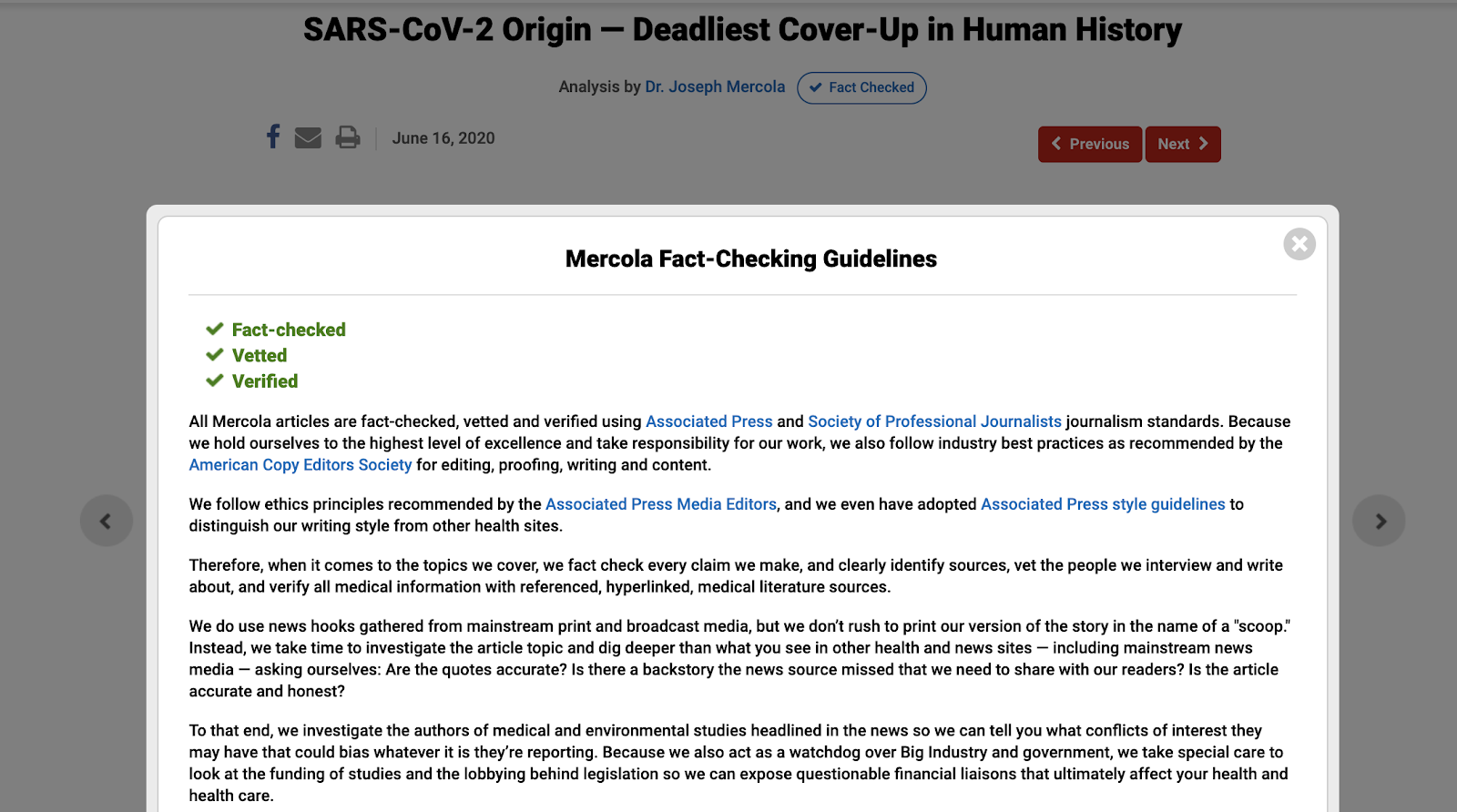

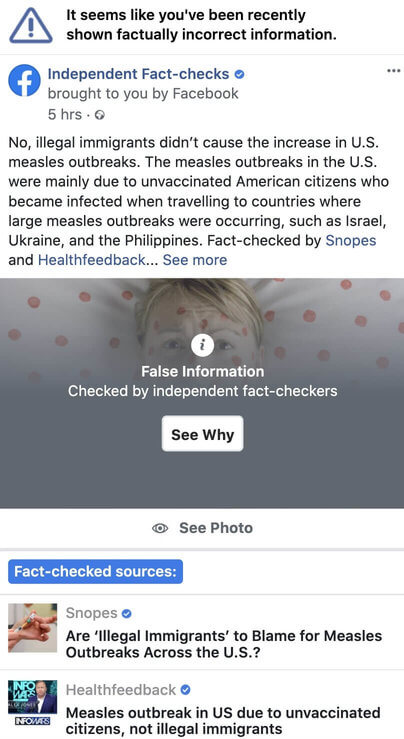

To assess Facebook’s response to misinformation spreading on its platform, we analysed a sample of 174 pieces of health misinformation published by the networks uncovered in this report, and found that only 16% of the articles and posts analysed carried a warning label from Facebook. Despite their content having been fact-checked, the other 84% of the articles and posts Avaaz analysed remain online without warnings. Facebook had promised to issue “strong warning labels” for misinformation flagged by fact-checkers and other third-party entities.12

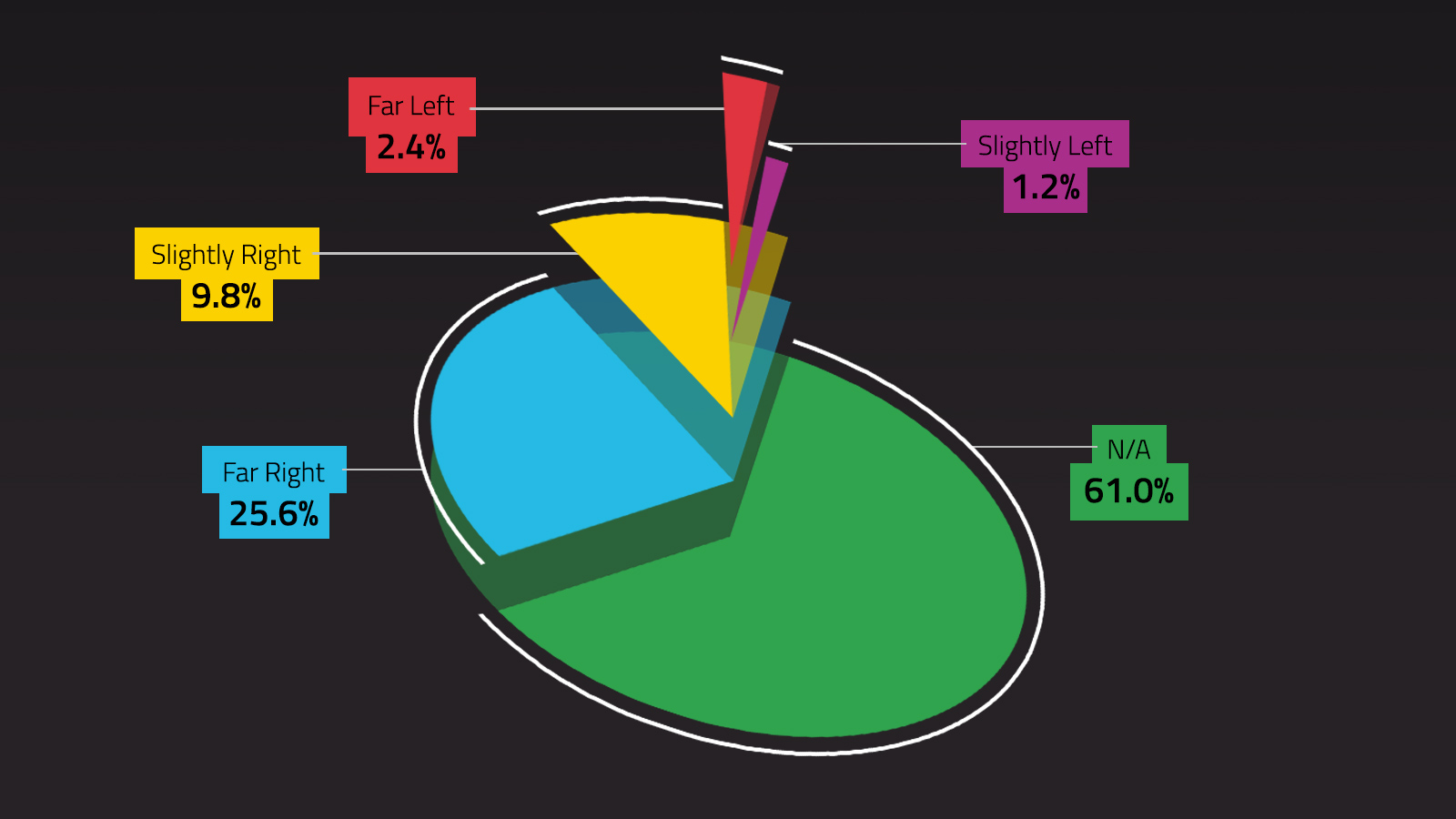

In Section 2, we look at the type of content spread by the global health misinformation networks. We also examine how many of these seemingly independent websites and Facebook pages act as networks, republishing each other's content and translating it across languages to make misinformation content reach the largest possible audience. In this way, they are often able to circumvent Facebook’s fact-checking process.

Some of the most egregious health fakes identified in this report include:

-

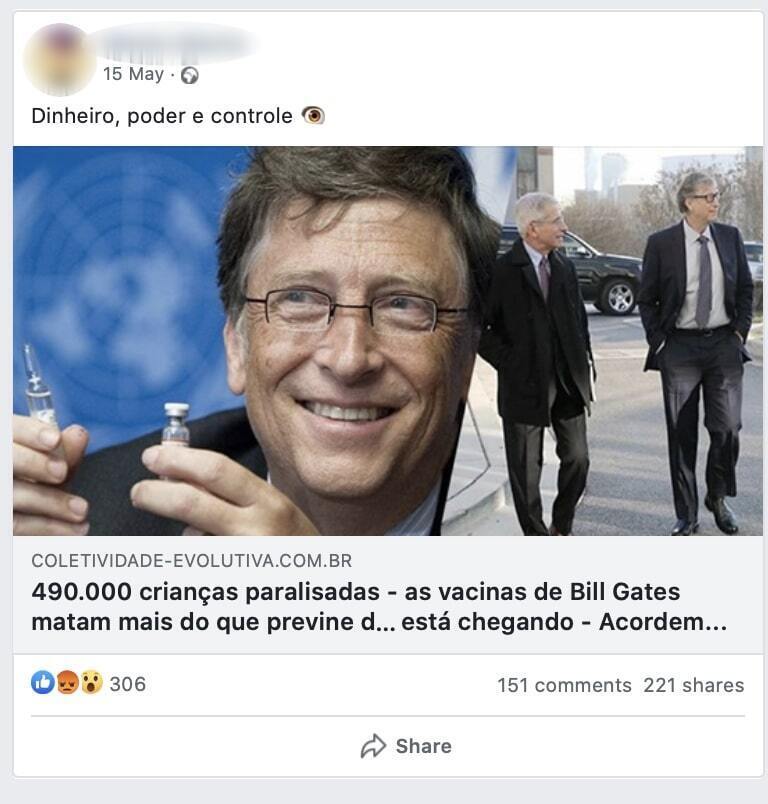

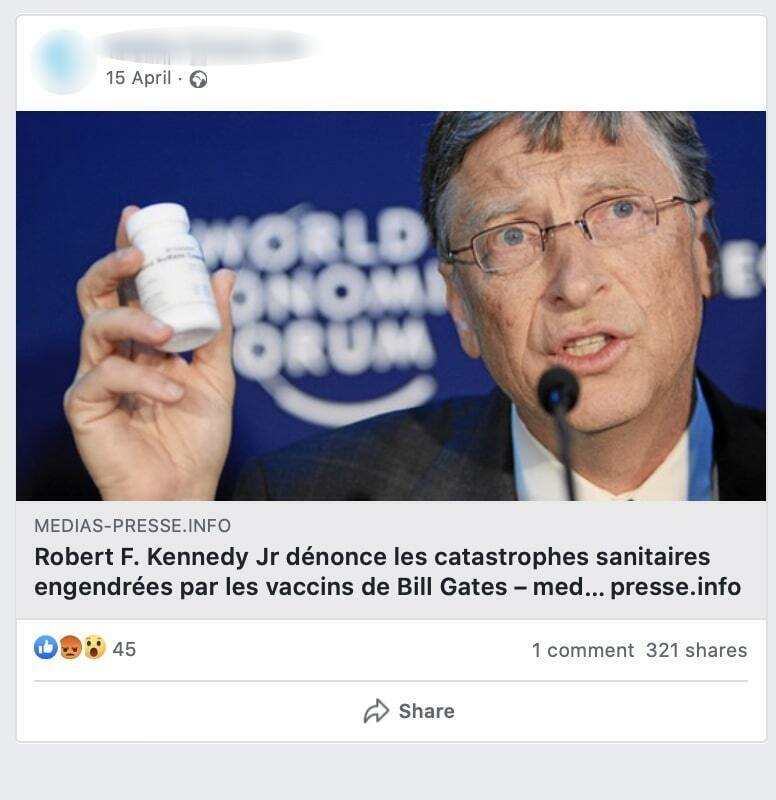

An article alleging that a global explosion in polio is predominantly caused by vaccines, and that a Bill Gates-backed polio vaccination programme led to the paralysis of almost half a million children in India. This article had an estimated 3.7 million views on Facebook and was labelled ‘false’ by Facebook. However, once websites in the networks republished the article, either entirely or partially, its clones/republications reached an estimated 4.7 million views. The subsequent articles all appear on the platform without false information labels.

-

An article that claimed that the American Medical Association was encouraging doctors and US hospitals to overcount COVID-19 deaths had an estimated 160.5 million views — the highest number of views recorded in this investigation.

-

Articles containing bogus cures, such as one wrongly implying that the past use of colloidal silver to treat syphilis, tuberculosis or ebola supports its use today as a safe alternative to antibiotics. This article reached an estimated 4.5 million views.

In Section 3, we take a deeper look at some of the highest-profile serial health misinformers to better understand their tactics and motives. We cover five case studies:

- Realfarmacy, which had 581 million views in a year, and is on track to become one of the largest health misinformation spreading networks.

- The Truth about Cancer, a family business behind a massive wave of anti-vaccination content and COVID-19 conspiracies.

- GreenMedInfo, a site that misrepresents health misinformation content as academic research.

- Dr. Mercola, a well-known leading figure in the anti-vaccination movement.

- Erin at Health Nut News, a lifestyle influencer and megaphone for the anti-vaccination movement and other conspiracy theories.

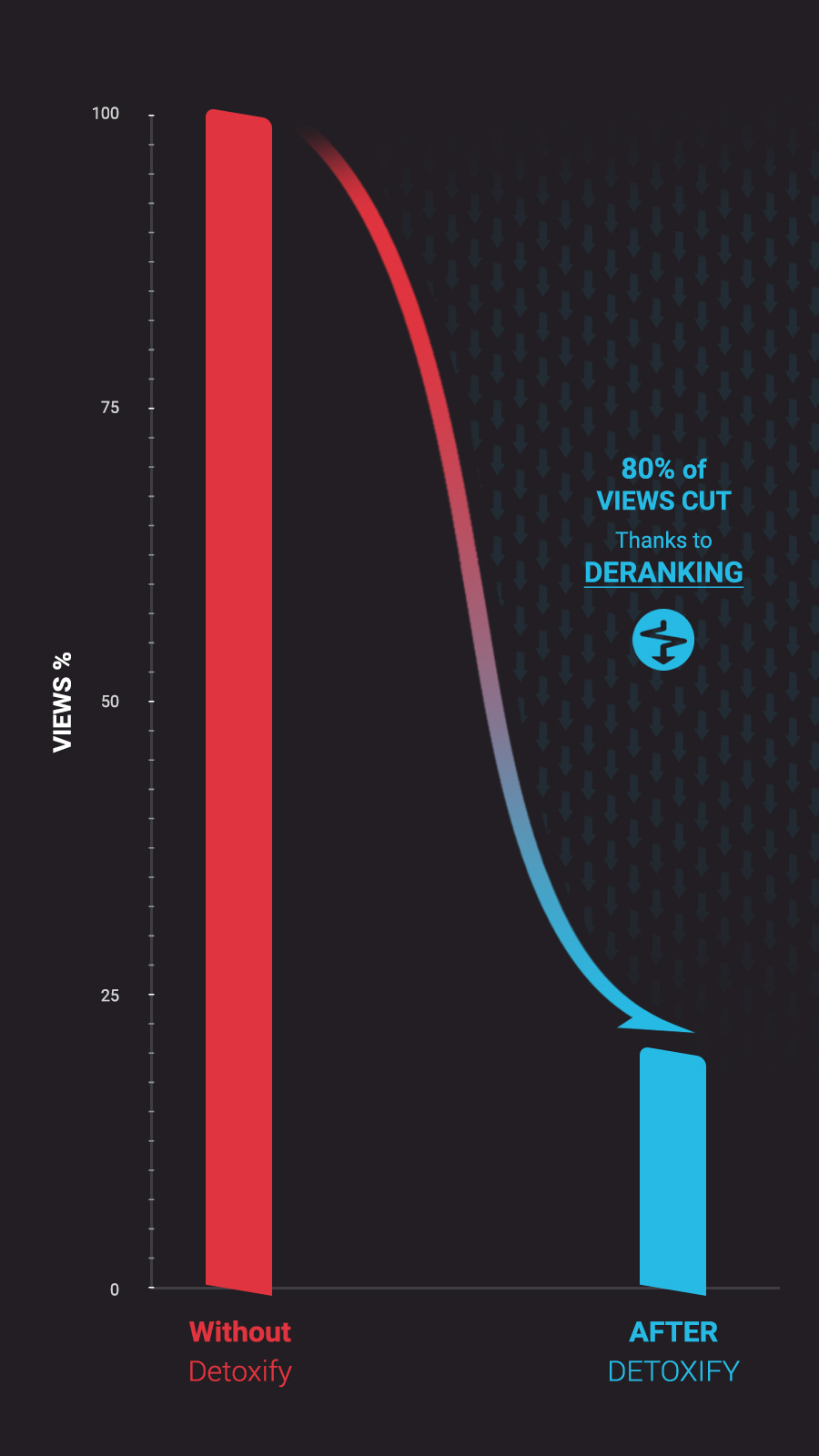

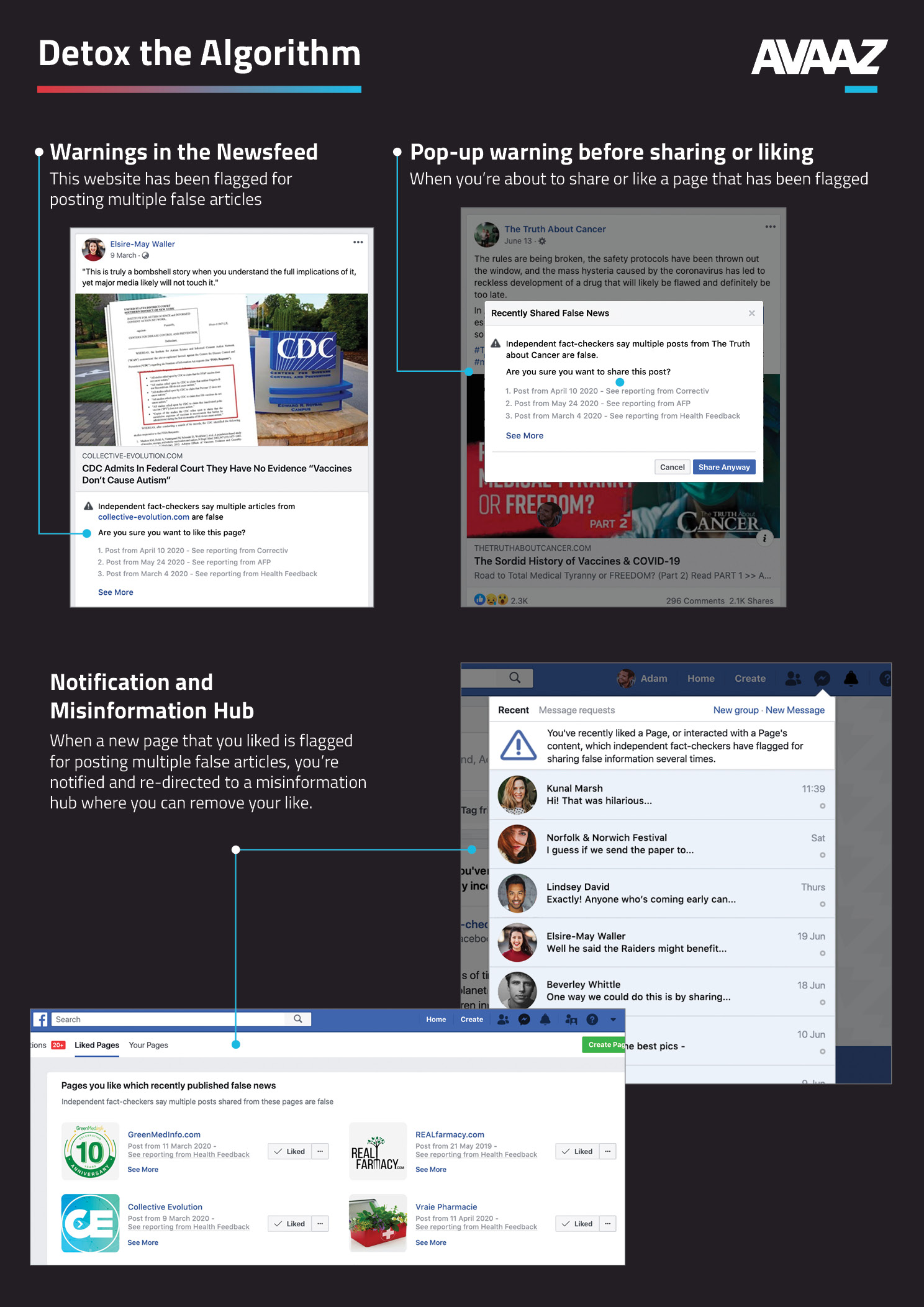

Finally, in Section 4, we present a two-step solution to quarantine this infodemic. Research shows that it could reduce the belief in misinformation by almost 50% and cut its reach by up to 80%:

-

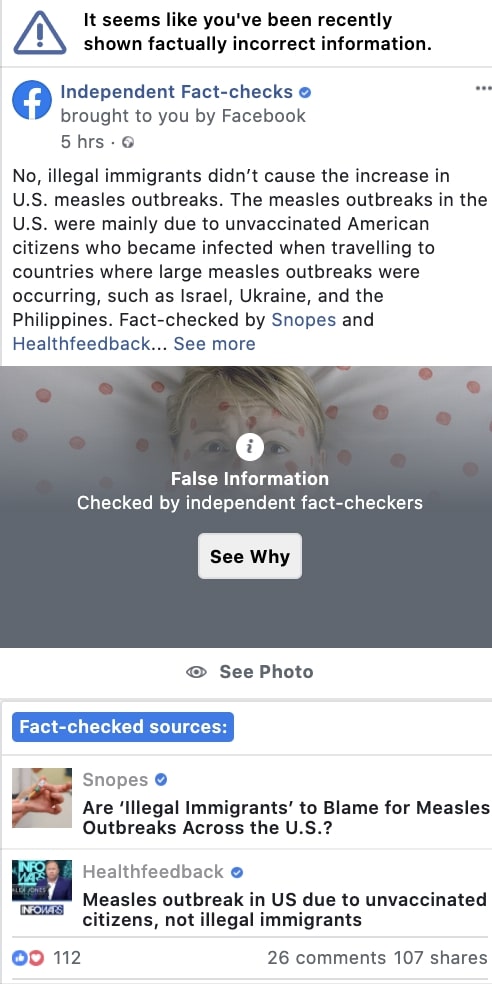

Correct the Record: providing all users who have seen misinformation with independently fact-checked corrections, decreasing the belief in the misinformation by an average of almost 50%; and

-

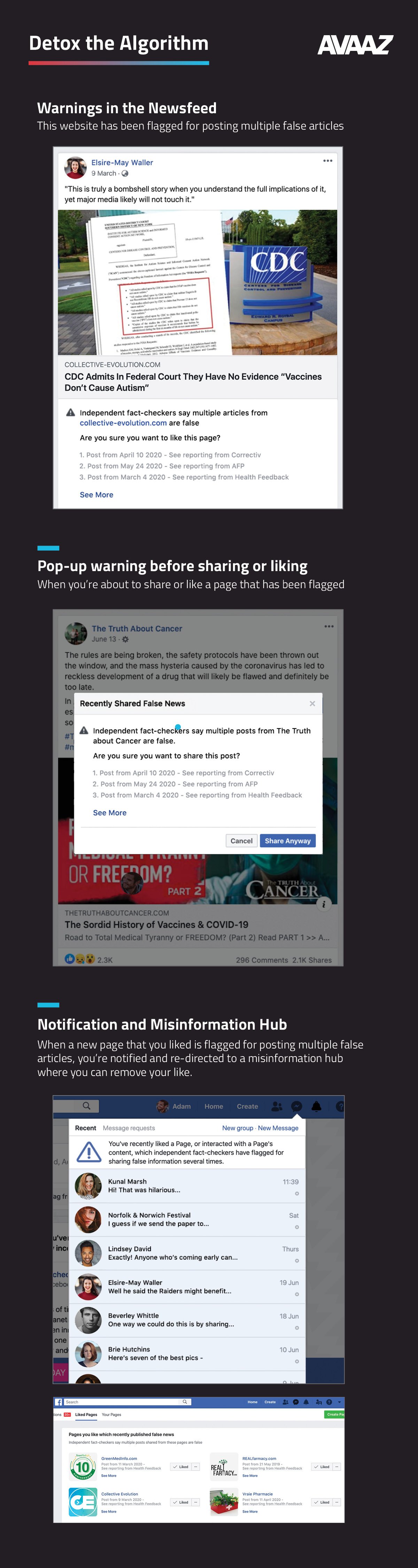

Detox the Algorithm: downgrading misinformation posts and systematic misinformation actors in users' News Feed, decreasing their reach by about 80%.