Findings

Speed of Entry Into the Rabbit Hole

Researcher #1

For this experiment, we tried to put ourselves in the shoes of a new mother who might go to Facebook for information about vaccines. Our first account simply searched for “vaccine” in Facebook’s standard user search, and we were led into the anti-vaccine subculture with a speed that was scary and disorienting. The top results were from public health bodies -- an expected finding after Facebook’s decision to direct users to more trustworthy pages -- but more questionable content started to appear near the bottom of the search results.

After scrolling past a few dozen results -- most of them

verified pages

with blue check-marks

6

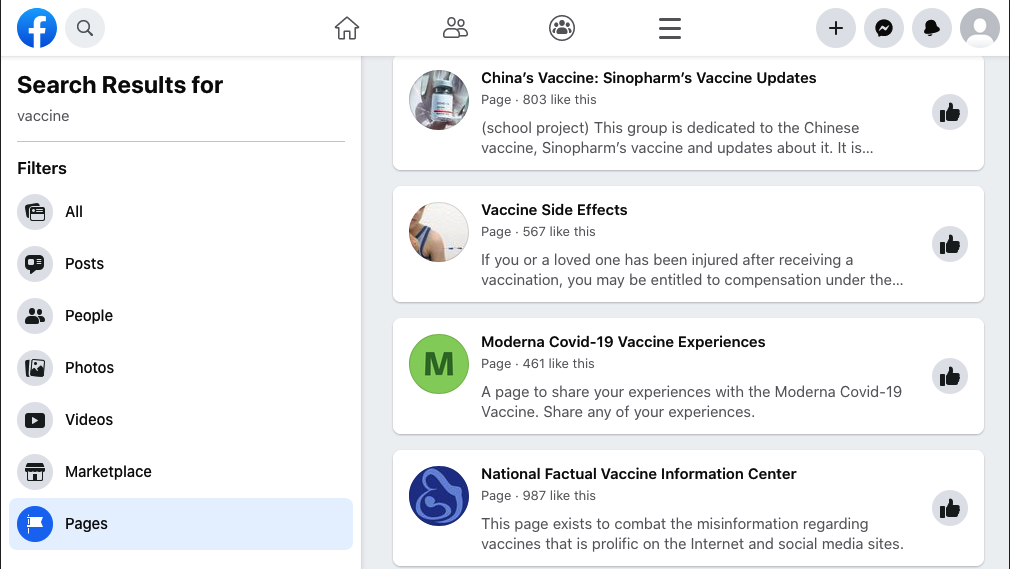

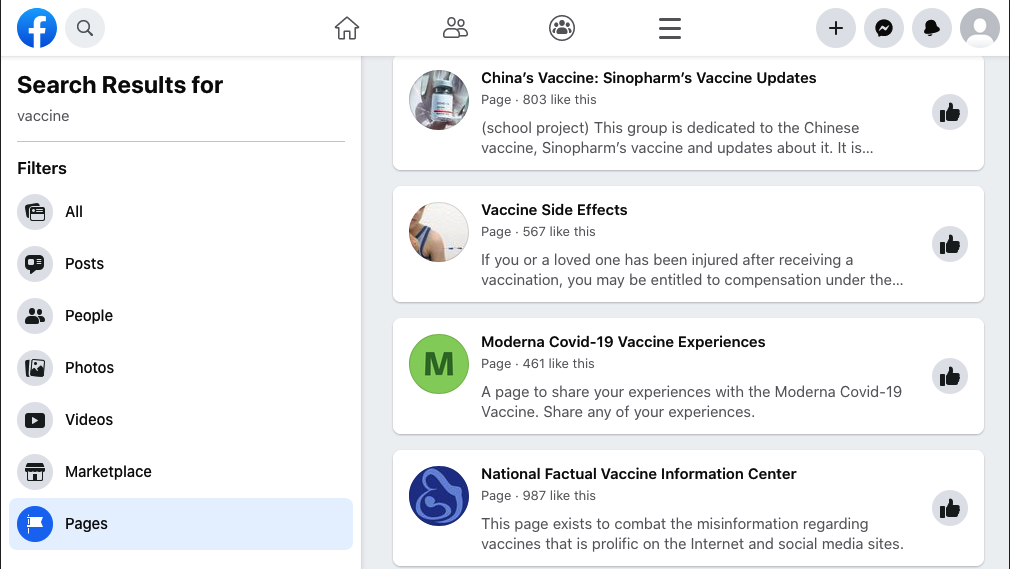

-- pages about vaccines that appeared to be low-quality and objectionable started to show up. These included pages such as “Vaccine Side Effects”, “Vaccine” (with the description “Many Links & sites on Bad Vaccines”), “RethinkVaccines”, “Informed Consent Action Network”, and “Vaccine Epidemic”.

Page results after searching for “vaccine” on Facebook

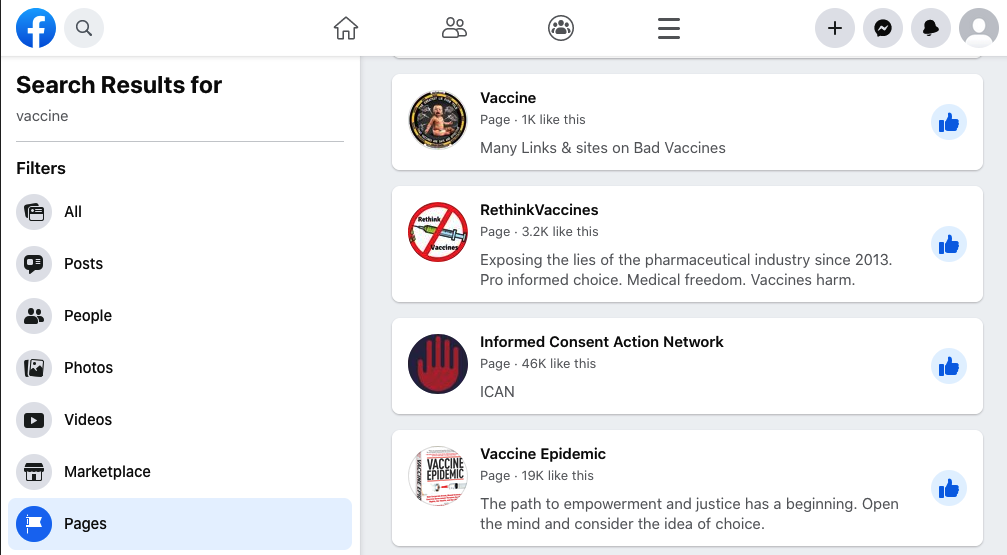

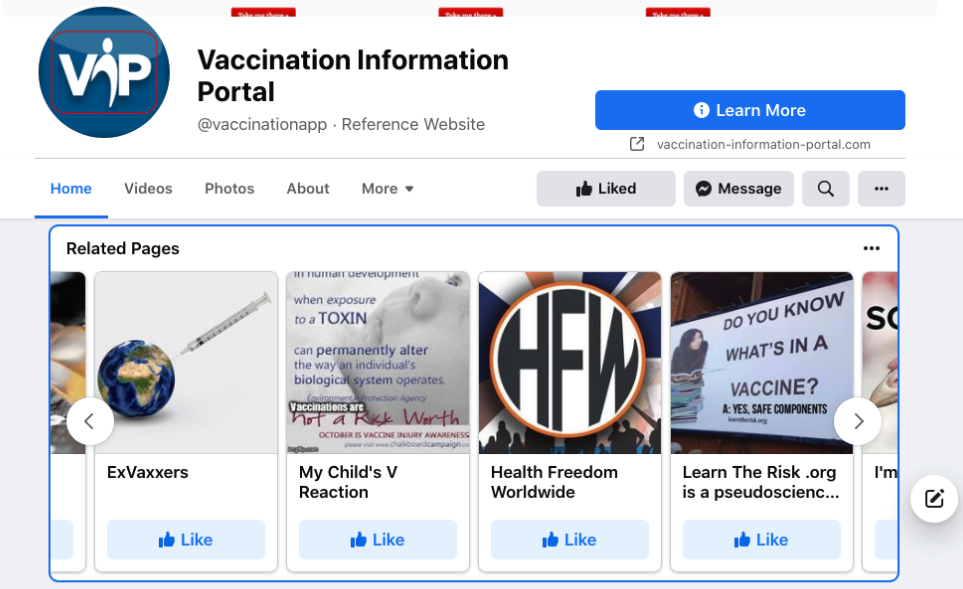

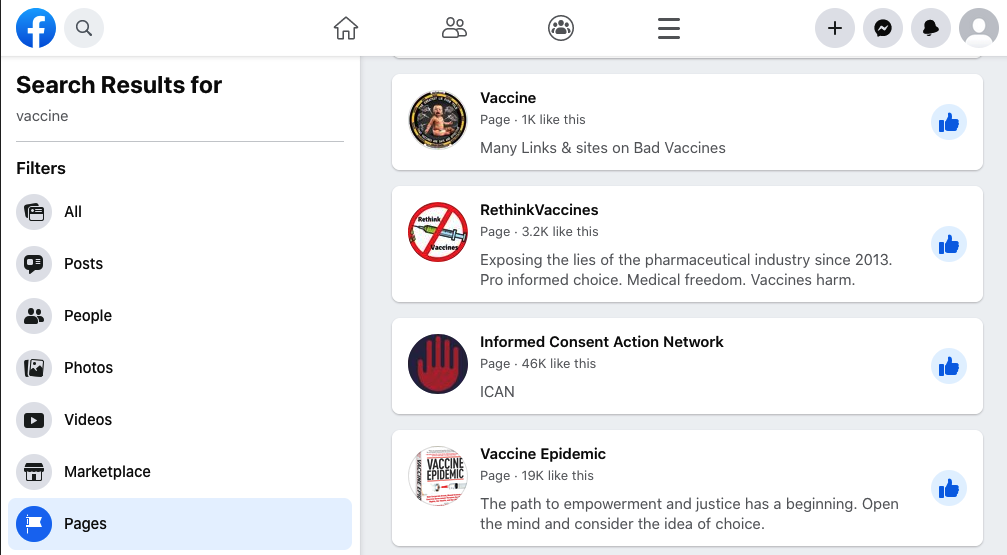

Any new mother would be curious about the potential side effects of vaccines. Following this instinct, we went to the page “Vaccine Side Effects.” While this particular page had no posts, it was our unknowing ticket into the rabbit hole. After we liked it, Facebook immediately recommended more pages to follow that contained anti-vaccine content, including “Natural Ways to Keep Healthy”, “Vaccination Information Portal”, and “Autistic by Injection”. Autistic by Injection was a dead-end, meaning it had no related pages of its own.

Vaccination Information Portal sounds like it could be a trustworthy page, but includes posts like this one from anti-vaccine advocate Prof. Christopher Exley, who claims aluminum in vaccines causes autism. Seeing such posts is confusing; we imagine it would be hard to know what information to trust, especially when the pages being suggested have benign names (like “Vaccination Information Portal”) and contain content bearing hallmarks of credibility like the title “Professor” (Prof. Exley, for instance, is a specialist in bioinorganic chemistry). “Vaccination Information Portal,” in turn, led to Facebook recommending more low-quality pages. The diagram below demonstrates our journey from liking the page “Vaccine Side Effects” to “Vaccination Information Portal” to nine more pages containing anti-vaccine content. This is just one “pathway” of many that this account followed; in total, it was led to 85 pages with objectionable content.

This “pathway tree” shows the chronological journey from the page “Vaccine Side Effects” to “Vaccination Information Portal” to nine more containing anti-vaccine content. (Note: the page “Natural Ways to Keep Healthy” also led to more problematic pages, but they are not shown here for lack of space. The page “Autistic by Injection” was a dead-end with no related pages.)
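One way to think about a “pathway tree” like this is as a small directed graph in which each page points to the pages Facebook recommended after it was liked. The sketch below is only an illustration under stated assumptions: the edges come from the description above, the nine pages reached from “Vaccination Information Portal” are not named in the text and so appear as numbered placeholders, and the breadth-first walk is our choice for the example, not a record of how the researchers actually recorded their clicks.

```python
from collections import deque

# Edges taken from the text. The nine pages reached from
# "Vaccination Information Portal" are not named in the text, so they are
# numbered placeholders (an assumption for illustration only).
recommendations = {
    "Vaccine Side Effects": [
        "Natural Ways to Keep Healthy",
        "Vaccination Information Portal",
        "Autistic by Injection",
    ],
    "Vaccination Information Portal": [f"Unnamed page {i}" for i in range(1, 10)],
    "Autistic by Injection": [],  # explored, but no related pages were shown
}


def walk_pathway(graph, start):
    """Breadth-first walk; returns (page, depth) in the order pages are reached."""
    seen = {start}
    order = [(start, 0)]
    queue = deque([(start, 0)])
    while queue:
        page, depth = queue.popleft()
        for nxt in graph.get(page, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append((nxt, depth + 1))
                queue.append((nxt, depth + 1))
    return order


def dead_ends(graph):
    """Pages that were explored (present as keys) but recommended nothing."""
    return [page for page, children in graph.items() if not children]


pathway = walk_pathway(recommendations, "Vaccine Side Effects")
for page, depth in pathway:
    print("  " * depth + page)
```

Pages that appear only as recommendations, and not as keys, are treated as unexplored rather than as dead-ends, which is why “Natural Ways to Keep Healthy” is not reported by `dead_ends` here.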

Researcher #2

Meanwhile, our other researcher started their journey slightly differently. They first liked the page Energetic Health Institute -- a page with nearly 100K followers belonging to a “holistic medicine” organization out of Oregon that has previously shared multiple pieces of misinformation. For example, they have claimed that data from the CDC’s Vaccine Adverse Event Reporting System (VAERS) proves that the COVID-19 vaccine is killing thousands of Americans. This is despite the CDC’s VAERS disclaimer, and numerous fact checks, cautioning that: “The number of reports alone cannot be interpreted or used to reach conclusions about the existence, severity, frequency, or rates of problems associated with vaccines.”

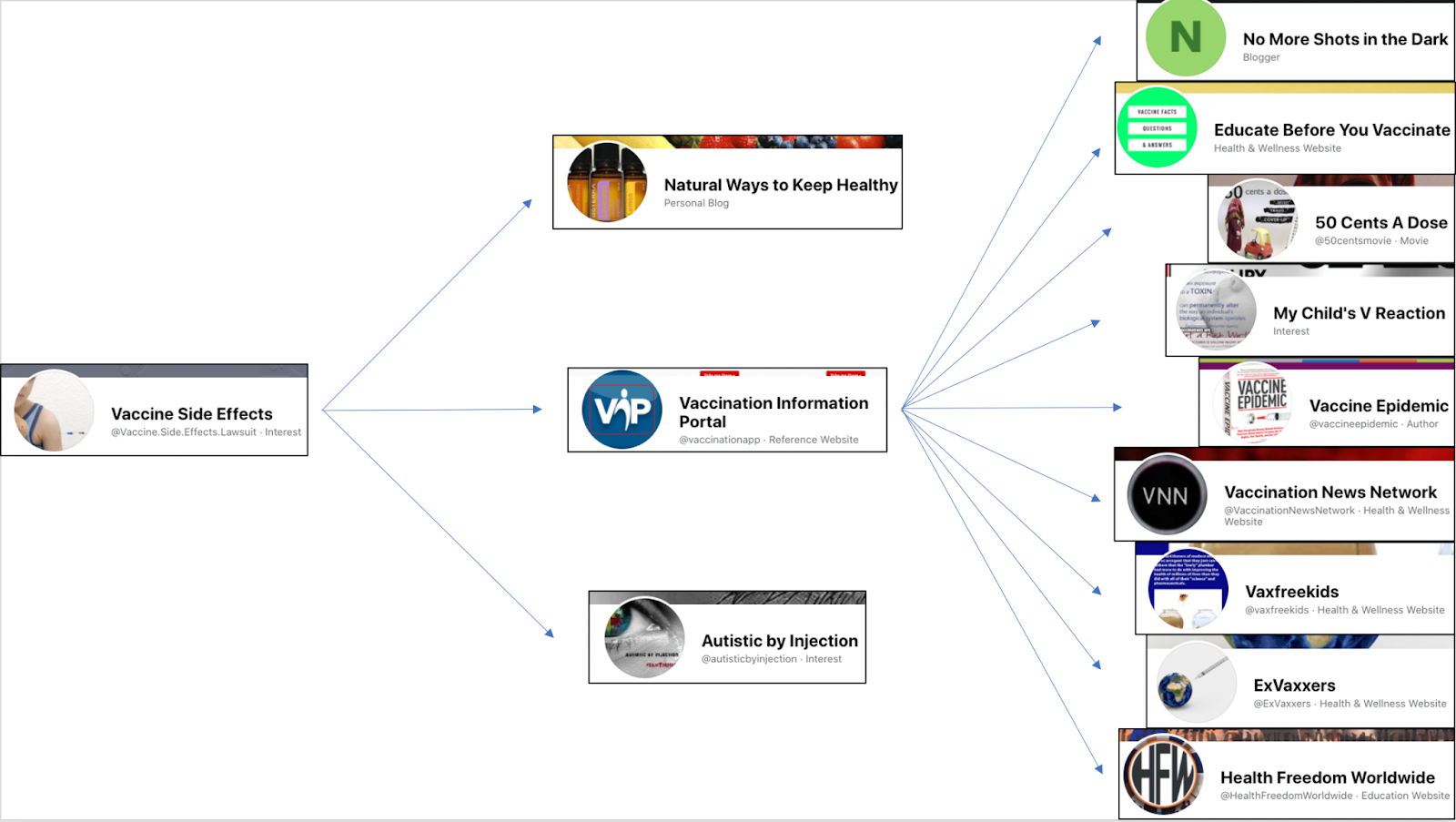

After we liked the Energetic Health Institute, the Facebook algorithm immediately recommended the page “Del Bigtree”, belonging to a well-known anti-vaccine advocate who founded the organization Informed Consent Action Network (a page that Facebook also recommended to our other account when they searched “vaccine”). This particular page has not been active since 2018 (although Bigtree’s personal page still is), but its About section and older posts promote Bigtree’s movie “VAXXED”, leading us to a website where we could purchase the movie and “join the movement.”

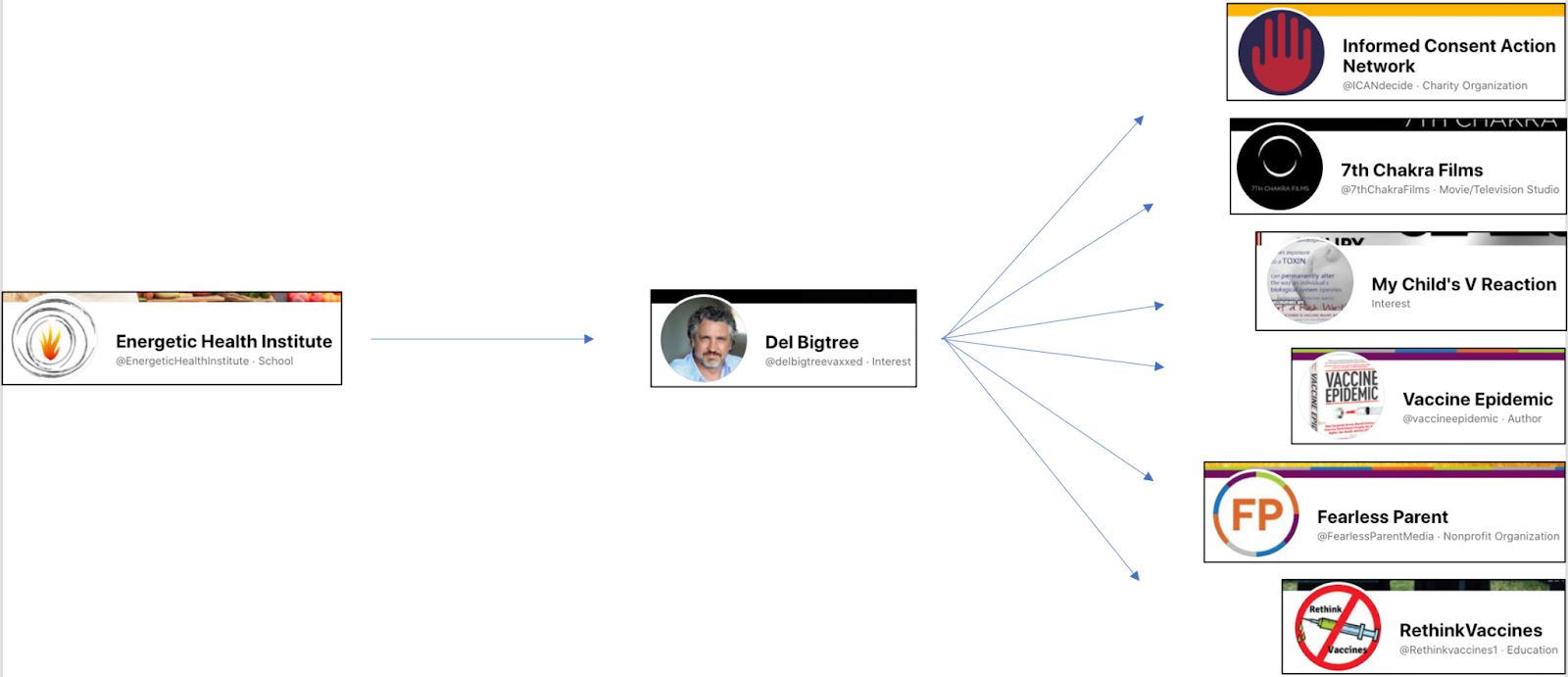

From Del Bigtree, the Facebook algorithm recommended six more pages containing anti-vaccine content, pictured in the diagram below.

This “pathway tree” shows the chronological journey from the page “Energetic Health Institute” to “Del Bigtree” to six more containing anti-vaccine content.

Both Researchers

Between our two accounts, we liked and documented 109 unique pages containing anti-vaccine content, with a total of 1.4 million followers. Even though each researcher followed their own intuition and encountered their own pathways, we liked and documented 59 of the same pages. Over two days and in a dataset this small, we believe that encountering more than half of the same pages (59 of 109, or about 54%) is intriguing. It could suggest that, regardless of user behavior, Facebook’s recommendation algorithm has created an internal network of related pages containing anti-vaccine content that it “pulls” from to suggest new pages to users. If this is true, we can only assume that similar algorithmic “networks” might exist for other objectionable topics as well.
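The overlap share can be checked directly from the two counts reported above. The snippet below is a minimal sketch: the function names are ours, and the set-based variant assumes each account's liked pages are available as a set of page names, which is not something the text provides.

```python
def overlap_share(shared: int, total_unique: int) -> float:
    """Fraction of all unique pages that both accounts encountered."""
    if total_unique <= 0:
        raise ValueError("total_unique must be positive")
    return shared / total_unique


def overlap_from_sets(pages_a: set, pages_b: set) -> float:
    """Same measure computed from two sets of page names (hypothetical input)."""
    return overlap_share(len(pages_a & pages_b), len(pages_a | pages_b))


# Counts reported in the text: 59 shared pages out of 109 unique pages.
share = overlap_share(shared=59, total_unique=109)
print(f"{share:.1%}")  # prints 54.1%
```

Dividing the shared pages by the union of both accounts' pages, rather than by either account's own total, keeps the measure symmetric between the two researchers.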

Pages of Note: Anti-Vaccine Advocates and Dead-Ends

As previously mentioned, in the course of our research the algorithm recommended that we like pages that appear to be associated with known anti-vaccine advocates and organizations, including:

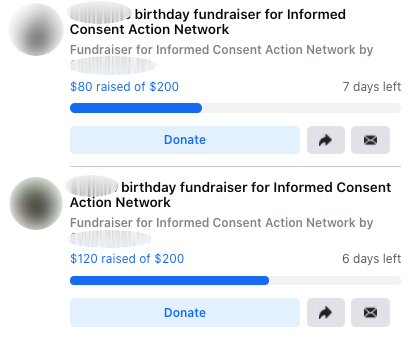

Combined, these 13 pages have over 439,000 followers. Perhaps more troubling is that Informed Consent Action Network, James Lyons-Weiler, PhD, and Institute for Pure and Applied Knowledge are still using Facebook to raise money, despite Facebook’s statement that removing access to fundraising tools is one way they could take action against pages spreading vaccine misinformation.

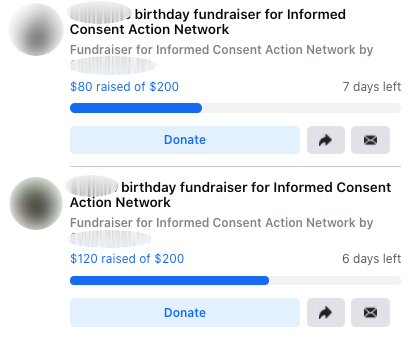

Examples of fundraisers for Informed Consent Action Network, Del Bigtree’s anti-vaccine organization

Additionally, Facebook recommended 18 pages containing anti-vaccine content that we describe as “dead-ends”: pages that, once liked, showed no further related pages of their own. It is not clear why these pages have no recommended pages of their own, nor why the page recommendation algorithm served these pages to us in the first place. One possible explanation is that this is a type of action Facebook has taken against pages that have shared health misinformation; however, without transparency from Facebook, we cannot know this for sure. The “dead-end” pages we encountered, in order of followers from high to low, are as follows:

- Learn the Risk
- Hear This Well
- The Thinking Moms' Revolution
- The Drs. Wolfson
- Informed Mothers
- Freedom Angels
- The Healthy Alternatives
- 1986: The Act
- James Lyons-Weiler, PhD
- The Untrivial Pursuit
- Vaccine Injury Awareness Month Australia
- Vaccine-Awareness
- Vaccinations - Out of Control
- Autistic by Injection
- No More Shots in the Dark
- International Advocates Against Mandates
- 7th Chakra Films
- 50 Cents A Dose

Algorithmic Association Between Vaccines and Autism

In the course of our research, Facebook suggested 11 pages related to autism. Three of them -- Autistic by Injection, End Autism Now, and TRUTH TIME -- explicitly link vaccines to autism, a particularly upsetting finding given Facebook’s pledge to remove posts claiming that vaccines cause autism. On at least 7 of the remaining pages, our researchers could not find any anti-vaccine content. This is perhaps more troubling, as it raises the question: Why has Facebook’s recommendation algorithm learned to associate anti-vaccine content with unrelated pages about autism?

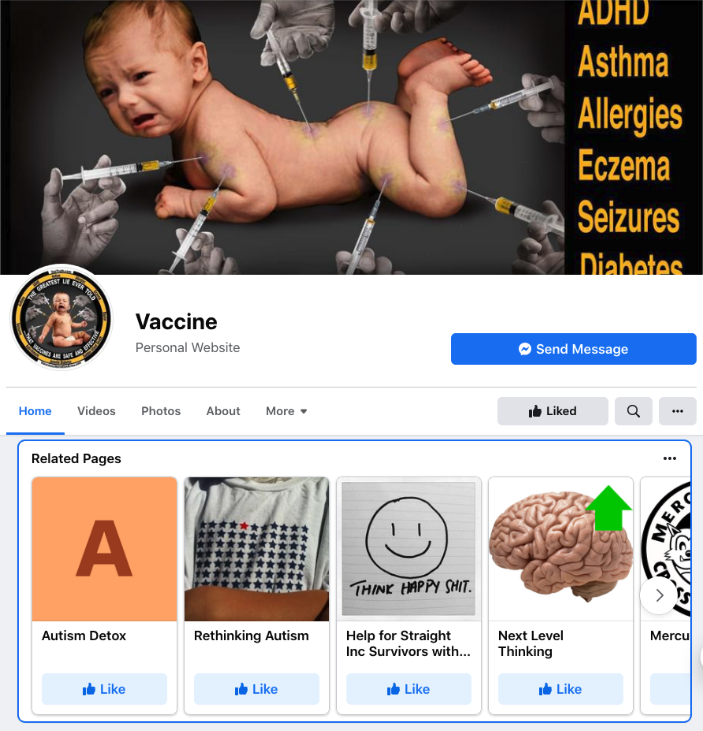

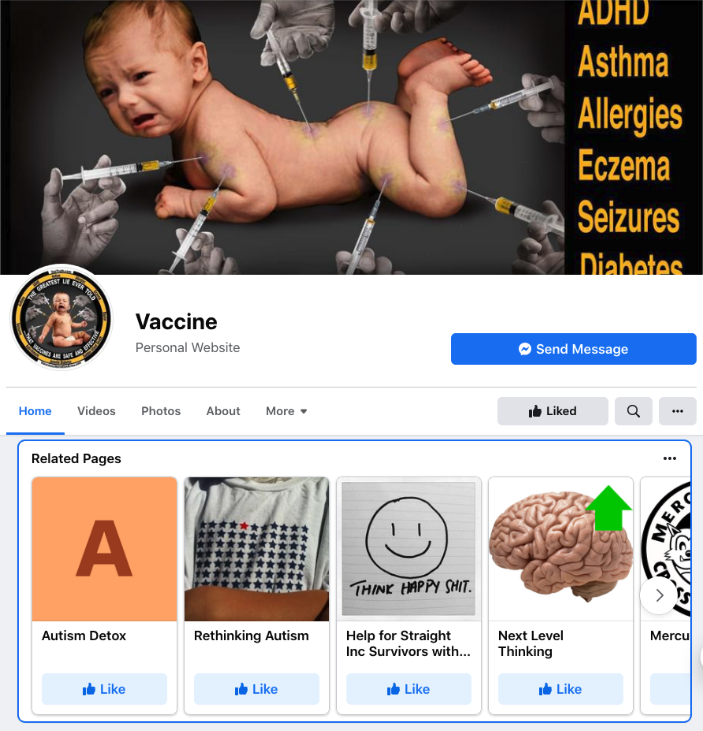

From the page “Vaccine,” Facebook suggested two autism-related pages to us: Autism Detox and Rethinking Autism. While the page “Autism Detox” seems to make the problematic suggestion that autism is something to be “detoxed”, neither of these pages appears to have any anti-vaccine content.

One could argue that the algorithmic link that Facebook appears to have created between vaccines and autism is a form of misinformation in and of itself: even if a user never visits the autism-related pages, by seeing their names appear in the Related Pages carousel they could start to associate anti-vaccine content with autism, a dangerous and bigoted falsehood.

Climbing Out of the Rabbit Hole

Facebook’s related pages algorithm could work to lead users out of the anti-vaccine rabbit hole, as opposed to into it. On a few occasions, our researchers were prompted to like pages that appeared to provide factual information about vaccines (such as “parody” accounts debunking the claims of prominent anti-vaccine advocates), or pages that were unrelated to vaccines altogether.

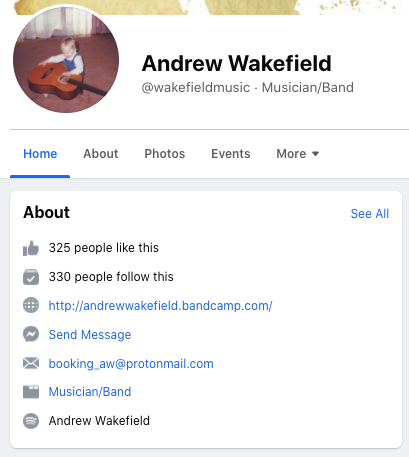

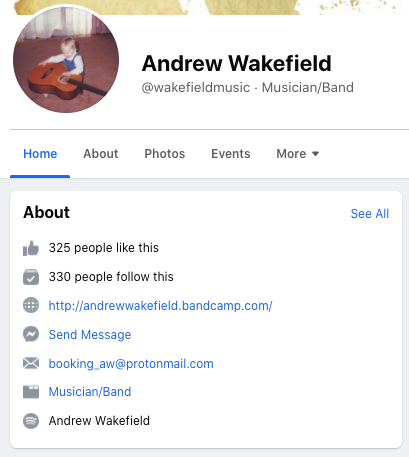

One intriguing example is that the Facebook page recommendation algorithm suggested the page Andrew Wakefield, belonging to a musician whose page is completely unrelated to vaccines. However, because this musician shares his name with the other Andrew Wakefield, the anti-vaccine advocate, we believe Facebook’s recommendation algorithm has learned to associate it with anti-vaccine content.

The Facebook algorithm recommended the page Andrew Wakefield, a musician unrelated to vaccine content but who shares a name with the anti-vaccine advocate Andrew Wakefield.
