Section One - From Election to Insurrection: How Facebook Failed American Voters

Just days before America went to the polls, Mark Zuckerberg said that the US election was going to be a test for the platform.

After years of revelations about Facebook’s role in the 2016 vote, the election would showcase four years of work by the platform to root out foreign interference, voter suppression, calls for violence and more.

According to Zuckerberg, Facebook had a “responsibility to stop abuse and election interference on [the] platform” which is why it had “made significant investments since 2016 to better identify new threats, close vulnerabilities and reduce the spread of viral misinformation and fake accounts.”

Mark Zuckerberg: “Election integrity is and will be an ongoing challenge. And I’m proud of the work that we have done here.”

But how well did Facebook do?

In a Senate hearing in November 2020, Zuckerberg highlighted some of the platform’s key steps:

“...we introduced new policies to combat voter suppression and misinformation [...] We worked with the local election officials to remove false claims about polling conditions that might lead to voter suppression. We partnered with Reuters and the National Election Pool to provide reliable information about results. We attached voting information to posts by candidates on both sides and additional contexts to posts trying to delegitimize the outcome.”

In January of this year, in an interview for Reuters after the election, Sheryl Sandberg said that the platform’s efforts “paid off in the 2020 election when you think about what we know today about the difference between 2016 and 2020.”

In very specific areas, such as Russian disinformation campaigns, Ms. Sandberg may be right, but when one looks at the bigger disinformation and misinformation picture, the data tells a very different story. In fact, these comments by Mr. Zuckerberg and Ms. Sandberg point to a huge transparency problem at the heart of the world’s largest social media platform - Facebook only discloses the data it wants to disclose and therefore evaluates its own performance, based on its own metrics. This is problematic. Put simply, Facebook should not score its own exam.

This report aims to provide a more objective assessment. Based on our investigations during the election and post-election period, and using hard evidence, this report shows clearly how Facebook did not live up to its promises to protect the US elections.

The report seeks to calculate the scale of Facebook’s failure - from its seeming unwillingness to prevent billions of views on misinformation-sharing pages, to its ineffectiveness at preventing millions of people from joining pages that spread violence-glorifying content. Consequently, voters were left dangerously exposed to a surge of toxic lies and conspiracy theories.

But more than this, its failures helped sweep America down the path from election to insurrection.

The numbers in this section show Facebook’s role in providing fertile ground for, and incentivizing, a larger ecosystem of misinformation and toxicity, which we argue contributed to radicalizing millions and helped create the conditions in which the storming of the Capitol building became a reality.

Facebook could have stopped nearly 10.1 billion estimated views of content from top-performing pages that repeatedly shared misinformation

Polls show that around seven-in-ten (69%) American adults use Facebook, and over half (52%) say they get news from the social media platform. This means Facebook’s algorithm has significant control over the type of information ecosystem saturating American voters. Ensuring that the algorithm was not polluting this ecosystem with a flood of misinformation should have been a key priority for Facebook.

The platform could have more consistently followed advice to limit the reach of misinformation by ensuring that such content and repeat sharers of “fake news” are downgraded in users’ News Feeds. By tweaking its algorithm transparently and engaging with civil society to find effective solutions, Facebook could have ensured it didn’t amplify falsehoods and conspiracy theories, and those who repeatedly peddled them, and instead detoxed its algorithm to ensure authoritative sources had a better chance at showing up in users’ News Feeds.

Yet after tracking top-performing pages that repeatedly shared misinformation on Facebook [3], alongside the top 100 media pages [4], our research reveals that Facebook failed to act for months before the election. This was in spite of clear warnings from organisations, including Avaaz, that there was a real danger this could become a misinformation election.

Such a divisive and politically-charged election was almost guaranteed to be the perfect environment for misinformation-sharing pages because their provocative style of content is popular and Facebook’s algorithm learns to privilege and boost such content in users’ News Feeds.

In August, our investigations team began reporting to the platform, and sharing with fact-checkers, key misinformation content and inauthentic behavior we had identified that had avoided detection by the platform - highlighting to Facebook how their systems were failing.

It had become clear that Facebook would not act with the urgency required to protect users against misinformation by proactively detoxing its algorithm [5]. Our strategic approach was to fill the gaps in Facebook’s moderation policies and its misinformation detection methods. By assisting fact-checkers in finding and flagging more misinformation content from prominent misinformers, we aimed to help ensure that Facebook’s “downranking” policy for repeat misinformers would kick in early enough to protect voters.

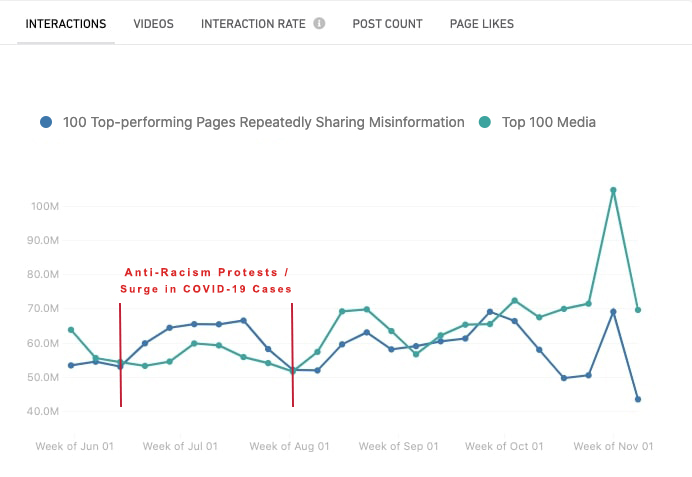

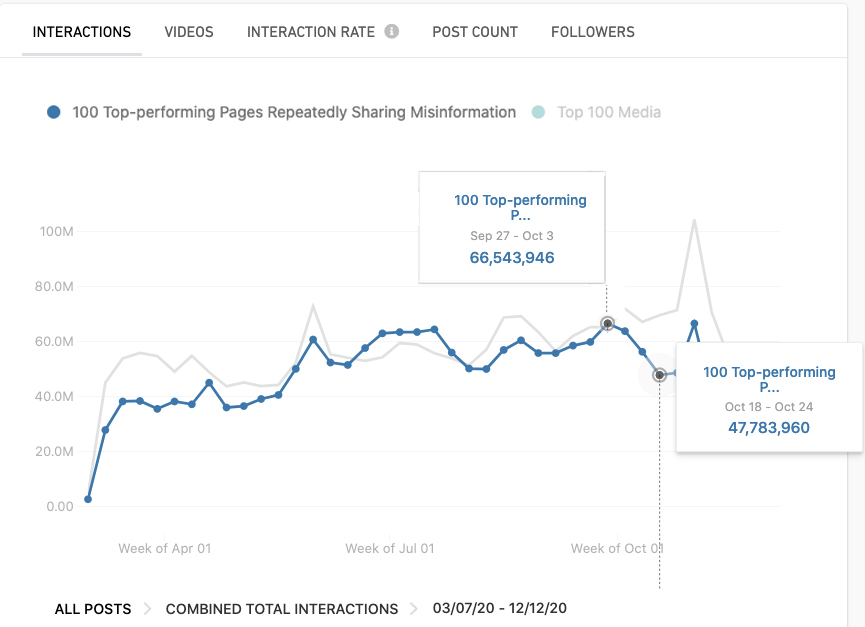

According to our findings, it wasn’t until October 2020, after mounting evidence that the election was being pummeled with misinformation, and with an expanded effort across civil society for finding and reporting on misinformation, that Facebook’s systems began to kick in and the platform took action to reduce the reach of repeat sharers of misinformation, seemingly adding friction on content from the pages we had identified.

Why we consider total estimated views from the top-performing pages that repeatedly shared misinformation

The Facebook pages and groups identified are not solely spreading verifiable misinformation, but also other types of content, including general non-misinformation content.

There are two main reasons that we’re focusing on the total estimated views:

- Misinformation is spread by an ecosystem of actors. Factually inaccurate or misleading content doesn’t spread in isolation: it’s often shared by actors who are spreading other types of content, in a bid to build followers and help make misinformation go viral. A recent study published in Nature showed how clusters of anti-vaccination pages managed to become highly entangled with undecided users and share a broader set of narratives to draw users into their information ecosystem. Similarly, research by Avaaz conducted ahead of the European Union elections in 2019 observed that many Facebook pages will share generic content to build followers, while sharing misinformation content sporadically to those users. Hence, understanding the estimated views garnered by these top-performing pages that repeatedly share misinformation during a specific period can provide a preview of their general reach across the platform.

- One click away from misinformation content. When people interact with such websites and pages, those who might have been drawn in by non-misinformation content can end up being exposed to misinformation, either with a piece of false content being pushed into their News Feed or through misinformation content that’s highlighted on the page they may click on. Our methodology of measuring views is highly dependent on interactions (likes, comments, shares), but many users who visit these pages and groups may see the misinformation content without engaging with it. Furthermore, many of these pages and groups share false and misleading content at a scale that cannot be quickly detected and fact-checked. By the time a fact-check of an article with false information is posted, a lot of the damage is already done, and unless the fact-check is retroactive, the correction may go unseen by millions of affected viewers.

Consequently, understanding the impact these pages and groups can have on America’s information ecosystem is more accurately reflected by the overall amount of views they are capable of generating. Moreover, this measurement allows us to better compare the overall views of these pages to the views of authoritative news sources.
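The estimated-view figures quoted for individual posts later in this section appear to follow a fixed multiplier on interactions. The sketch below is only a consistency check under that assumption: the factor of 19.74 views per interaction, and the truncation to a whole number, are inferred from three figures quoted in this report (1,157 interactions and 22,839 views; 3,121 and 61,608; 306,000 and 6,040,440), not taken from a stated formula, and the function name is illustrative.

```python
# Assumption: estimated views = interactions x 19.74, truncated to a whole number.
# The factor is inferred from figures quoted in this report, not from a published formula.
VIEWS_PER_100_INTERACTIONS = 1974  # 19.74 expressed in hundredths to avoid float rounding


def estimated_views(interactions: int) -> int:
    """Return the view estimate implied by the inferred multiplier."""
    return interactions * VIEWS_PER_100_INTERACTIONS // 100


# (interactions, estimated views) pairs as quoted in this section
quoted_pairs = [
    (1_157, 22_839),
    (3_121, 61_608),
    (306_000, 6_040_440),
]

for interactions, quoted in quoted_pairs:
    # Print the recomputed estimate next to the quoted one for comparison
    print(interactions, estimated_views(interactions), quoted)
```

The check applies only to figures described as estimated views; the video view counts quoted for some posts are reported separately and do not follow this ratio.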

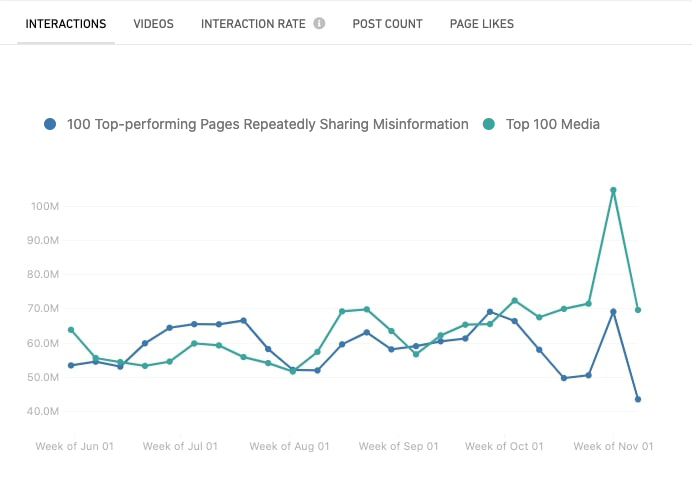

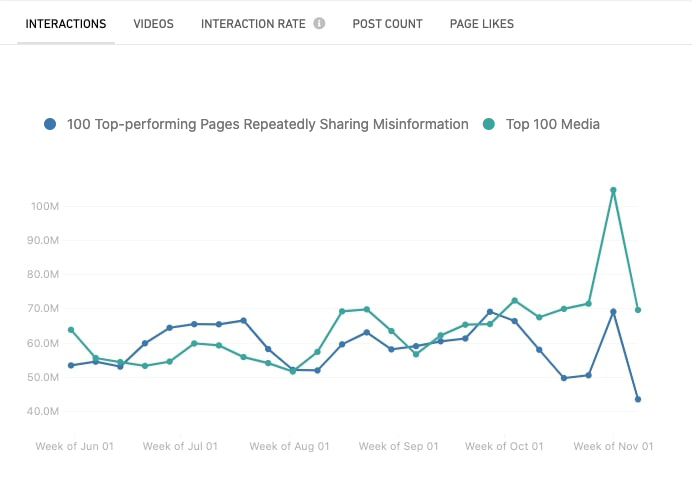

Specifically, our analysis shows how, from October 10, 2020, there was a sudden decline in interactions on some of the most prominent pages that had repeatedly shared misinformation. This analysis was supported by posts made by many of the pages we were monitoring and reporting, which started to announce that they were being ‘suppressed’ or ‘throttled’ (see below for examples). In contrast, the top 100 media outlet pages on Facebook remained at a steady pace [6].

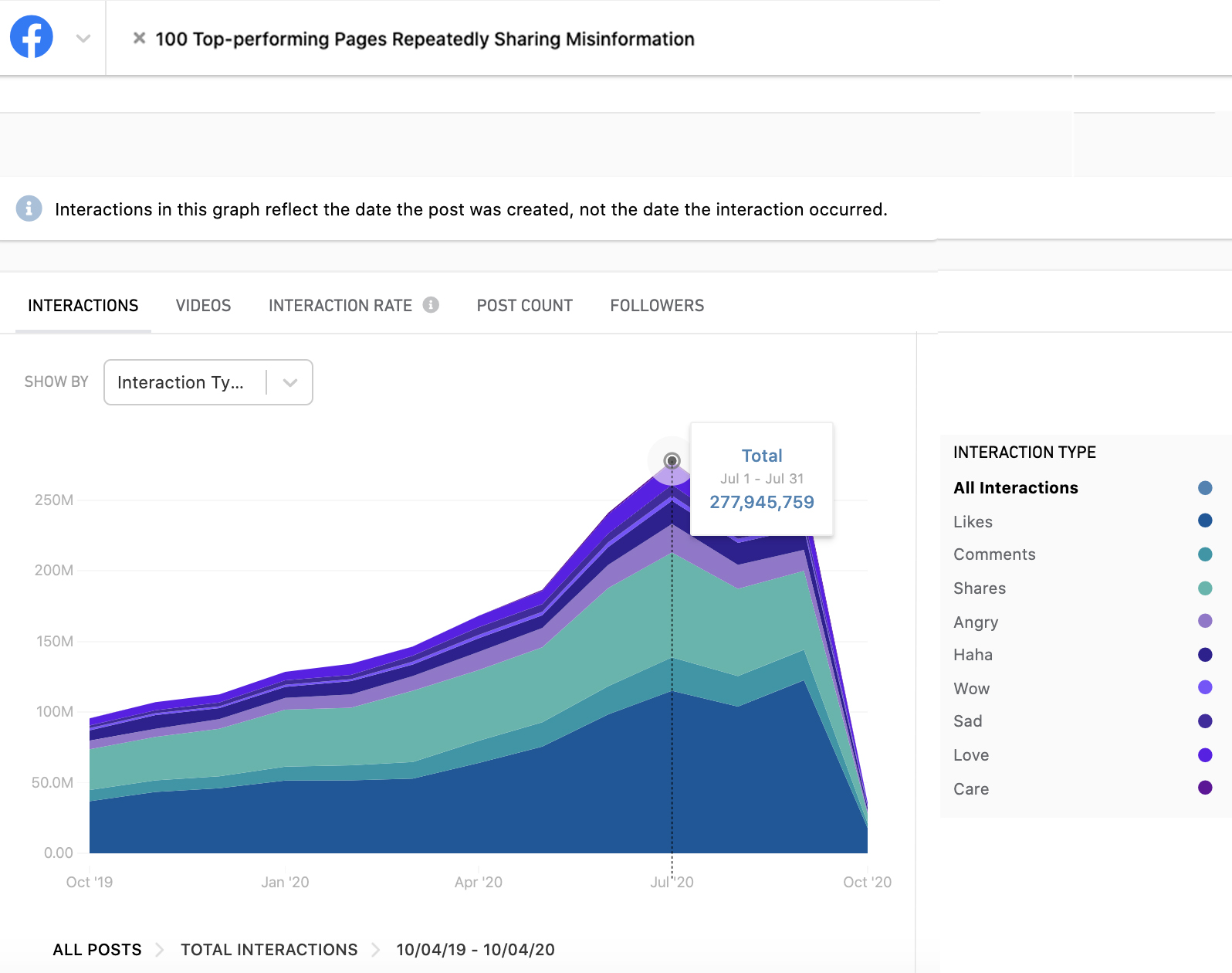

Figure 1: June, 2020 to November, 2020 (Decline in monthly interactions of most prominent pages that had shared misinformation)

Graph generated using CrowdTangle [7]. The graph shows the comparative interactions of the top-performing pages that repeatedly shared misinformation and the Top 100 Media pages in our study over a period of five and a half months. The clear decline in engagement takes effect in the week of October 10. The high peak in the week of November 1 to November 8 is an outlier of interactions on a few posts confirming the winner of the elections on November 7.


Post by Hard Truth, one of the pages in our study, in which they report a one million decrease in post reach.


(Screenshot taken on Nov 3, 2020, stats provided by CrowdTangle [8] at the bottom of the post are applicable to this time period)

Post by EnVolve, one of the pages in our study, in which they report a decrease in post reach. In addition, underneath the post the statistics provided by CrowdTangle show that it received 88.1% fewer interactions (likes, comments and shares) than the page’s average interaction rate. (Screenshot taken on Nov 3, 2020, stats provided by CrowdTangle [9] at the bottom of the post are applicable to this time period)


What the experts say about downranking untrusted sources

A number of studies and analyses have shown that platforms can utilize different tactics to fix their algorithms by downranking untrusted sources instead of amplifying them, decreasing the number of users that see content from repeat misinforming pages and groups in their News Feeds.

Facebook has also spoken about “Break-Glass tools” which the platform can deploy to “effectively throw a blanket over a lot of content”. However, the platform is not transparent about how these tools work, their impact, and why, if they can limit the spread of misinformation, they have not been adopted as consistent policy.

As a recent commentary from Harvard’s Misinformation Review highlights, more transparency and data from social media platforms is needed to better understand and thus design the most optimal algorithmic downranking policies. However, here are some recommended solutions:

- Avaaz recommends downgrading based on content fact-checked by independent fact-checkers, where pages, groups and websites flagged frequently for misinformation are down-ranked in the algorithm. This is the process Facebook partially adopts, but with minimal transparency.

- A recent study at MIT shows that downgrading based on users’ collective wisdom, where trustworthiness is crowdsourced from users, would also be effective. Twitter has announced it will pilot a product to test this.

- Downgrading based on a set of trustworthiness standards, such as those identified by NewsGuard. Google, for example, now uses thousands of human raters, clear guidelines, and a rating system that helps inform its algorithm’s search results.

Based on our observations of the impact of downranking of pages we monitored, and Facebook’s own claims about the impact of downranking content, action to detox Facebook’s algorithm can reduce the reach of misinformation sharing pages by 80%.

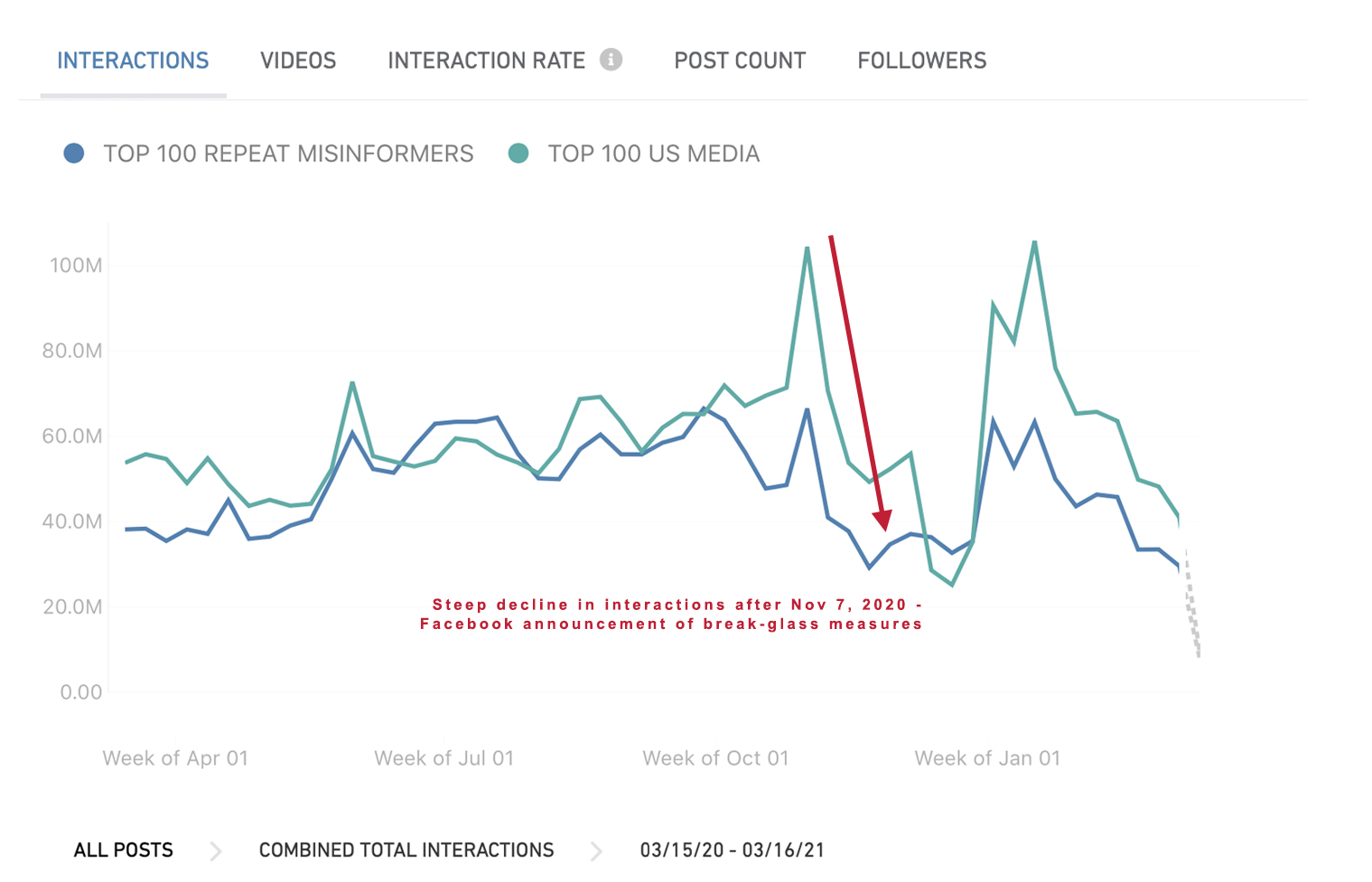

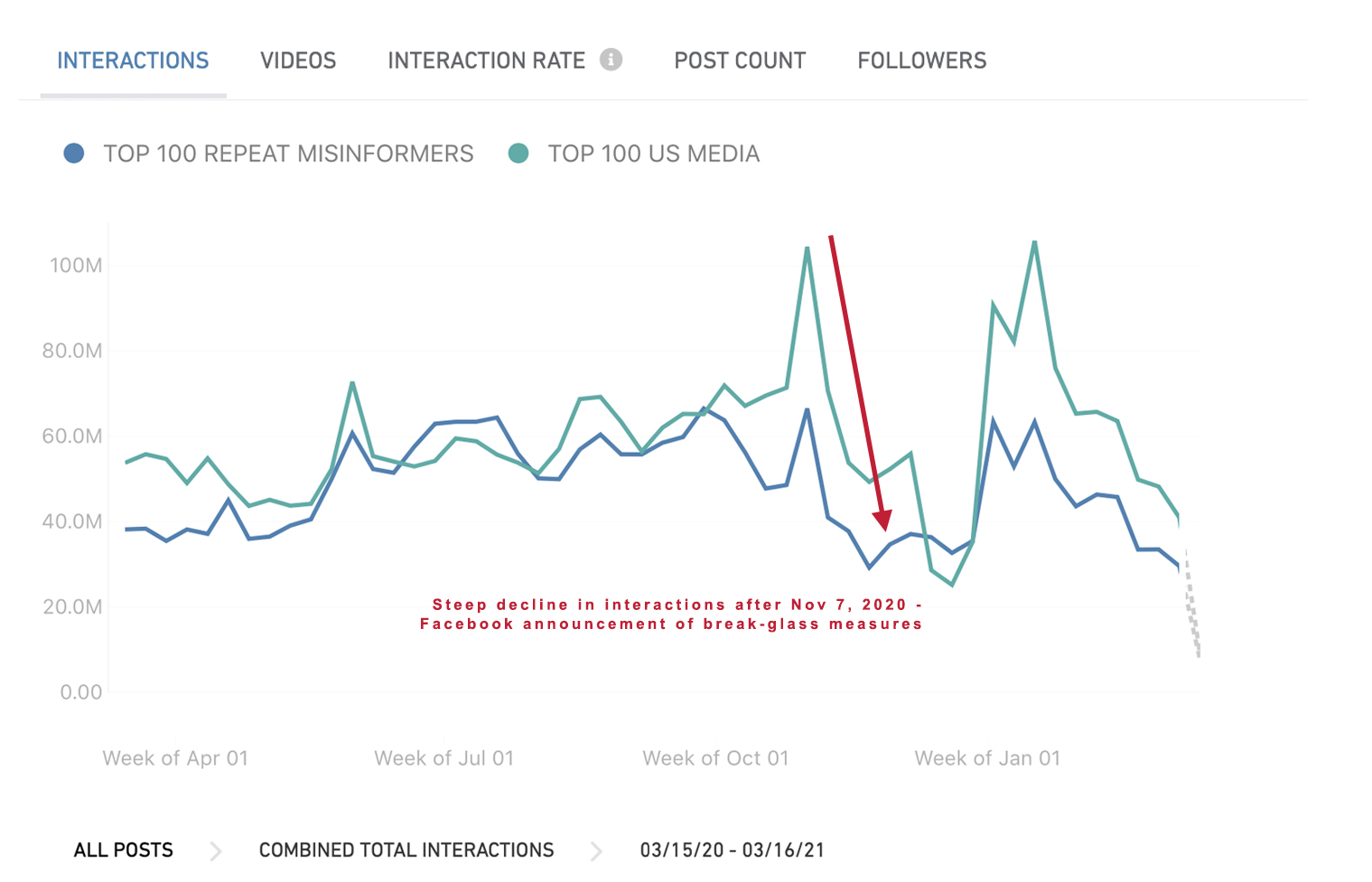

It is also important to note the further steep decline for both the top-performing pages in our study that repeatedly shared misinformation, and authoritative media outlets, following November 7, 2020, after the media networks made the call that President Biden had won the elections.

This steep decline likely reflects the introduction of what Facebook itself termed “Break Glass” measures, which further tweaked the algorithm to increase “News Ecosystem Quality”, thus reducing the reach of repeat misinformers. Moreover, a number of misinformation-sharing pages, which Avaaz discovered in a separate investigation were connected to Steve Bannon, were removed from the platform mid-November after we reported to Facebook that they were using inauthentic behavior to amplify “Stop the Steal” content. This removal likely also played a role in the steep decrease after Election Day, highlighted in figure 2 below.

Figure 2: March 15, 2020 - March 16, 2021

Graph generated using CrowdTangle [10] (Avaaz edits in red)


The data we present above and the potential consequences for the quality of Americans’ information ecosystem in terms of the reach and saturation of content from these sources, highlight how the platform always had the power to tweak its algorithm and policies to ensure that misinformation is not amplified. Only Facebook can confirm the exact details of the changes it made to its algorithm and the impact those actions had beyond the list of actors Avaaz monitored.

Importantly, these findings also suggest that Facebook made a choice not to proactively and effectively detox its algorithm at key moments in the election cycle, allowing repeat misinformer pages the opportunity to rack up views on their content for months, saturating American voters with misinformation up until the very final weeks of the election.

According to our analysis, had Facebook tackled misinformation more aggressively when the pandemic first hit in March 2020 (rather than waiting until October), the platform could have stopped 10.1 billion estimated views of content on the top-performing pages that repeatedly shared misinformation ahead of Election Day.

Our analysis also showed that this would have ensured American voters saw more authoritative and high quality content.

Compare this number to the actions the company said it took to protect the election (below). In this light, the platform’s positive reporting on its impact appears to be biased, particularly since the platform does not highlight what it could have done, does not highlight how many people saw the misinformation before it acted on it, and does not provide any measure of the harms caused by a lack of early action. The lack of transparency, and the findings in this report, suggest that the platform did not do enough to protect American voters.

Infographic from Facebook to highlight the measures they were taking to prepare for election day.


It is important to note that the pages we identified spanned the political spectrum, ranging from far-right pages to libertarian and far-left pages. Of the 100 pages, 60 leaned right, 32 leaned left, and eight had no clear political affiliation. Digging further into the data, we were able to analyse the breakdown of the misinformation posts that were shared by these pages and we found that 61% were from right-leaning pages. This misinformation content from right-leaning pages also drew the majority of engagement with the misinformation we analysed, accounting for 62% of the total interactions on that type of content. What is important to emphasize is that the sharing of misinformation content targets all sides of the political spectrum, influencing polarization on both sides of the aisle, and that Facebook is failing to protect Americans from prominent actors that repeatedly share this type of content.

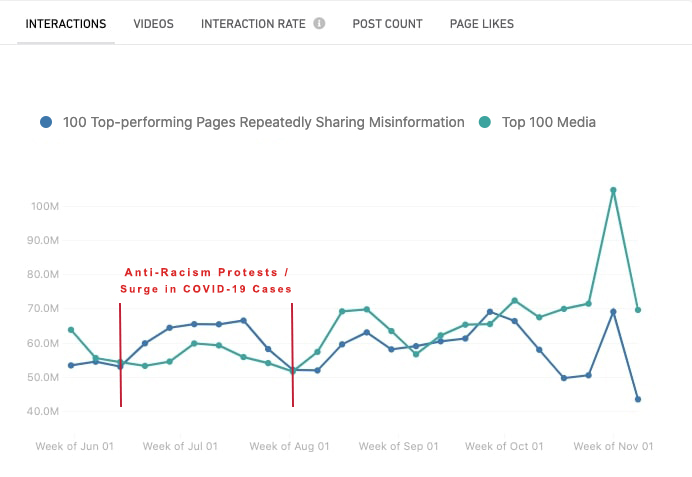

How the top-performing pages that repeatedly shared misinformation rivaled authoritative sources in the amount of interactions they received

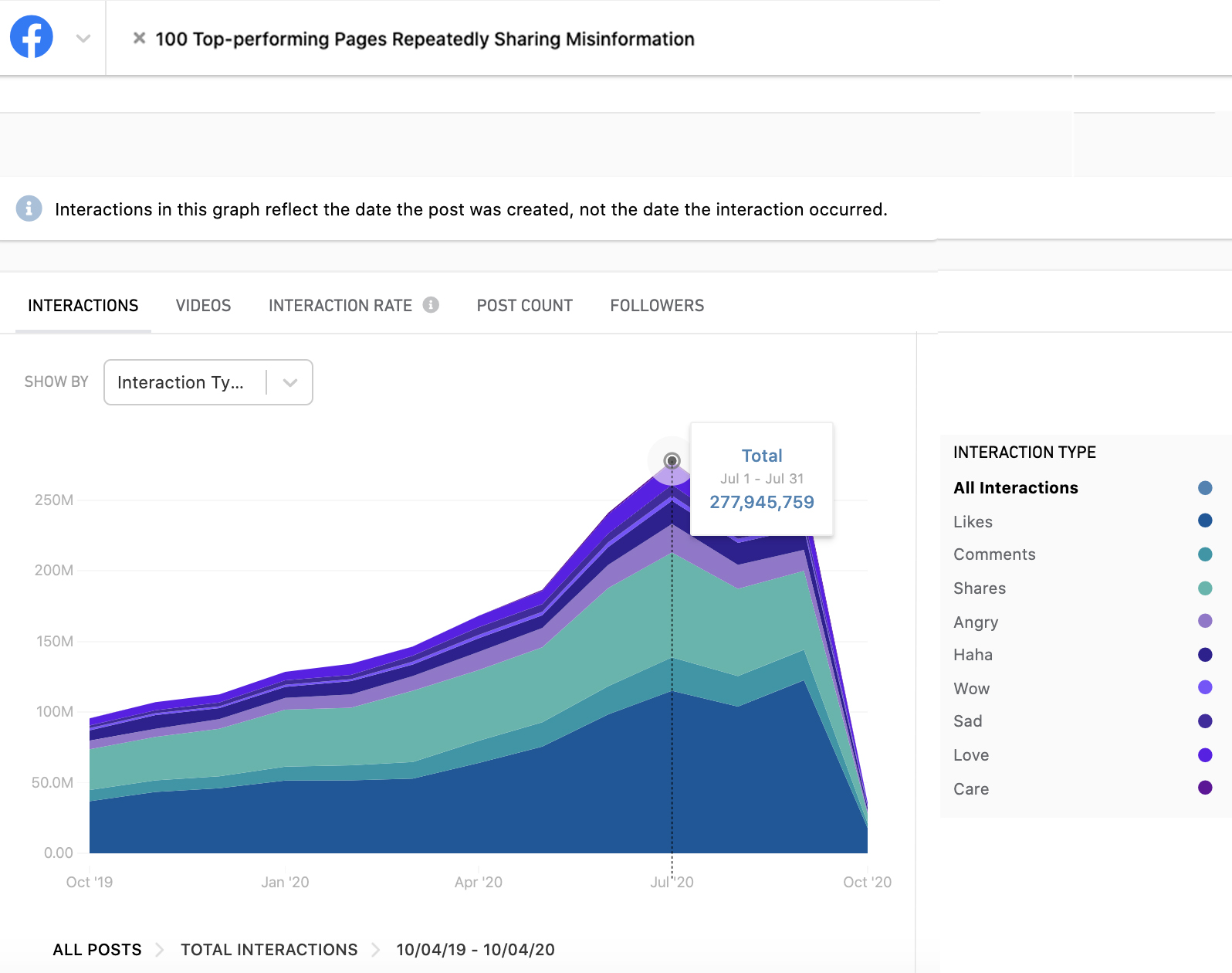

Facebook’s refusal to adopt stronger ‘Detox the Algorithm’ tools and processes did not just allow these repeat misinformers to spread their content; it actually allowed their popularity to skyrocket. According to our research, the top-performers collectively almost tripled their monthly interactions - from 97 million interactions in October 2019 to 277.9 million interactions in July 2020, catching up with the top 100 US media pages on Facebook. At the exact moment when American voters needed authoritative information, Facebook’s algorithm was boosting pages repeatedly sharing misinformation.
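As a quick check on the “almost tripled” description, the growth can be worked out from the two monthly totals quoted above. This is a minimal sketch; the helper names are illustrative and the only inputs are the 97 million and 277.9 million figures from the text.

```python
def growth_factor(before: float, after: float) -> float:
    """How many times larger `after` is than `before`."""
    return after / before


def percent_increase(before: float, after: float) -> float:
    """Relative increase from `before` to `after`, in percent."""
    return (after - before) / before * 100


# Monthly interactions in millions, as quoted in the text
october_2019 = 97.0
july_2020 = 277.9

print(f"growth factor: {growth_factor(october_2019, july_2020):.2f}")
print(f"increase: {percent_increase(october_2019, july_2020):.1f}%")
```

The result is a factor of about 2.9, or an increase of roughly 186%, which is consistent with “almost tripled”.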

Figure 3: Oct 4, 2019 - Oct 4, 2020 (monthly interactions on the top-performing pages that repeatedly shared misinformation in our study)

Graph generated using CrowdTangle [11]. The graph shows total interactions for the 100 pages; each color represents a different type of reaction (see color code). Interactions peaked in July 2020 at almost 278 million interactions.


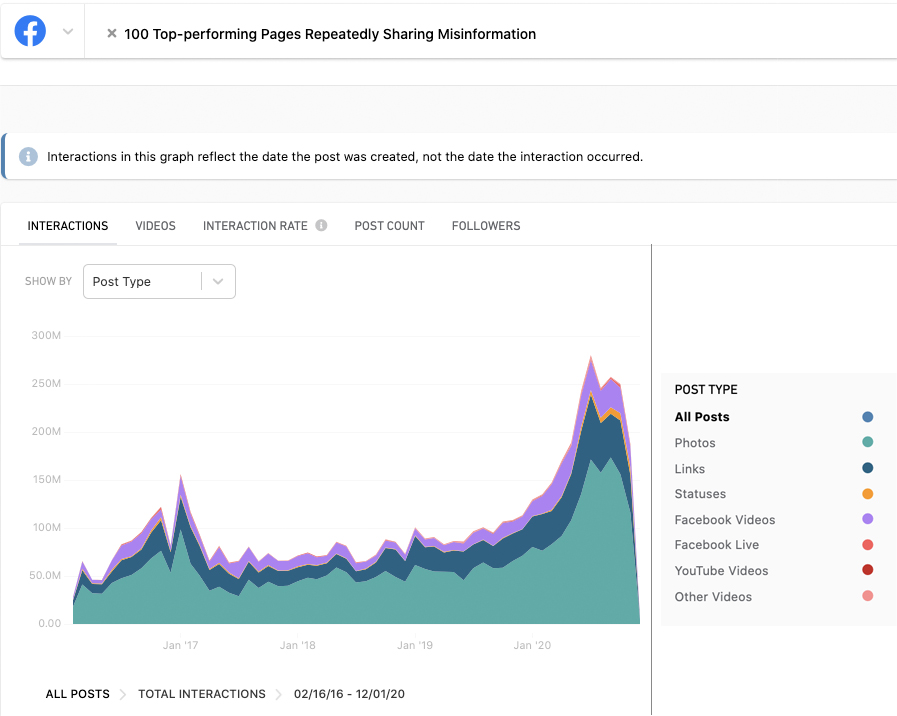

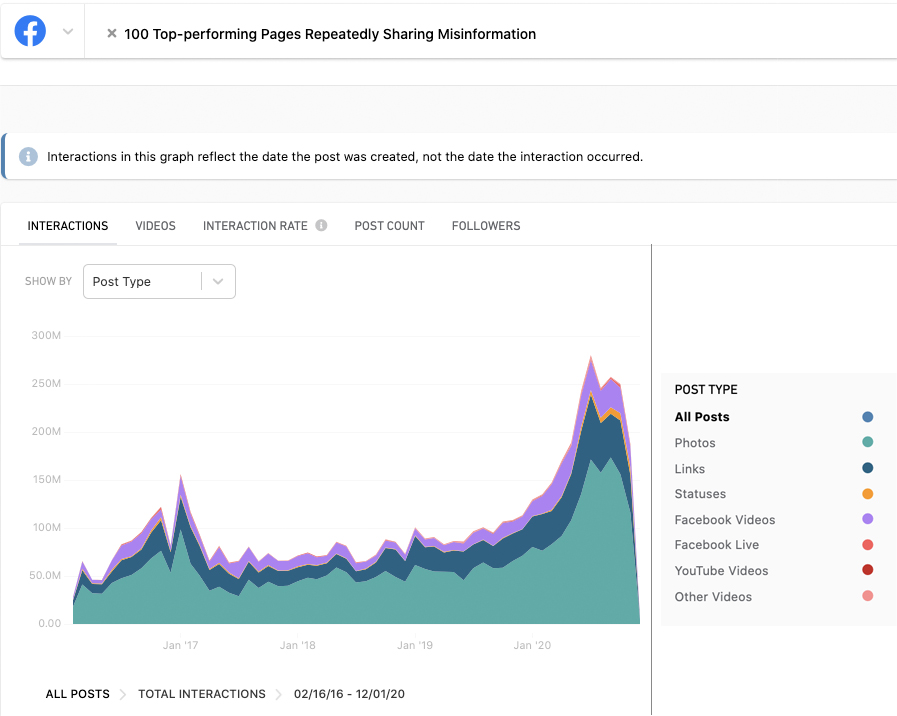

Figure 4: Feb 16 2016 - Dec 1 2020 (monthly interactions)

Graph generated using CrowdTangle [12]. This graph shows total interactions on content posted by the top-performing pages that repeatedly shared misinformation in our study over a period of nearly five years. Each color represents a different type of post and is sized by the number of interactions it received. Here we can see that photos received the most interactions. We can also see clear peaks ahead of November 2016 and again before November 2020.


Figure 5: June, 2020 to November, 2020 (Monthly interactions)


Graph generated using CrowdTangle [13] (Avaaz edits in red). The graph shows the comparative interaction rate of the top-performing pages that repeatedly shared misinformation and the Top 100 Media pages in our study over a period of just over five months. The period between the end of May 2020 and August 1, 2020 saw significant anti-racism protests after the murder of George Floyd on May 25, 2020, as well as a surge in COVID-19 cases.

In fact, in terms of the number of interactions garnered in July/August 2020, during the anti-racism protests, the top-performing pages that repeatedly shared misinformation actually surpassed the top US media pages in terms of engagement (reactions, comments and shares on posts). This is despite the top 100 US media pages having about four times as many followers.

Our analysis of these pages shows that Facebook allowed them to pump out misinformation on a wide range of issues, with misinformation stories often receiving more interactions than some stories from legitimate news sources. This finding brings to the forefront the question of whether or not Facebook’s algorithm amplifies misinformation content and the pages spreading misinformation, something only an independent audit of Facebook’s algorithm and data can fully confirm. The scale at which these pages appear to have gained ground on authoritative news pages on the platform, despite the platform’s declared efforts to moderate and downgrade misinformation, suggests that Facebook’s moderation tactics are not keeping up with the amplification the platform provided to misinformation content and those spreading it.

On the role of Facebook’s algorithm in amplifying election misinformation

Facebook’s algorithm decides the content users see in their News Feeds based on a broad range of variables and calculations, such as the amount of reactions and comments a post receives, whether the user has interacted with the content of the group and page posting, and a set of other signals.

This placement in the News Feed, which Facebook refers to as “ranking”, is determined by the algorithm, which in turn learns to prioritize content based on a number of variables such as user engagement, and can provide significant amplification to a piece of content that is provocative. As Mark Zuckerberg has highlighted:

“One of the biggest issues social networks face is that, when left unchecked, people will engage disproportionately with more sensationalist and provocative content (...) At scale it can undermine the quality of public discourse and lead to polarization.”

A heated election in a politically-divided country was always going to produce sensationalist and provocative content. That type of content receives significant engagement. This engagement will, in turn, be interpreted by the algorithm as a reason to further boost this content in the News Feed, creating a vicious cycle in which the algorithm consistently and artificially gives political misinformation that has not yet been flagged, for example, an upper hand over authoritative election content within the information ecosystem it presents to Facebook users.

Facebook initially designed its algorithms largely based on what serves the platform’s business model, not based on what is optimal for civic engagement. Moreover, the staggering number of about 125 million fake accounts that Facebook admits are still active on the platform continue to skew the algorithm in ways that are not representative of real users.

Furthermore, the platform’s design creates a vicious cycle where the increased engagement with the political misinformation content generated by the algorithm will further increase the visibility of, and the number of users who follow, the pages and websites sharing the misinformation.

In 2018, after the Cambridge Analytica scandal and the disinformation crisis it faced in 2016, Facebook publicly declared that it had re-designed its ranking measurements for what it includes in the News Feed “so people have more opportunities to interact with the people they care about”. Mark Zuckerberg also revealed that the platform tried to moderate the algorithm by ensuring that “posts that are rated as false are demoted and lose on average 80% of their future views”.

These were welcome announcements and revelations, but our findings in a previous report on health misinformation, in August 2020, indicated that Facebook was still failing to prevent the amplification of misinformation and the actors spreading it. The report strongly suggested that Facebook’s algorithmic ranking process was potentially being weaponized by health misinformation actors coordinating at scale to reach millions of users, and/or that the algorithm remained biased towards the amplification of misinformation, as it was in 2018. The findings also suggested that Facebook’s moderation policies to counter this problem were still not being applied proactively and effectively enough. In that report’s recommendation section, we highlight how Facebook can begin detoxing its algorithm to prevent a disproportionate amplification of health misinformation. This report suggests Facebook did not take sufficient action.

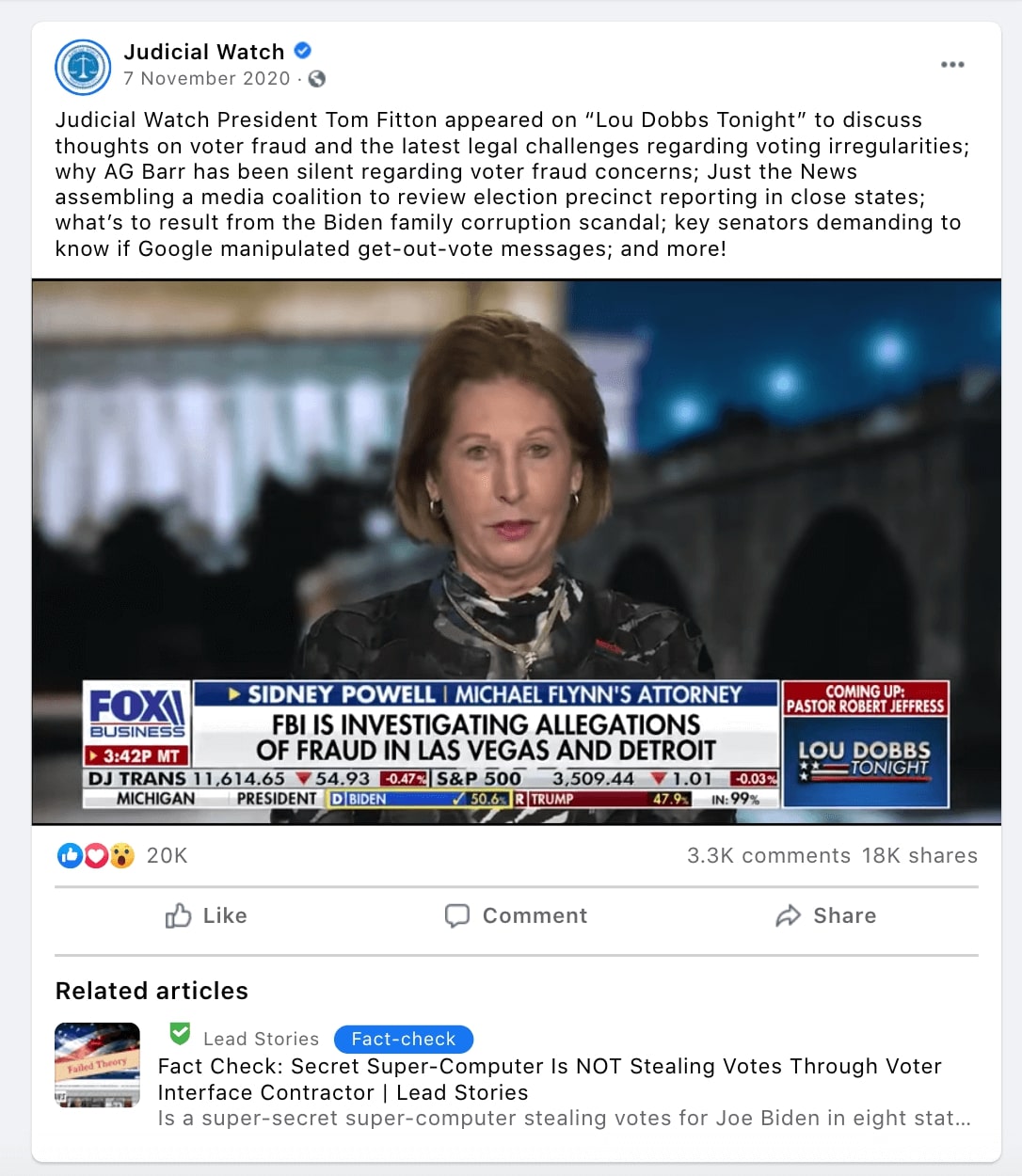

Examples of misinformation spread by some of the top-performing pages

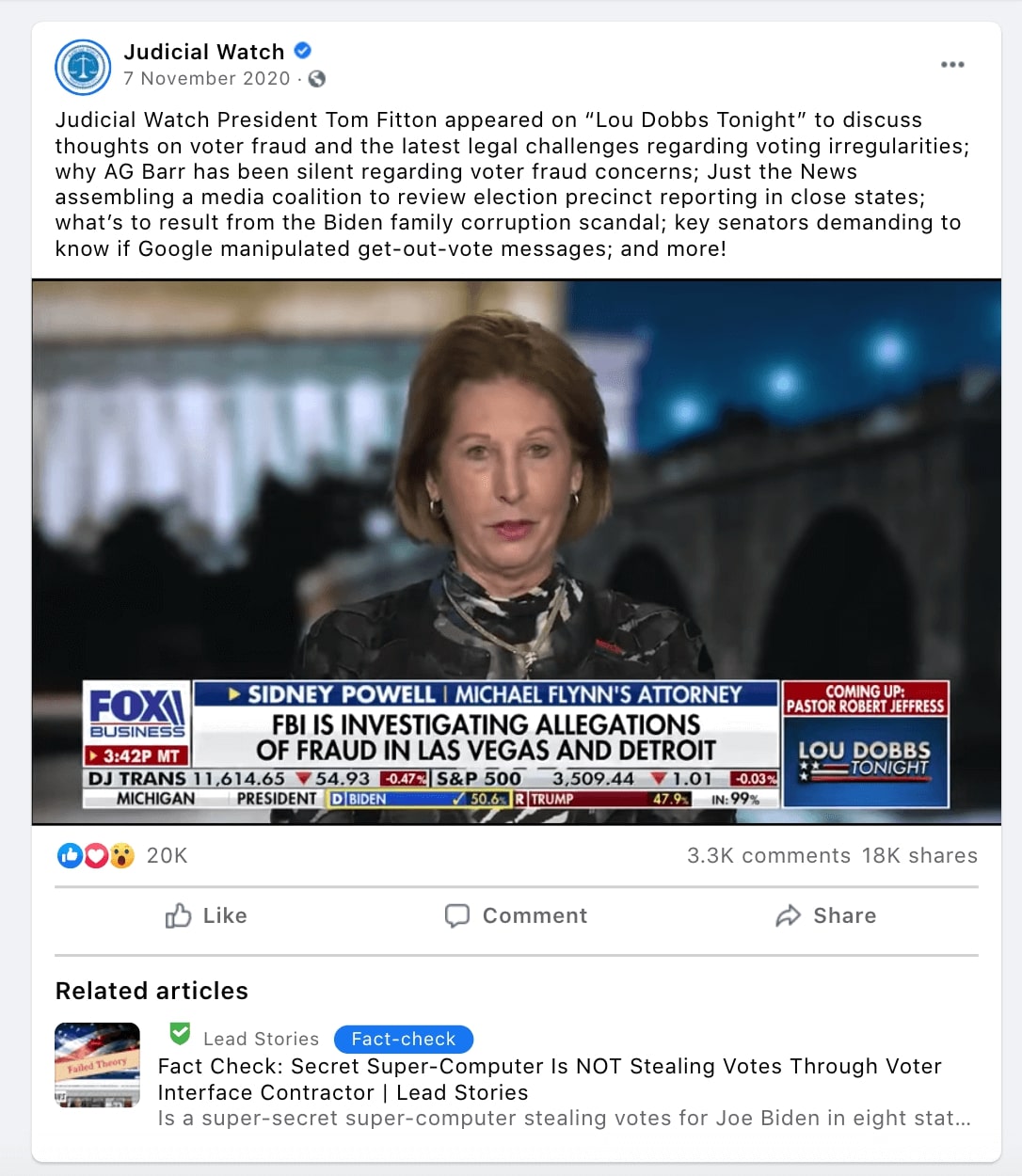

Claims of election fraud, including this video that contains the false claim that votes were changed for Joe Biden using the HAMMER program and a software called Scorecard [00.36]. The post received 41,300 interactions and 741,000 views, despite the voter fraud claim being debunked by Lead Stories.

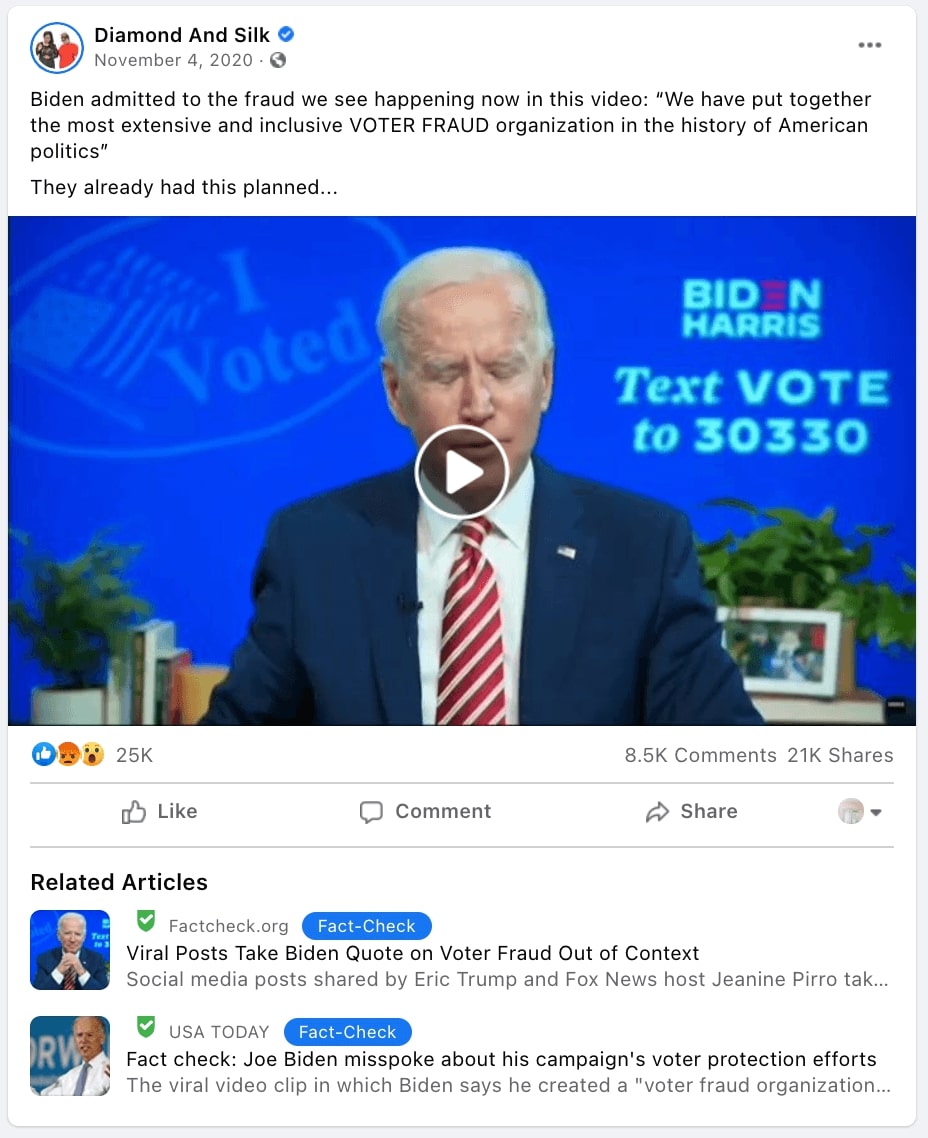

Claims that the Democrats were planning to steal the election, including this video purportedly showing Biden announcing that he had created the largest voter fraud organization in history. This video was debunked by Lead Stories, but despite this, this post alone racked up almost 54,500 interactions. Furthermore, Avaaz analysis using CrowdTangle [14], conducted in addition to the research used to identify the top performing pages, showed that the video was shared by a wide range of actors outside of the pages in this study. Across Facebook, it garnered 197,000 interactions and over one million views in the week before election day. The video was reported to Facebook and fact-checks were applied, but only after hundreds of thousands had watched it.

Candidate-focused misinformation, including the claim that Joe Biden stumbled while answering a question about court-packing and had his staff escort the interviewers out (included in the following post, which plucks one moment of a real interview out of context, receiving around 43,800 interactions and 634,000 video views). PolitiFact rated the claims as false, clarifying that: “President Donald Trump’s campaign is using a snippet of video to show a moment when Joe Biden ostensibly stumbled when asked about court packing. The message is that Biden’s stumble was his staff’s cue to hustle reporters away. In reality, the full video shows that Biden gave a lengthy answer, and then Biden’s staff began moving members of the press to attend the next campaign event.”

The post published on October 27 remains unlabelled despite this precise post being fact-checked by one of Facebook’s fact-checking partners.

Claims that President Trump is the only President in US history to simultaneously hold records for the biggest stock market drop, the highest national debt, the most members of his team convicted of crimes, and the most pandemic-related infections globally. This claim, for example in this post, was fact-checked by Lead Stories, a Facebook fact-checking partner, which highlighted that it is not true: “The first two claims in this post are not false, but they are missing context. The second two claims are false.”

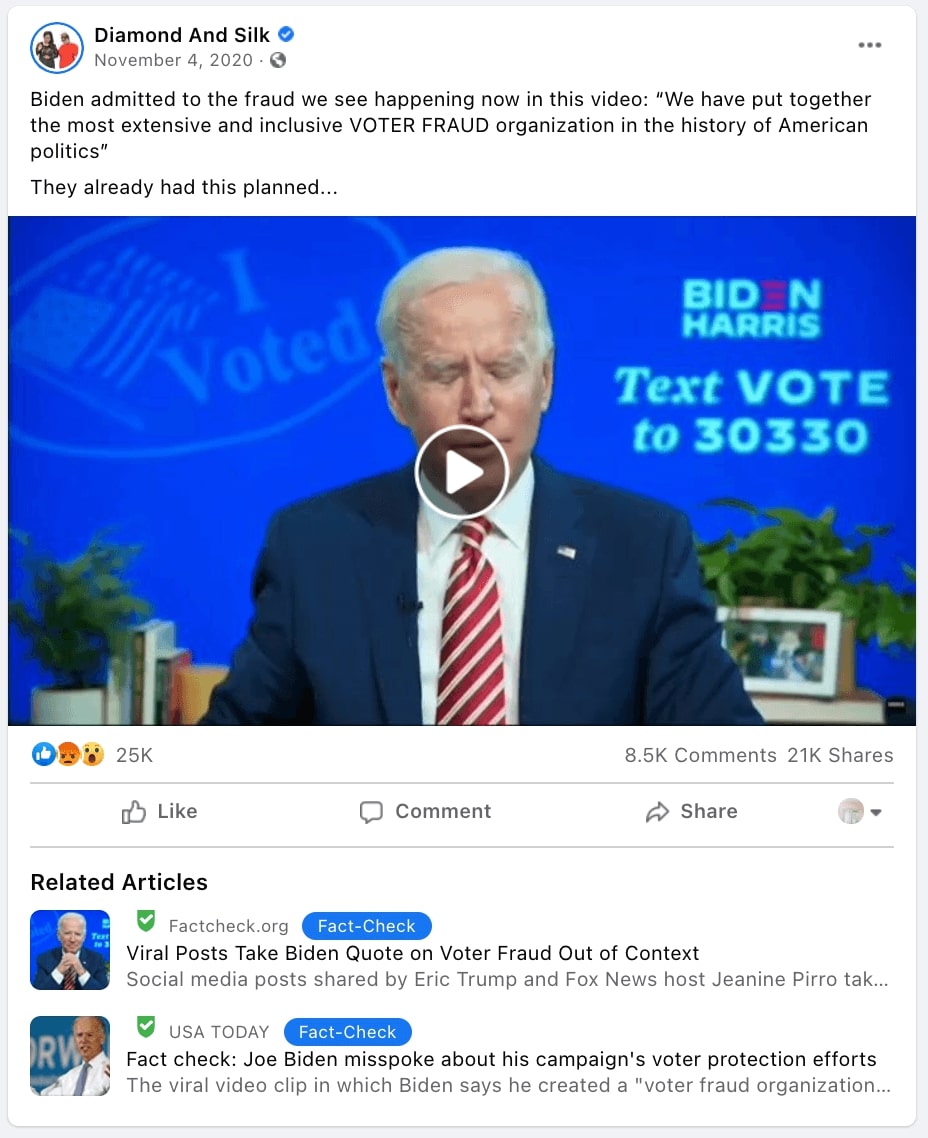

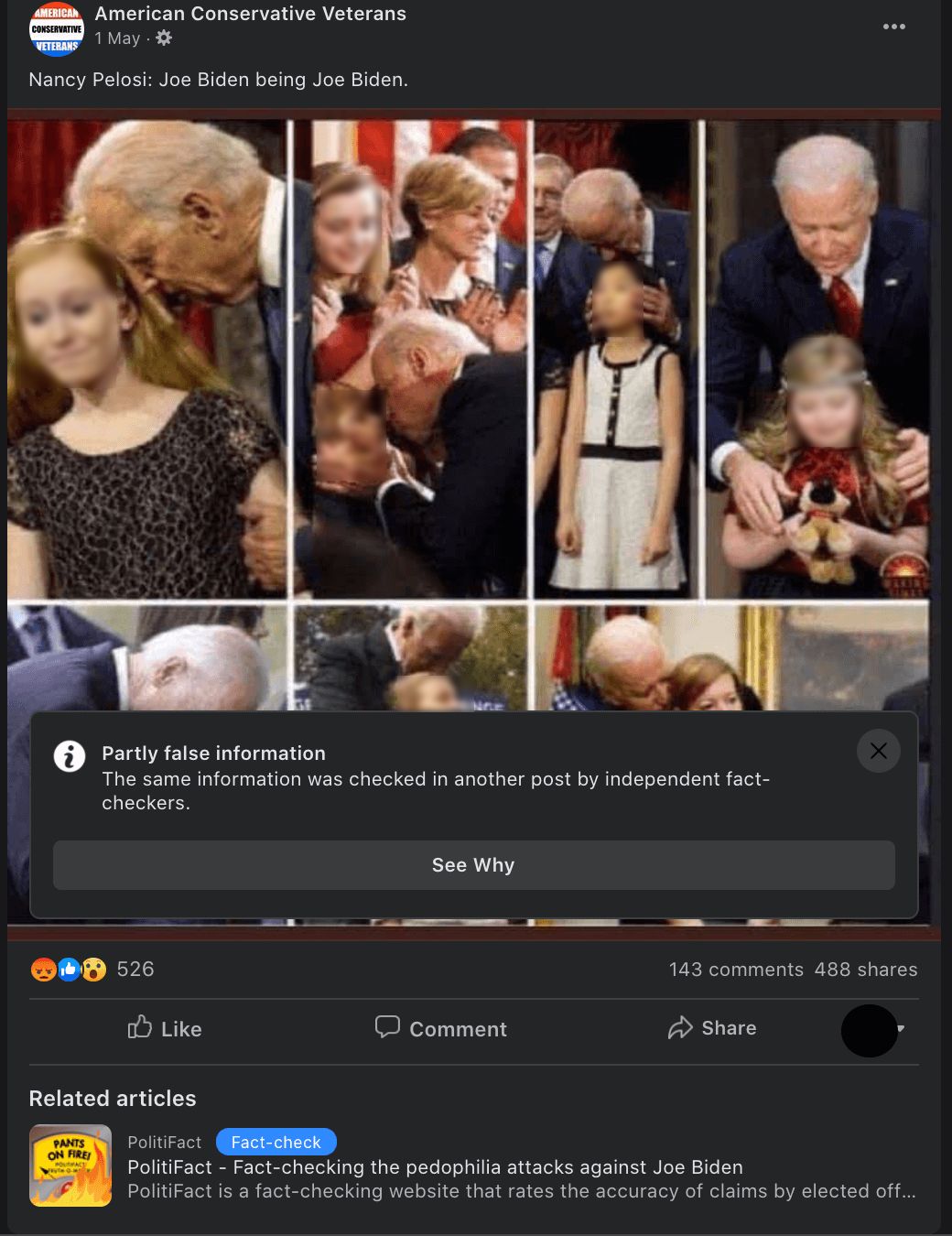

Candidate-focused misinformation, including the claim that Joe Biden is a pedophile (included in the following post, which received 1,157 total interactions and an estimated 22,839 views, despite the claim being debunked by PolitiFact). In addition, our research also identified multiple variations of this post outside of our pages study without a fact-checking label.

Misinformation about political violence, for example this post, originating on Twitter, which claims that Black Lives Matter (BLM) protesters blocked the entrance of a hospital emergency room to prevent police officers from being treated (the post received over 56,000 interactions despite the narrative being debunked by Lead Stories).

How Facebook’s last-minute action missed prominent misinformation sharing actors

Avaaz analysis shows that, even though Facebook eventually took action that decreased the interactions received by the top-performing pages that had repeatedly shared misinformation, this action does not appear to have affected all of them. According to our investigation, the platform completely ignored or missed some of the most high-profile offenders.

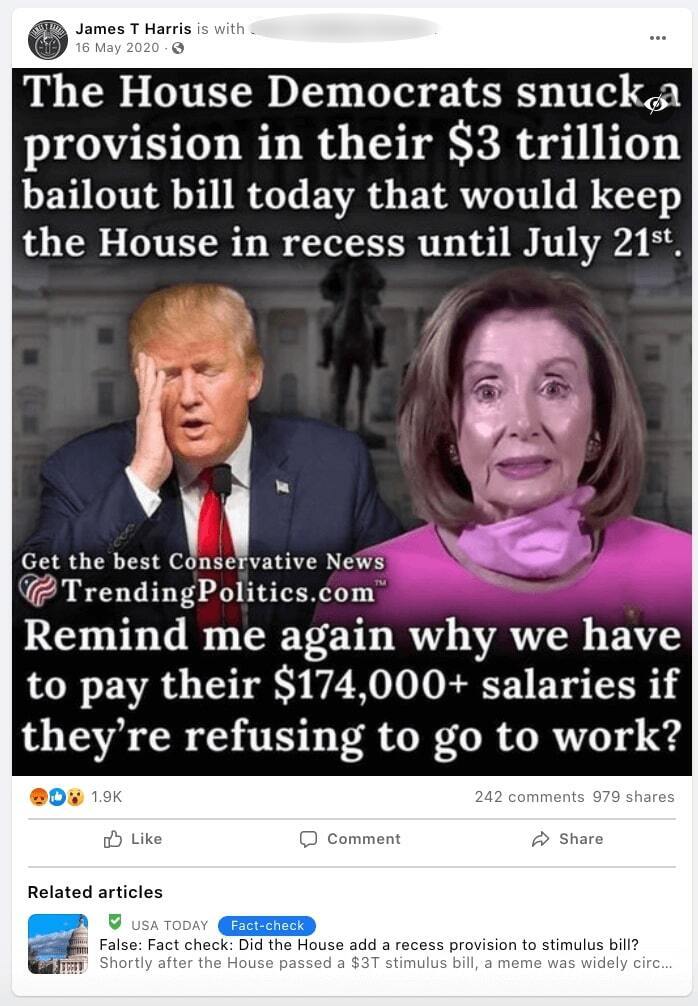

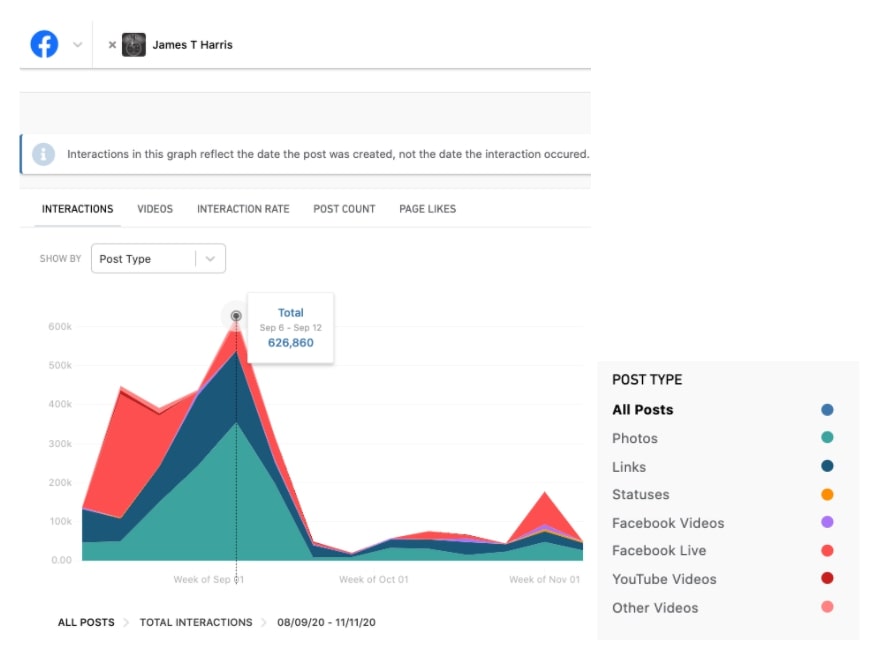

As an example of what Facebook was able to achieve when it tweaked its algorithm, consider a repeat misinformer Avaaz had detected in early September: James T. Harris. His page saw a significant downgrade in its reach from a peak of 626,860 interactions a week, to under 50,000 interactions a week after Facebook took action:

Figure 6: Aug 9, 2020 - Nov 11, 2020

Graph generated using CrowdTangle [15]. This graph shows the total interactions on content posted by the Facebook page of James T. Harris over roughly three months. The different colors show which types of post received the most engagement. Here you can see that photos received the most engagement.

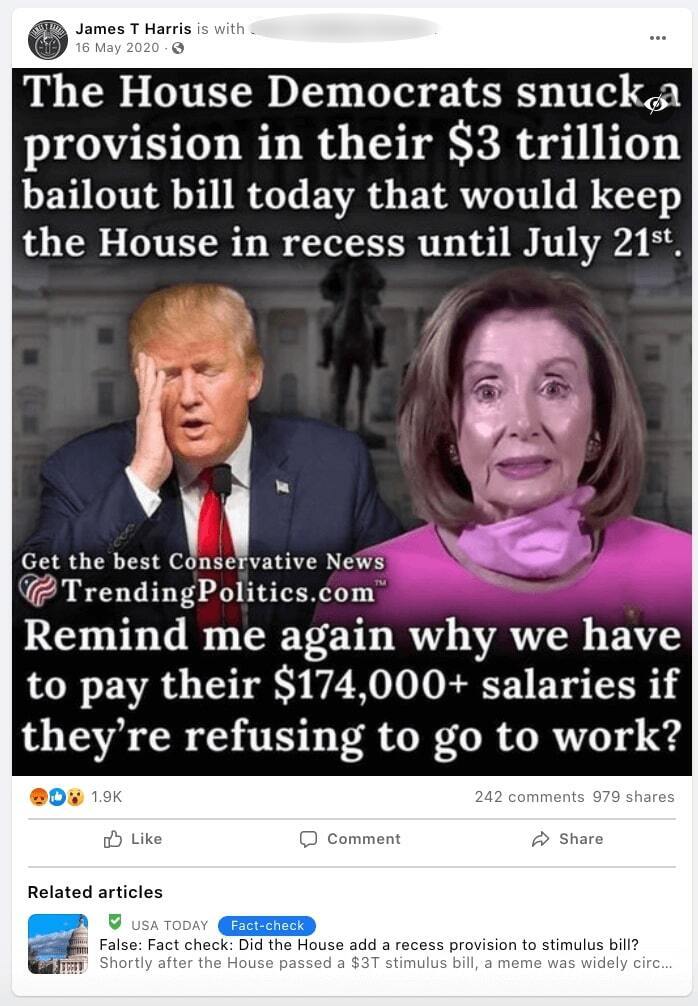

This is one example of a fact-checked misinformation post shared on James T. Harris’ page. The post, which contains the false claim that the House Democrats created a provision in the HEROES Act to keep the House in recess, garnered over 3,121 interactions and an estimated 61,608 views, despite being debunked by PolitiFact.

However, another page we found to have repeatedly shared misinformation, Dan Bongino, did not see a steep decline. In fact, it saw a rise in engagement:

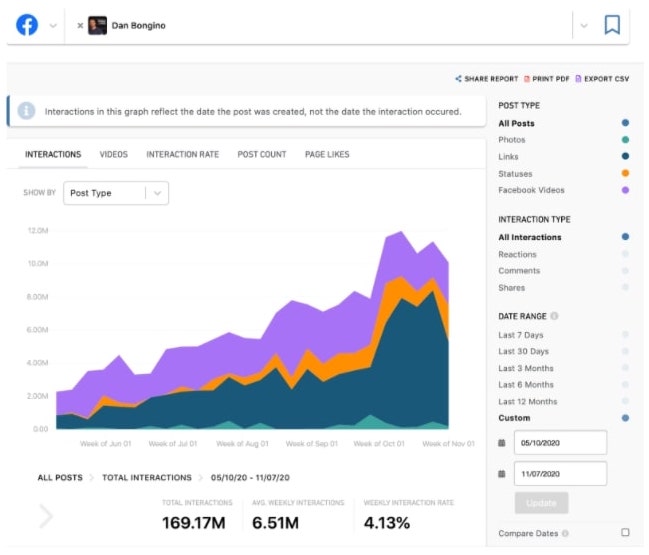

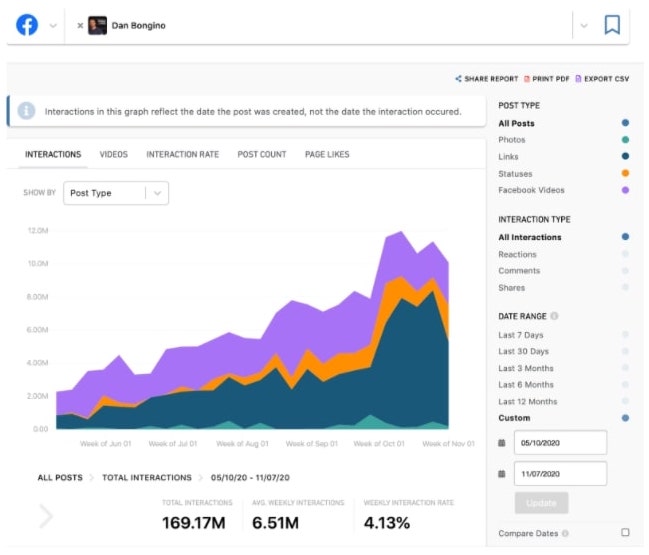

Figure 7: Jun 6, 2020 - July 11, 2020

Graph generated using CrowdTangle [16]. This graph shows the total interactions on content posted by the Facebook page of Dan Bongino over roughly three months. The different colors show which types of post received the most engagement. Here you can see that links received the most engagement.


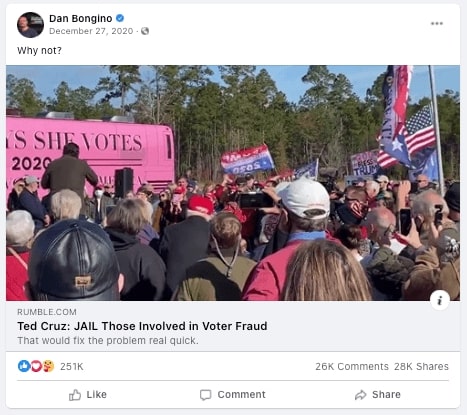

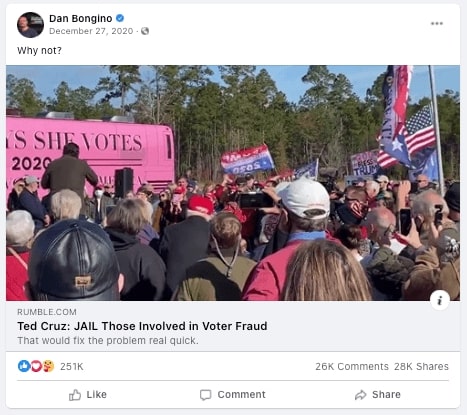

In fact, just 10 days before the storming of the Capitol, this page shared a speech by Senator Ted Cruz calling to defend the nation and prosecute and jail all those involved in voter fraud. This post alone garnered 306,000 interactions and an estimated 6,040,440 views.

It is unclear to us how Facebook made the decision to downgrade James T. Harris but not Dan Bongino, despite both being repeat sharers of misinformation.

Facebook failed to prevent violence-glorifying pages and groups - including those aligned with QAnon and militias - from gaining a following of nearly 32 million

17

Facebook’s platform was

reportedly

used to incite the

assault

on the Capitol, and was used to promote and drive turnout to the events that preceded the riots.

More worryingly, however, for many months prior to the insurrection, Facebook was home to a set of specific pages and groups that mobilized around the glorification of violence and dangerous conspiracy theories, which we believe helped legitimize the type of chaos that unfolded during the insurrection. Many of these pages and groups may not have repeatedly shared disinformation content or built up millions of followers, and so would not have appeared in our most prominent pages analysis above, but they can have a devastating effect on the followers they do attract.

Facebook’s action to moderate and/or remove many of these groups came too late, meaning they had already gained significant traction using the platform. Moreover, Facebook again prioritized piecemeal, whack-a-mole approaches - on individual pieces of content, for example - over structural changes to its recommendation algorithm and organizational priorities, and so did not apply the more powerful tools available to truly protect its users and democracy. For example, Facebook only stopped its recommendation system from sending users to political groups in

October of 2020,

even though Avaaz and

other experts

had highlighted that these recommendations were helping dangerous groups build followers.

The violence that put the lives of lawmakers, law enforcement officers, and others in harm’s way on Jan. 6 was primed not just by viral, unchecked voter fraud misinformation (as described above), but by an

echo chamber

of

extremism,

dangerous political

conspiracy theories,

and rhetoric whose violent overtones Facebook allowed to grow and thrive for years.

In the heat of a critical and divisive election cycle, it was imperative that the company take aggressive action on all activity that could stoke offline political violence. The company promised to

prohibit

the glorification of violence and took steps between July and September 2020 to expand its “Dangerous Individuals and Organizations”

policy

to address “militarized social movements” and “violence-inducing conspiracy networks”, including those with ties to

Boogaloo

and

QAnon

movements. However, a significant universe of groups and pages that Avaaz has analyzed since the summer of 2020, and that regularly violated these Facebook policies, was allowed to remain on the platform for far too long.

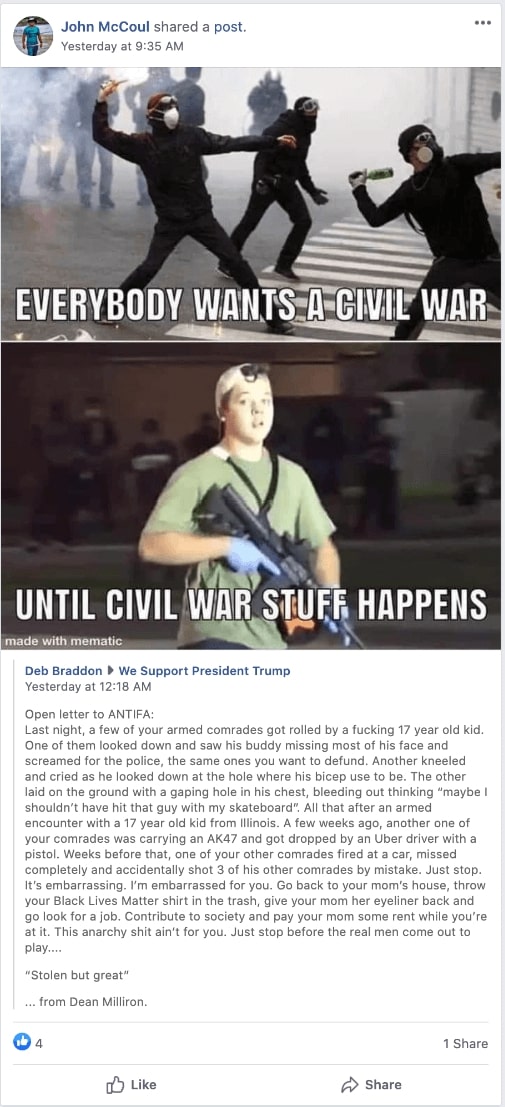

In addition to the “Stop the Steal” groups noted in the previous section, since June 2020,

Avaaz identified 267 pages and groups with a combined following of 32 million

that spread violence-glorifying content in the heat of the 2020 election.

Out of the 267 identified pages and groups, as of December 23, 2020,

183

(68.5%) had Boogaloo, QAnon, or militia-aligned names and shared content promoting movement imagery and conspiracy theories.

The remaining 31.5 percent were pages and groups with no identifiable alignment with violent, conspiracy-theory-driven movements, but were promoters of memes and other content that glorified political violence ahead of, during, and in the aftermath of high profile instances of such violence, including in

Kenosha,

Wisconsin, and throughout the country during anti-racism

protests.

18

As of February 24, 2021, despite clear violations of Facebook’s policies, out of the 267 identified pages and groups,

118 are still active, of which 58 are Boogaloo, QAnon, or militia-aligned, and 60 are non-movement aligned pages and groups

that spread violence-glorifying content. Although Facebook removed 56% of these pages and groups, its action shielded only a small fraction (16%) of their total followers from the violence-glorifying content they were spreading.

By February 24, 2021, Facebook had also removed 125 out of the 183 Boogaloo, QAnon, and militia-aligned pages and groups identified by Avaaz. However, similarly, this accounted for a mere 11% (914,194) of their total followers. The 58 pages and groups that remain active account for 89% (7.75m) of the combined following of these Boogaloo, QAnon, and militia-aligned pages and groups.
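The gap between the share of pages removed and the share of followers reached can be checked with simple arithmetic. The following is a minimal sketch, not Avaaz's own methodology: it uses only the figures quoted above, the variable and function names are hypothetical, and the 7.75m follower count is taken as the rounded value given in the text.

```python
# Figures quoted in the text, as of February 24, 2021.
TOTAL_PAGES_AND_GROUPS = 267
STILL_ACTIVE = 118

# Followers of the Boogaloo, QAnon, and militia-aligned pages and groups.
REMOVED_FOLLOWERS = 914_194
ACTIVE_FOLLOWERS = 7_750_000  # "7.75m" in the text; a rounded figure


def share(part, whole):
    """Return part as a percentage of whole."""
    return 100 * part / whole


# Share of the 267 identified pages and groups that Facebook removed.
removed_pages = TOTAL_PAGES_AND_GROUPS - STILL_ACTIVE
removed_pages_pct = share(removed_pages, TOTAL_PAGES_AND_GROUPS)

# Share of movement-aligned followers on removed vs. still-active pages.
total_followers = REMOVED_FOLLOWERS + ACTIVE_FOLLOWERS
removed_followers_pct = share(REMOVED_FOLLOWERS, total_followers)
active_followers_pct = share(ACTIVE_FOLLOWERS, total_followers)

print(f"Pages and groups removed: {removed_pages} ({removed_pages_pct:.0f}%)")
print(f"Followers on removed pages: {removed_followers_pct:.0f}%")
print(f"Followers on active pages: {active_followers_pct:.0f}%")
```

Under these assumptions, removing more than half of the pages and groups still leaves the large majority of the movement-aligned following on pages that remain active, which is the point the figures above are making.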

How Facebook missed pages and groups that simply changed their names

Although Facebook

promised

to take more aggressive action against violent, conspiracy-fueled movements in September, our research shows it failed to enforce its expanded policies against movement pages and groups that had changed their names to avoid detection and accountability, including those associated with the

Three Percenters

movement - leaders and/or followers of which have been

charged

in relation to the Jan. 6 insurrection. For example, on September 3, 2020, Facebook page “III% Nation” (which identifies with the militia

Three Percenters movement

) changed its name to “

Molon Labe Nation

”. Molon Labe is

reportedly

an expression which translates to “come and take [them]” and is used by those associated with the Three Percenters movement. Under its previous name (“III% Nation”), the page

had a link

to an online store selling t-shirts with “III% Nation” imprints. Since the name change, the owners of the page have kept a

Teespring

account where they continue to sell t-shirts and mugs, but the imprints have changed from “III% Nation” to “Molon Labe Nation”.

To date, this page is still active

.

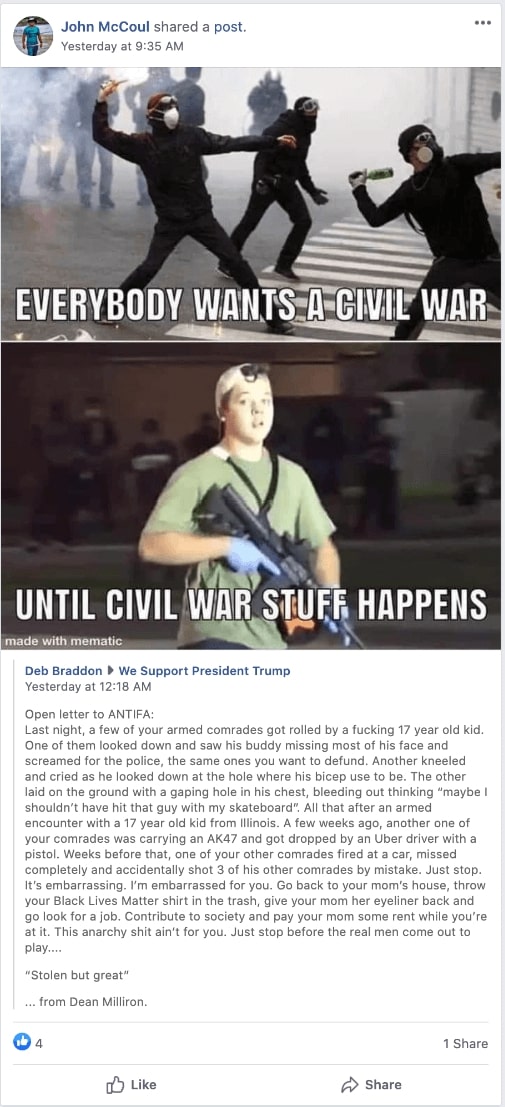

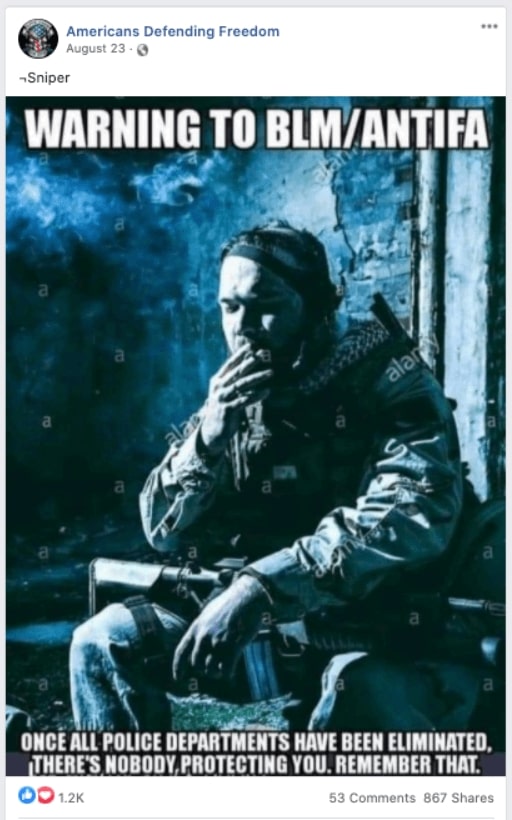

Among these pages and groups were a few particularly frightening posts, including calls for taking up arms and civil war, and content that glorified armed violence, expressed support or praise for a mass shooter, and/or made light of the suffering or deaths of victims of such violence, all of which violate

Facebook Community Standards.

Here are some examples:

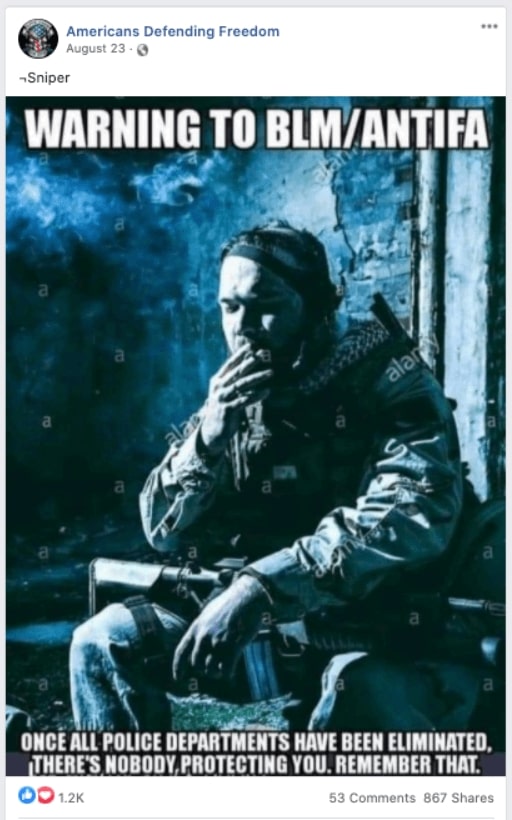

Shared to “Swamp Drainer Disciples: The Counter-Resistance”

Post calling for an armed revolt posted on a Boogaloo-aligned page19.

Post threatening violence against local national guards posted on a Boogaloo-aligned page.

Shared by “American’s Defending Freedom”

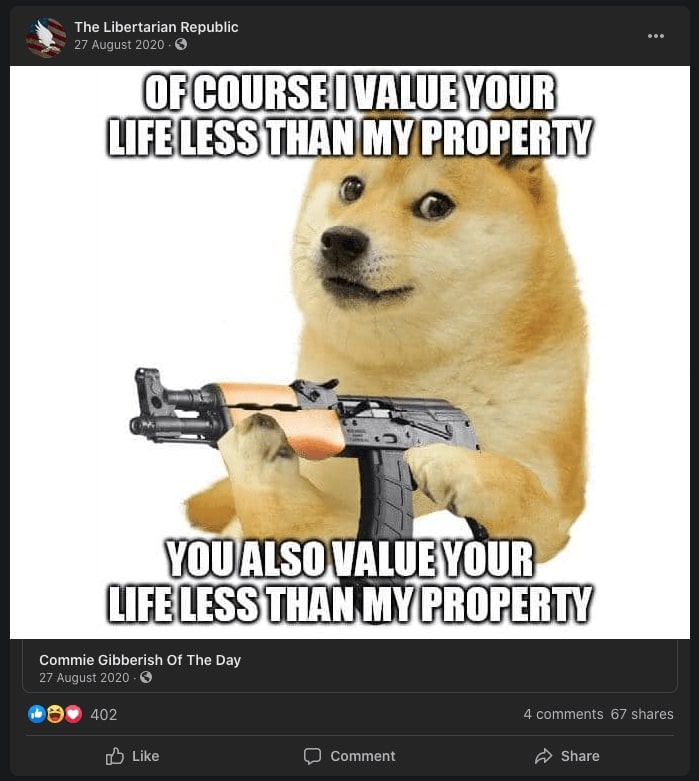

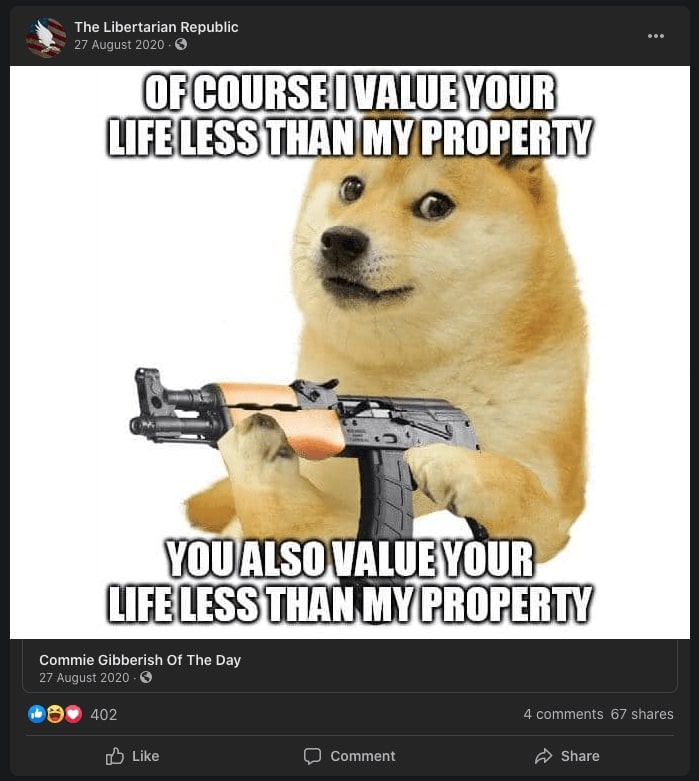

Shared by “The Libertarian Republic”

Had Facebook acted sooner, it could have prevented these pages and groups from exposing millions of users to dangerous conspiracy theories and content celebrating violence. Instead it allowed these groups to grow.

It is not as if Facebook was unaware of the problem. In 2020, internal documents

revealed

that executives were warned by their own data scientists that blatant misinformation and calls for violence plagued groups. In one presentation in August 2020, researchers said roughly “70% of the top 100 most active US Civic Groups are considered non-recommendable for issues such as hate, misinfo, bullying and harassment.” In 2016, Facebook researchers

found

that “64% of all extremist group joins are due to our recommendation tools”.

Facebook has allowed its platform to be used by malicious actors to spread and amplify such content, and that has created a rabbit hole for violent

radicalization.

Academic

research

shows that “ideological narrative provides the moral justifications rendering violence acceptable and even desirable against outgroup members”, demonstrating just how dangerous it is for content glorifying violence or praising mass shooters to spread without consequence on the world’s largest social media platform.

Facebook is a content accelerator, and the company’s slow reaction to this problem allows violent movements to grow and gain mainstream attention - by which stage any

action

is too little too late. For example, by the time Facebook

announced

and started to implement its ban on activity representing QAnon, the movement’s following on the platform had grown too large to be contained. As pages, groups, and content were removed under this expanded policy, users

migrated

some of their activity over to smaller, ideologically-aligned platforms to continue recruitment and mobilization, but many

remained

on the platform sharing content from their personal accounts or in private groups, which are harder to monitor.

This is significant given that, according to recent

reports

from the court records of those involved in the insurrection, “an abiding sense of loyalty to the fringe online conspiracy movement known as QAnon is emerging as a common thread among scores of the men and women from around the country arrested for their participation in the deadly U.S. Capitol insurrection”.

Facebook failed to prevent 162m estimated views on the top 100 most popular election misinformation posts/stories

Back in November 2019 - a year before the 2020 elections - Avaaz

raised

the alarm on the US 2020 vote, warning that it was at risk of becoming another “disinformation election”. Our investigation found that Facebook's political fake news problem had surged to over 158 million estimated views on the top 100 fact-checked, viral political fake-news stories on Facebook. This was over a period of 10 months in 2019 and was before the election year had even started.

We called on Facebook to urgently redouble its efforts to curb misinformation

.

However,

our investigation shows that, when comparing interactions to the year before, Facebook’s measures failed to reduce the spread of viral misinformation on the platform

.

Avaaz identified the top 100 false or misleading stories related to the 2020 elections, which we estimated were the most popular on Facebook

20

. All of these stories were debunked by fact-checkers working in partnership with Facebook.

According to our analysis, these top 100 stories alone were viewed an estimated 162 million times in a period of just three months,

showing a much higher rate of engagement on top misinformation stories than the year before, despite all of Facebook’s promises to act effectively against misinformation that is fact-checked by independent fact-checkers. Moreover, although Facebook claims to slow down the dissemination of fake news once fact-checked and labeled, this finding clearly shows that

its current policies are not sufficient to prevent false and misleading content from going viral and racking up millions of views.

In fact, to understand the scale and significance of the reach of those top 100 misleading stories, compare the estimated 162 million views of disinformation we found with the 159m people who

voted

in the election. Of course, individuals on Facebook can “view” a piece of content more than once, but what this number does suggest is that a significant subset of American voters who used Facebook were likely to have seen misinformation content.

It is also worth pointing out that the 162 million figure is just the tip of the iceberg, given that it relates only to the top 100 most popular stories. Also, we only analyzed content that was flagged by fact-checkers; we did not calculate numbers for content that slipped under the radar but was clearly false. In short, unless

Facebook is fully transparent with researchers, we will never know the full scale of misinformation on the platform

and how many users viewed it. Facebook does not report on how much misinformation pollutes the platform, and does not allow transparent and independent audits to be conducted.

Part of the problem, identified previously by Avaaz

research

published almost a year ago, is that Facebook can be slow to apply a fact-checking label after a claim has been fact-checked by one of its partners. However, we have also found that Facebook can even fail to add a label to fact-checked content at all. Of the top 100 stories we analyzed for this report, Avaaz found that 24% of the stories (24) had no warning labels to inform users of falsehoods, even though they had a fact-check provided by an organization working in partnership with Facebook. So while independent fact-checkers moved quickly to respond to the deluge of misinformation around the election, Facebook did not match these efforts by ensuring that fact-checks reach those who are exposed to the identified misinformation.

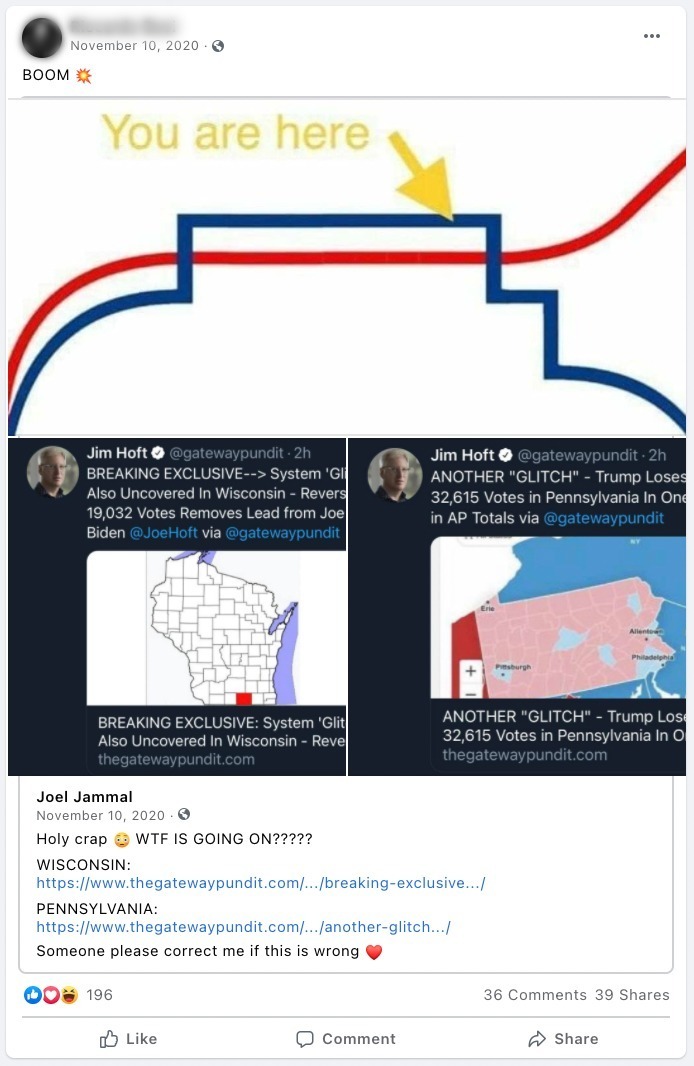

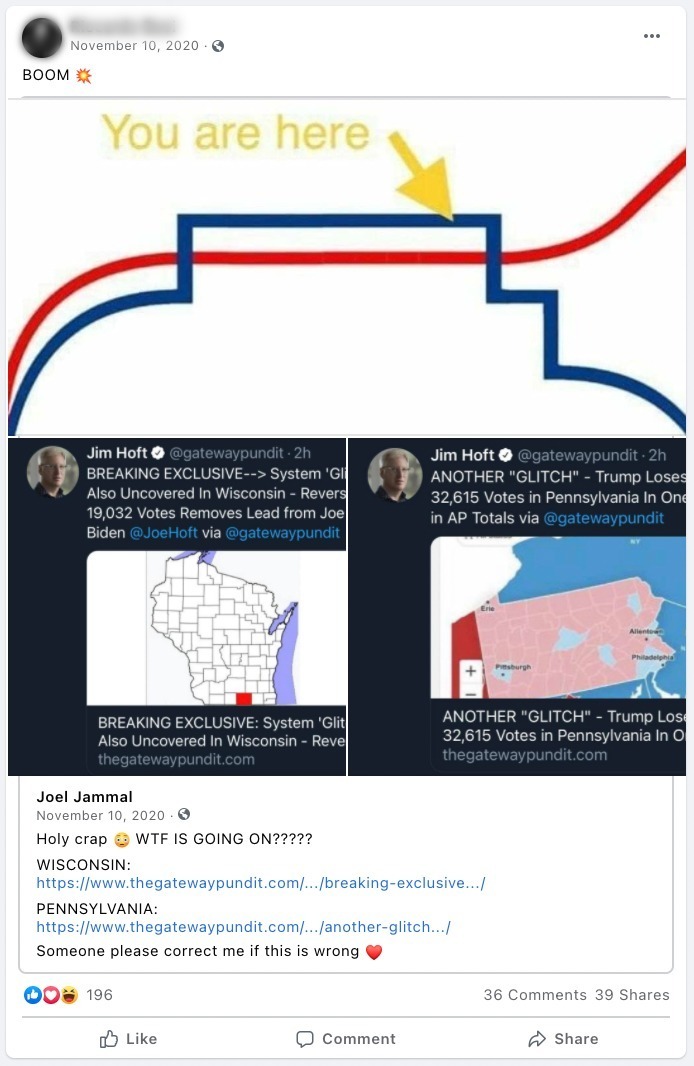

Unlabeled posts among those 100 stories included debunked claims surrounding voter fraud. The

post

below, which remains live on Facebook and unlabeled, includes the following claim: “System ‘Glitch’ Also Uncovered In Wisconsin – Reversal of Swapped Votes Removes Lead from Joe Biden”. The post links to a

Gateway Pundit article,

which alleges that it “identified approximately 10,000 votes that were moved from President Trump to Biden in just one Wisconsin County”. Despite the article and claim being

fact-checked

by USA Today (which found that “There was no glitch in the election system. And there’s no “gotcha” moment or evidence that will move votes over to Trump’s column. The presidential totals were transposed for several minutes because of a data entry error by The Associated Press, without any involvement by election officials.”), the post garnered 271 interactions, while the article has accumulated 82,611 total interactions on the platform.

An additional problem is that Facebook’s AI is not fit for purpose. As Avaaz

revealed

in October 2020, variations of misinformation already marked false or misleading by Facebook can easily slip through its detection system and rack up millions of views. Flaws in Facebook's fact-checking system mean that even simple tweaks to misinformation content are enough to help it escape being labeled by the platform. For example, if the original meme is marked as misinformation, it's often sufficient to choose a different background image, slightly change the post, crop it or write it out, to circumvent Facebook's detection systems.

However, according to our research, sometimes not even a minor change is required: while one post might receive a fact-checking label, an identical post might go undetected and so avoid being labeled.

Moreover, this research showed other nearly-identical misinformation posts continue to receive different treatments from Facebook. Below is an example of a story

debunked

by Lead Stories, which simultaneously appears on the platform in a

post

without any kind of label, in a

post

with an election information label, and in a

post

with a fact-check label.

These findings seriously undermine Facebook’s

recent claim

from May 2020 that its AI is already “able to recognize near-duplicate matches” and “apply warning labels”, regarding Covid-19 misinformation and exploitative content, noting that “for each piece of misinformation [a] fact-checker identifies, there may be thousands or millions of copies.”

Facebook’s detection systems need to be made much more sophisticated to prevent misinformation going viral.

Another worrying failing from Facebook on the issue of viral misinformation in this election concerns retroactive corrections. Even if fake stories slipped through cracks in its policies, the platform

could have taken action to ensure that everyone who saw these fake stories was shown retroactive corrections offering them factually correct information on the issue concerned

. This solution is called

‘Correct the Record’

, and Facebook could have started implementing it years ago. This would have prevented voters from being repeatedly primed with viral fake news that eroded trust in the democratic process.

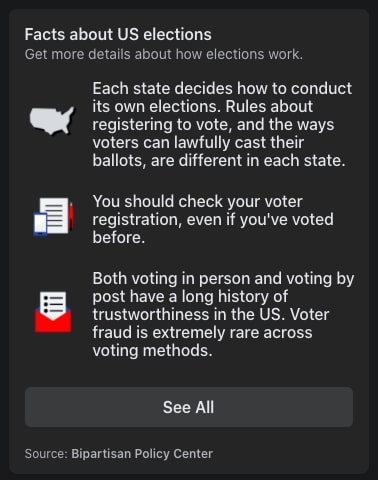

Again, Facebook very belatedly, and temporarily, applied a light version of corrections by including fact-checks about the elections in users’ news feeds (see screenshots below), but the platform only took this step in the days after the election, after months during which millions of Americans were flooded with election-fraud and other forms of misinformation.

Moreover, Facebook rolled back this policy a few weeks after it instituted it. The question is, again, why doesn’t Facebook commit to including fact-checks from independent fact-checkers that it partners with in the news feeds of users on the platform who have seen misinformation?

An independent study conducted by researchers at George Washington University and the Ohio State University, with support from Avaaz,

showed

that retroactive corrections can decrease the number of people who believe misinformation by up to 50%.

As a result of Facebook’s unwillingness to adopt this proactive solution,

the vast majority of the millions of US citizens exposed to misinformation will never know that they have been misled - and misinformers continue to be incentivized to make their content go viral as quickly as possible.

How Facebook can start getting serious about misinformation by expanding its COVID-19 misinformation policy

Back in April 2020, an

investigation

by Avaaz uncovered that millions of Facebook’s users were being exposed to coronavirus misinformation without any warning from the platform. On the heels of reporting these findings to Facebook, the company

decided

to take action by issuing retroactive alerts: "We’re going to start showing messages in News Feed to people who have liked, reacted or commented on harmful misinformation about COVID-19 that we have since removed. These messages will connect people to COVID-19 myths debunked by the WHO including ones we’ve removed from our platform for leading to imminent physical harm".

In December 2020 this was updated so that people: “Receive a notification that says we’ve removed a post they’ve interacted with for violating our policy against misinformation about COVID-19 that leads to imminent physical harm. Once they click on the notification, they will see a thumbnail of the post, and more information about where they saw it and how they engaged with it. They will also see why it was false and why we removed it (e.g. the post included the false claim that COVID-19 doesn’t exist)”.

The New York Times

reported

that Facebook was considering applying "Correct the Record" during the US elections. Facebook officials informed Avaaz that although they considered this solution, other projects such as Facebook’s Election Information Center took priority and the platform had to make “hard choices” about what solutions to focus on based on their assessed impact. Facebook employees who spoke to the New York Times, however, claimed that “Correct the Record” was: “vetoed by policy executives who feared it would disproportionately show notifications to people who shared false news from right-wing websites”.

Regardless of why Facebook did not implement “Correct the Record” during the US elections, the platform has an opportunity to implement this solution moving forward, and to do so in an effective way that curbs the spread of misinformation. Failing to take this action would be negligent. Misinformation about the vaccines, the protests in Burma, the upcoming elections in Ethiopia, and elsewhere remains rampant on the platform.

Nearly 100 million voters saw voter fraud content on Facebook, designed to discredit the election

Those who stormed the Capitol building on Jan. 6 had at least one belief in common: the false idea that the election was fraudulent and stolen from then-President Trump. While Trump was one of the highest profile and most powerful purveyors of this

falsehood

(on and offline), the rioters’ belief in this alternate reality was shaped and weaponized by more than just the former president and his allies. As in 2016, according to our research,

American voters were pummeled online every step of the 2020 election cycle, with viral false and misleading information about voter fraud and election

rigging designed to seed distrust in the election process and suppress voter turnout.

Facebook and its algorithm were among the lead culprits.

Despite the company’s

promise

to curb the spread of misinformation ahead of the election, misinformation flooded millions of voters’ News Feeds daily, often unchecked.

According to

polling

commissioned by Avaaz in the run-up to Election Day, 44% of registered voters, which approximates to

91 million registered voters in the US, reported seeing misinformation about mail-in voter fraud on Facebook, and 35%, which approximates to 72 million registered voters in the US, believed it.

It is important to note that polls relying on self-reporting often exhibit bias, and hence these numbers come with significant uncertainty.

However, even with this uncertainty, the magnitude of these findings strongly suggests that Facebook played a role in connecting millions of Americans to misinformation about voter fraud. The best way to assess this would be to conduct an independent audit of Facebook, investigating the platform’s role by requesting access to key pieces of data not available to the public. This can be done while also respecting users’ privacy rights.

In addition, our research provided further evidence of Facebook’s role in accelerating voter-fraud misinformation. As

reported

in the New York Times, we found that in a four-week period starting in mid-October 2020,

Donald Trump and 25 other repeat misinformers, whom the New York Times termed “superspreaders”

, including

Eric Trump,

Diamond & Silk,

and

Brandon Straka,

accounted for 28.6 percent of the interactions people had with such content overall on Facebook

. The influencers making up that “superspreader” list posted various false claims: some said that

dead people voted

, others that

voting machines had technical glitches,

or that

mail-in ballots were not correctly counted.

This created fertile ground for conspiracy movements such as “Stop the Steal” to grow, both on and offline. On November 4, 2020, the day after Election Day, public and private Facebook “

Stop the Steal

” group membership proliferated by the hour.

The largest group “quickly became one of the

fastest-growing

in the platform's history,” adding more than 300,000 members by the morning of November 5th

. Shortly thereafter, after members issued calls to violence, this same group was

removed

by Facebook.

Despite the group’s removal and the company’s promise to Avaaz to investigate “Stop the Steal” activity further, we found approximately 1.5 million interactions on posts using the hashtag #StoptheSteal to spread election fraud claims, which we estimate received 30 million views during the week after the 2020 election (November 3 to November 10).

And on November 12, just days after the presidential election was called for Biden, we found that 98 public and private “Stop the Steal” groups still remained on the platform with a combined membership of over 519,000. Forty-six of the public groups had garnered over 20.7 million estimated views (over 828,000 interactions) between November 5 and November 12.

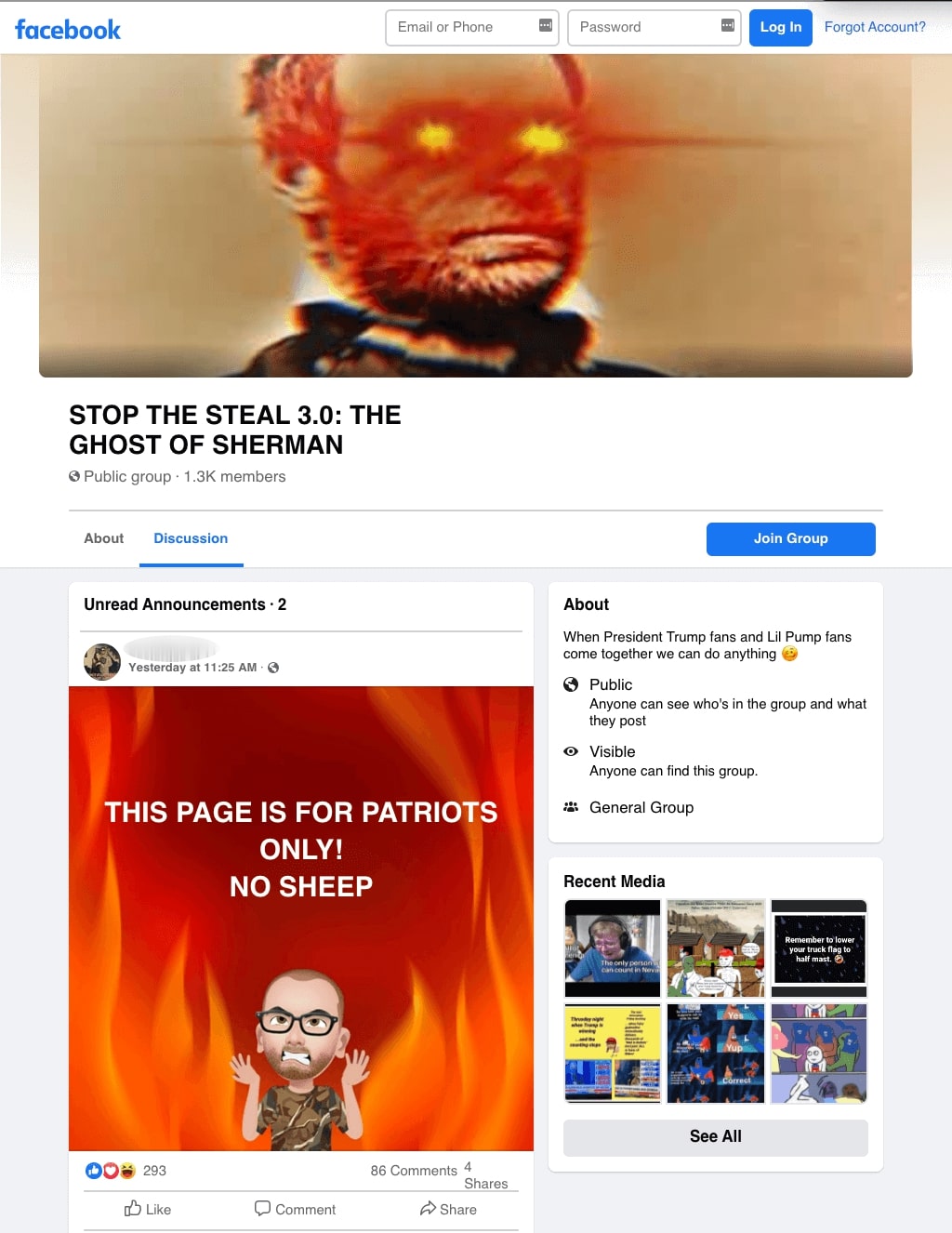

This group called Stop the Steal 3.0: The Ghost of Sherman had over 1,300 members before being closed down. Dozens of similar groups had popped up across the country.

It took the deadly Capitol riots on Jan. 6 for Facebook to

announce

a ban on “Stop the Steal” content across the platform once and for all. As our

research,

which was reported on by CNN, highlighted:

this ban didn’t address the wider universe of groups and content that sought to undermine the legitimacy of the election

- and didn’t account for groups that had been renamed (from “Stop the Steal”) to avoid detection and accountability. This included one group called “

Own Your Vote

”, which we identified as being administered by

Steve Bannon’s

Facebook page

21

.

Facebook’s piecemeal actions came too late to prevent millions of Americans from believing that voter fraud derailed the legitimacy of this election, a misinformation narrative that was being seeded on the platform for months

. According to a

poll

from YouGov and The Economist conducted between 8 and 10 November among registered voters, 82 percent of Republicans said they did not believe that Biden had legitimately won the election, even though he had. And even after the election results were

certified

by Congress, polls continued to show voter fraud misinformation’s firm grip on many American voters. One

poll

released on January 25, 2021 from Monmouth showed that one in three Americans believe that Biden won because of voter fraud.

It is too late for Facebook to repair the damage

it has done in helping to spread the misinformation that has led to these sorts of polling numbers, and the widespread belief in claims of voter fraud that have polarized the country and cut into the legitimacy of President Biden in the eyes of millions of Americans.

However, the platform can and must now proactively adopt policies such as “Detox the Algorithm” and “Correct the Record” that will prevent future dangerous misinformation narratives from taking hold in a similar way. Instead of moving in this direction, the platform has largely rolled back many of its policies, again putting Americans at risk.
