Methodology and Data Set

The investigative team analysed misinformation content about the coronavirus posted between December 7, 2020, and February 7, 2021, that met the following criteria:

-

Were fact checked by Facebook’s third-party fact-checking partners or other reputable fact-checking organisations.

25

26

-

Were rated “false” or “misleading,” or any rating falling within these categories, according to the tags used by the fact-checking organisations in their fact-check article.

-

The variation of ratings description is quite broad. Here are some examples (please contact us to see the full list we used):

-

Disinformation - Factually Inaccurate - Cherry-Picking - False - Mostly False - Hoax - Incorrect - Misleading - No Evidence - Not True - Wrong

-

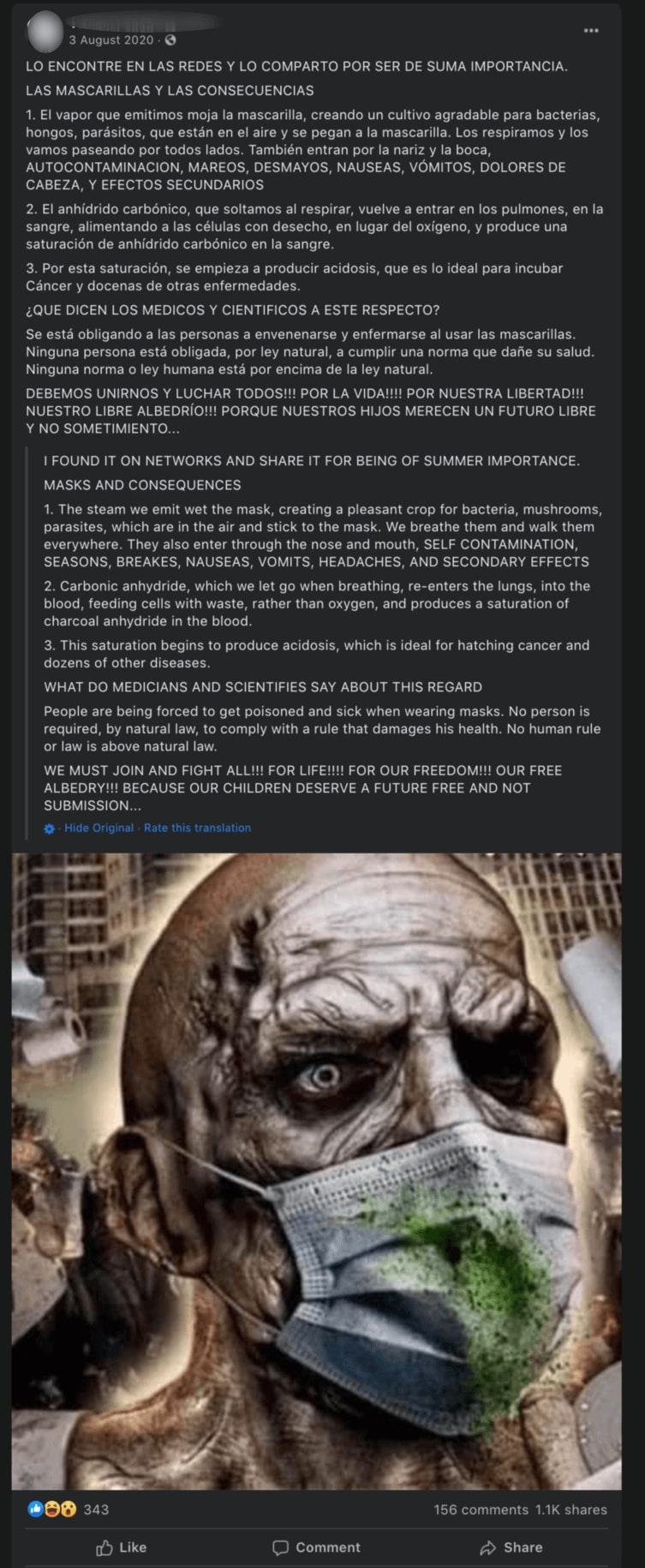

Could cause public harm by undermining public health. Avaaz has included content that impacts public health in the areas of:

-

Preventing disease

: e.g., false information on diseases, epidemics and pandemics and anti-vaccination misinformation.

-

Prolonging life and promoting health

: e.g., bogus cures and/or encouragement to discontinue recognised medical treatments.

-

Creating distrust in health institutions, health organisations, medical practice and their recommendations

: e.g., false information implying that clinicians or governments are creating or hiding health risks.

-

Fearmongering

: health-related misinformation that can induce fear and panic, e.g., misinformation stating that the coronavirus is a human-made bio-weapon being used against certain communities or that Chinese products may contain the virus.
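The two mechanical criteria above (publication window and fact-check rating) can be expressed as a simple filter. What follows is a minimal sketch, not the investigative team's actual tooling. The `CandidatePost` record, the `QUALIFYING_RATINGS` set and the `meets_mechanical_criteria` helper are hypothetical names introduced for illustration. The rating set contains only the example tags quoted above, not the full list, and treating both ends of the date window as inclusive is an assumption. The public-harm criterion requires human judgement and is deliberately left out.

```python
from dataclasses import dataclass
from datetime import date

# Study window from the methodology; treating both ends as inclusive is an assumption.
WINDOW_START = date(2020, 12, 7)
WINDOW_END = date(2021, 2, 7)

# Example rating tags quoted in the report (not the full list the team used).
QUALIFYING_RATINGS = {
    "disinformation", "factually inaccurate", "cherry-picking", "false",
    "mostly false", "hoax", "incorrect", "misleading", "no evidence",
    "not true", "wrong",
}


@dataclass
class CandidatePost:
    """Hypothetical record for one fact-checked post."""
    posted_on: date
    rating: str  # tag taken from the fact-check article


def meets_mechanical_criteria(post: CandidatePost) -> bool:
    """Check the date window and rating tag; public-harm review stays manual."""
    in_window = WINDOW_START <= post.posted_on <= WINDOW_END
    rated_false = post.rating.strip().lower() in QUALIFYING_RATINGS
    return in_window and rated_false
```

A post passing this filter would still need a researcher to confirm that it could cause public harm in one of the four areas listed above.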

Methodology for measuring Facebook labelling and removals (Figures 1, 2, 3, 4, 7)

To assess Facebook’s stated claims about its fact-checking efforts, the investigative team analysed a sample of 135 posts about the coronavirus, selected using the above criteria, that were comparable to the sample analysed in our 2020 study. To allow a precise comparison with that study on coronavirus-related misinformation, we selected only content published in the five languages covered in our 2020 report [27]: English, Italian, Spanish, French and Portuguese.

For each of the false and misleading posts and stories sampled based on the above criteria, Avaaz researchers recorded and analysed, using both direct observation and CrowdTangle

28

:

-

The total number of interactions it received;

-

The total number of views it received in the case of Facebook videos;

-

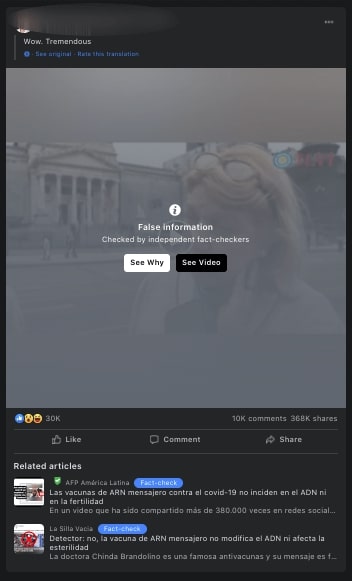

Whether each had a warning label as false or misleading

29

added to it by Facebook

30

;

-

When misinformation posts would receive a fact-check warning label or be removed

31

; and

-

The delay between when the misinformation content was posted and the publication of a fact check by a reputable fact-checking organisation

32

;
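The items recorded for each post map naturally onto a small per-post record. The sketch below is illustrative only: the `PostRecord` fields and both helper functions are hypothetical and do not reflect the format in which the team actually stored its observations. It shows how the fact-check delay and the share of posts left without any action could be derived from such records.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class PostRecord:
    """Hypothetical per-post record mirroring the items listed above."""
    interactions: int
    posted_on: date
    fact_check_published_on: date
    labelled: bool = False
    removed: bool = False
    video_views: Optional[int] = None  # only set for Facebook videos


def fact_check_delay_days(record: PostRecord) -> int:
    """Days between posting and publication of the fact check."""
    return (record.fact_check_published_on - record.posted_on).days


def share_without_action(records: List[PostRecord]) -> float:
    """Fraction of records that were neither labelled nor removed."""
    if not records:
        raise ValueError("no records to summarise")
    unactioned = sum(1 for r in records if not (r.labelled or r.removed))
    return unactioned / len(records)
```

Keeping `labelled` and `removed` as separate flags lets the two outcomes be reported separately or together, depending on the question being asked.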

Methodology for measuring labelling and removal delays (Figures 5 and 6)

To measure the delay between when the original misinformation content was posted and the date Facebook first applied its moderation policies to the post, either by flagging the content as misinformation or by removing it from the platform, our team analysed a data set of 26 posts. These were all the posts for which our team, checking them daily for 43 days, was able to manually document when a label was first applied or the post was removed.
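The delay described here can be derived from a log of daily checks. The following is a rough sketch under stated assumptions: the status strings (`"labelled"`, `"removed"`, `"unlabelled"`) and the function names are hypothetical, and the daily log is modelled as a plain dictionary keyed by the date of each check.

```python
from datetime import date
from typing import Dict, Optional

# Hypothetical status values marking a moderation action.
ACTIONED = {"labelled", "removed"}


def first_action_seen(daily_status: Dict[date, str]) -> Optional[date]:
    """Earliest check date on which a label or removal was observed."""
    actioned_days = [day for day, status in daily_status.items() if status in ACTIONED]
    return min(actioned_days) if actioned_days else None


def moderation_delay_days(posted_on: date, daily_status: Dict[date, str]) -> Optional[int]:
    """Days from posting to the first observed action, or None if none was seen."""
    first_seen = first_action_seen(daily_status)
    if first_seen is None:
        return None
    return (first_seen - posted_on).days
```

Because the checks are daily, the result is the first day on which an action was observed. The action itself may have been applied at any point since the previous check, so the figure has a resolution of about one day.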

To collect a significant sample, given the difficulty of recording the exact day on which a measure is applied, we considered posts in German as well as in English, Italian, Spanish, French and Portuguese.

In our previous discussions with Facebook, we had requested that the platform provide more access to its systems or provide transparency on both the average time between when misinformation is posted and when a fact-check label is applied or the content is removed, and the number of users who view misinformation content before it is labelled. These data points are important as a means of analysing the platform’s effectiveness in combating misinformation. We continue to urge Facebook to be more transparent about these numbers more broadly, as it is difficult for researchers to continue to conduct such an analysis manually.

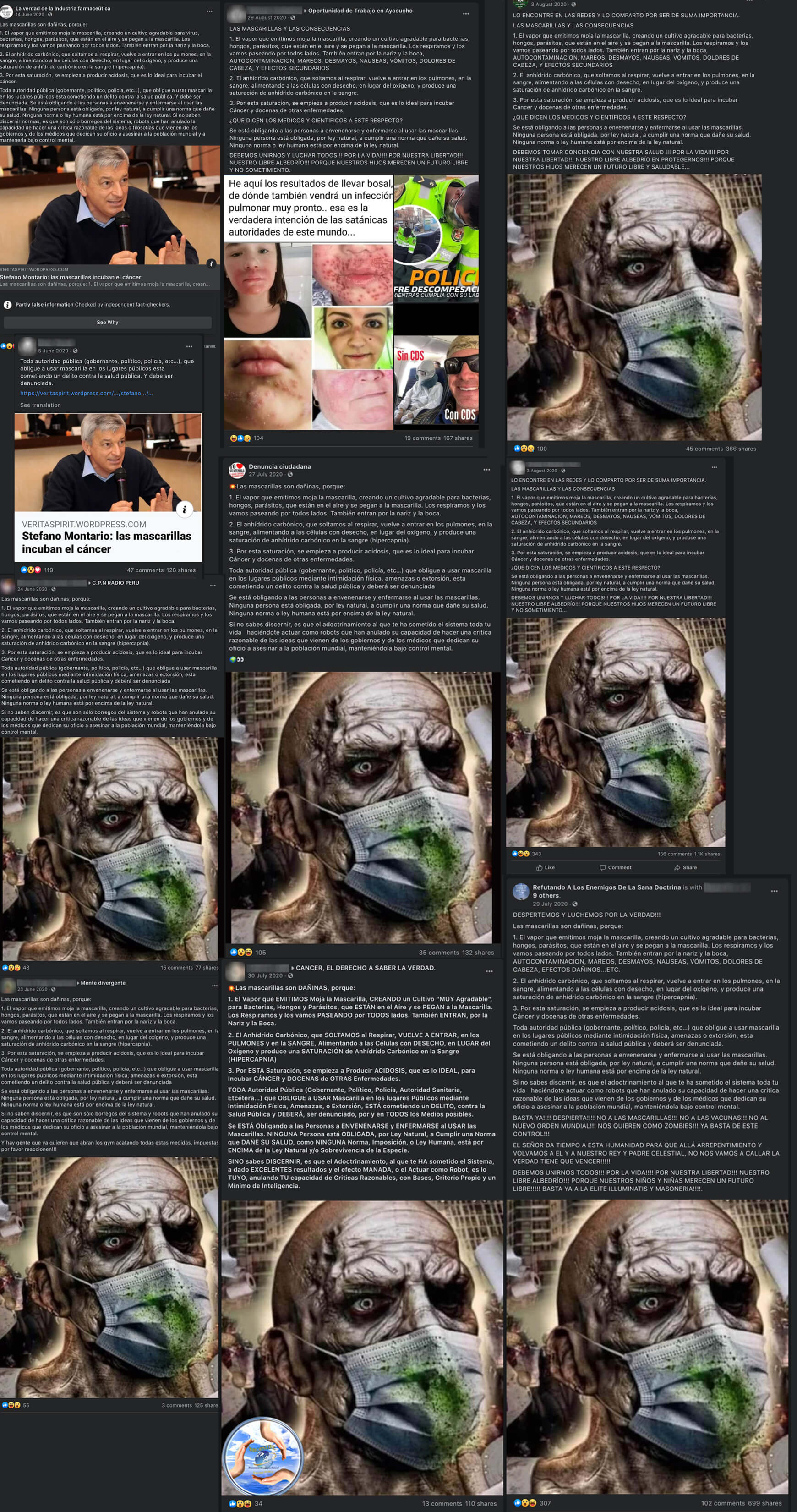

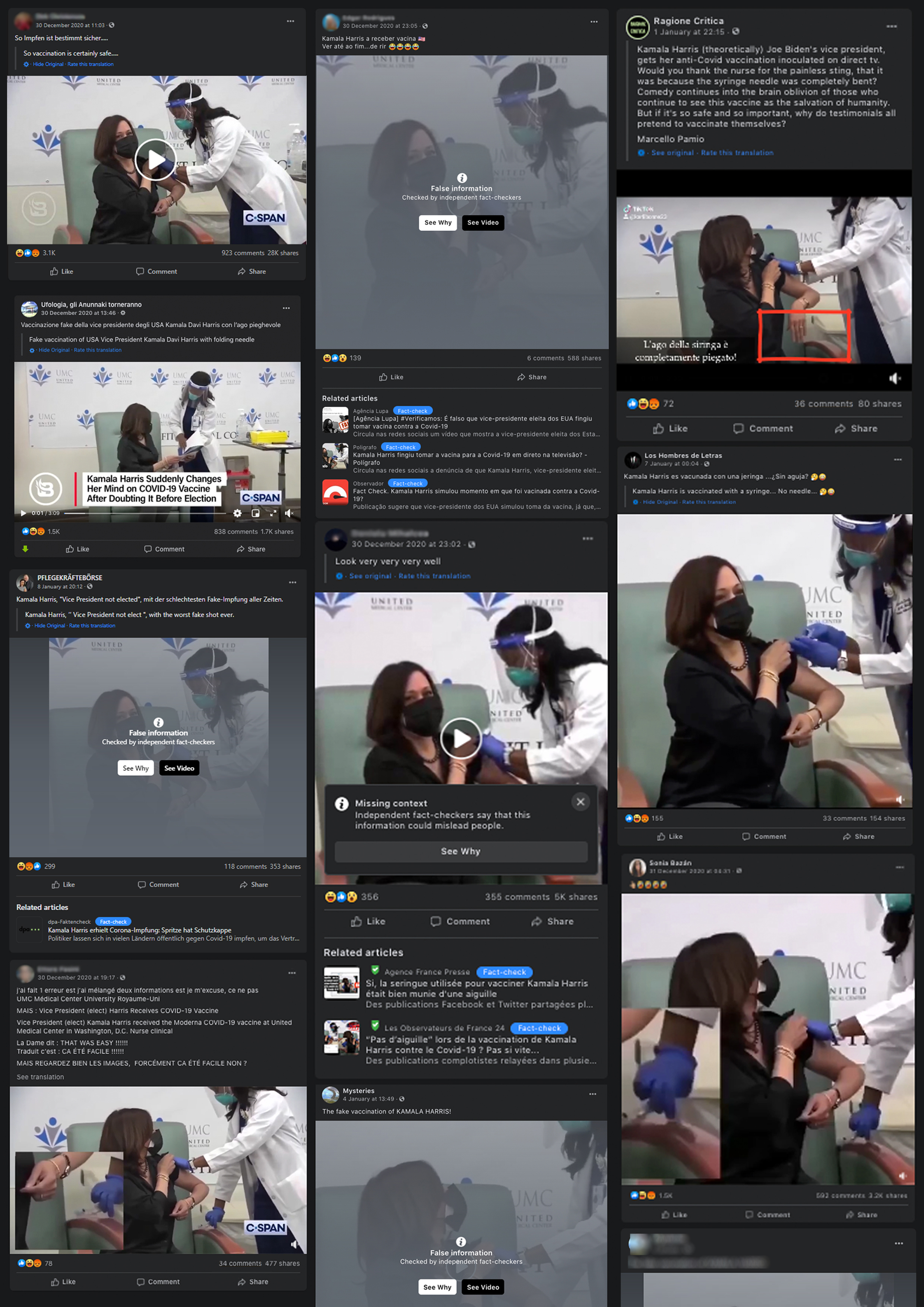

Methodology for identifying “clones” and “variants”

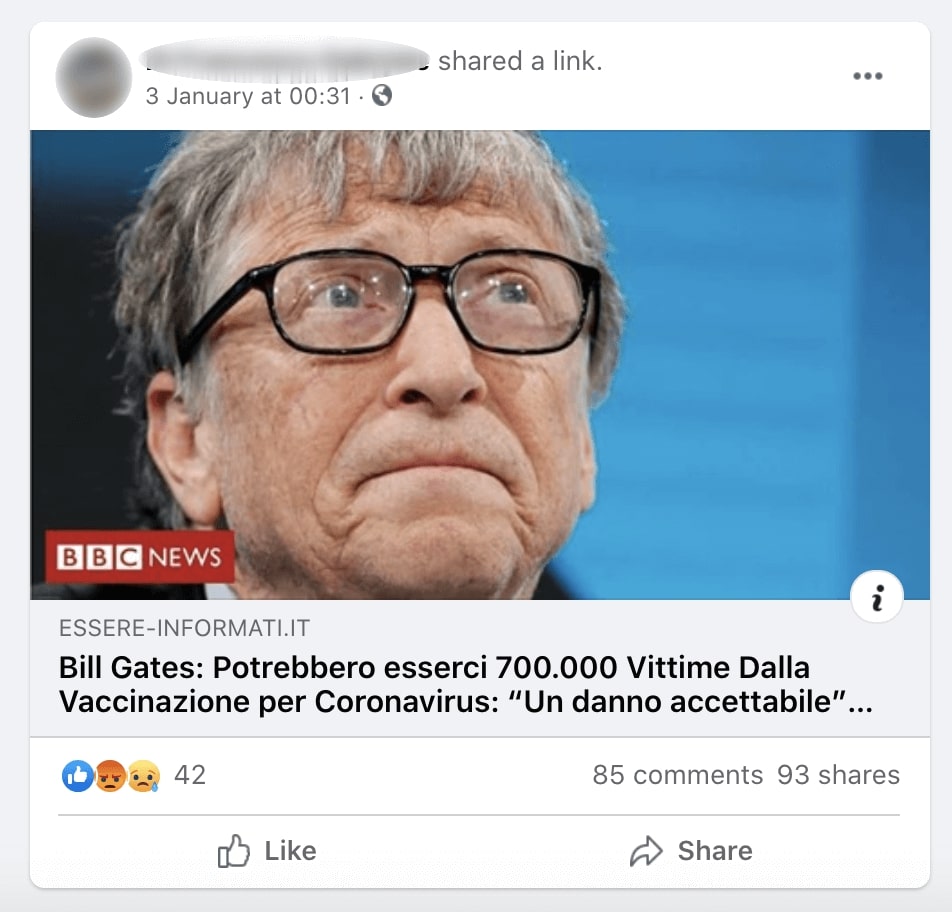

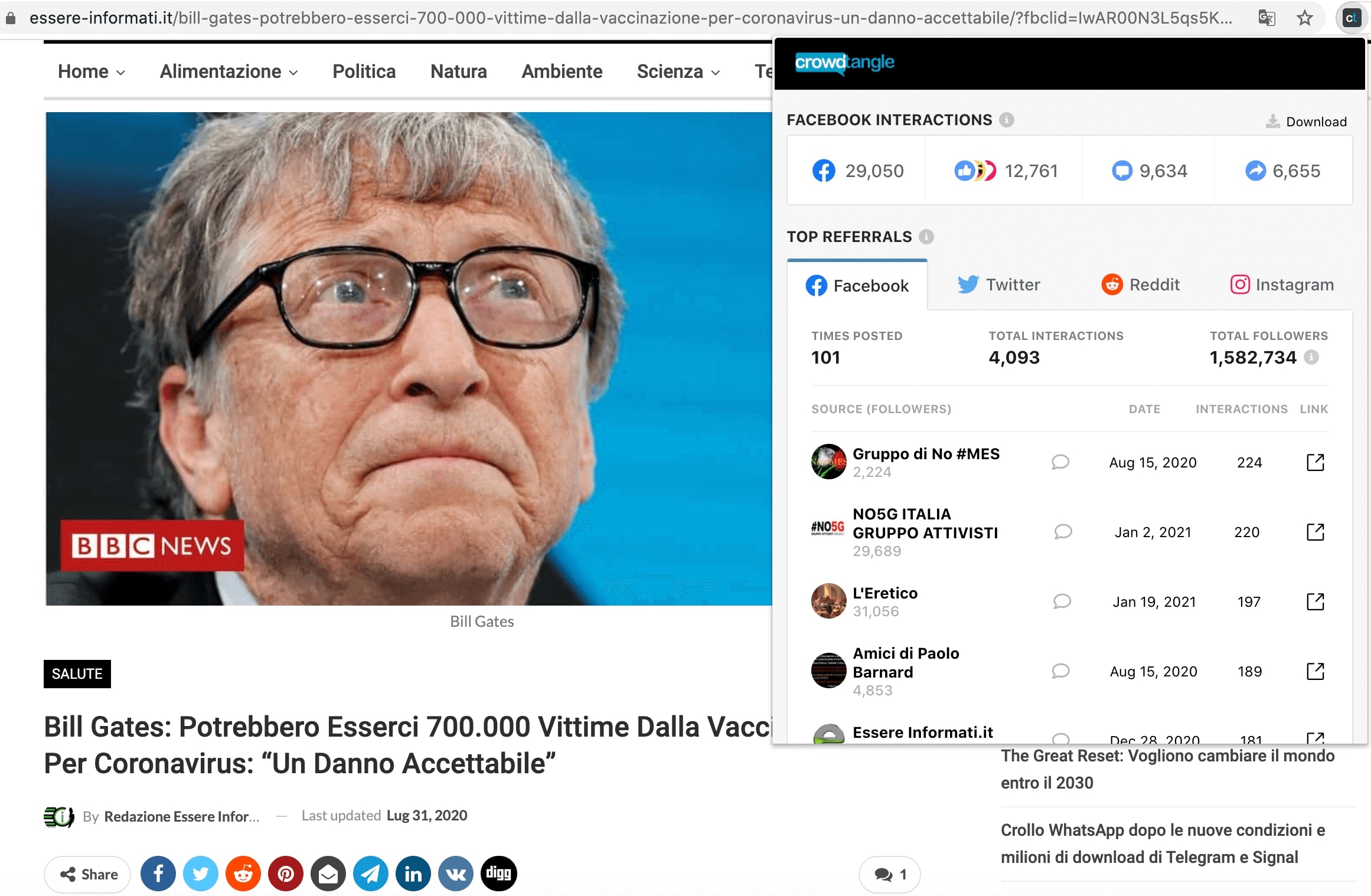

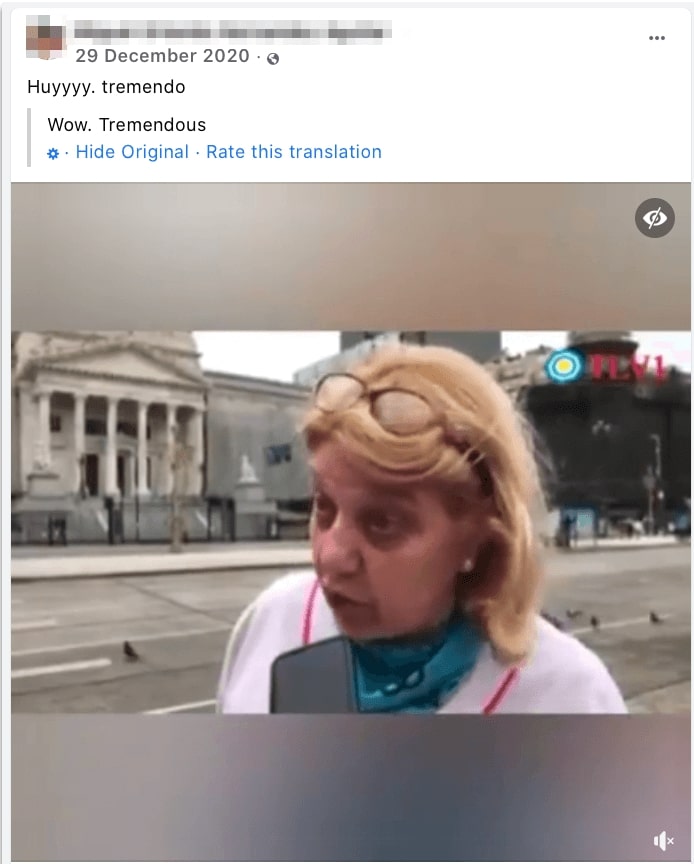

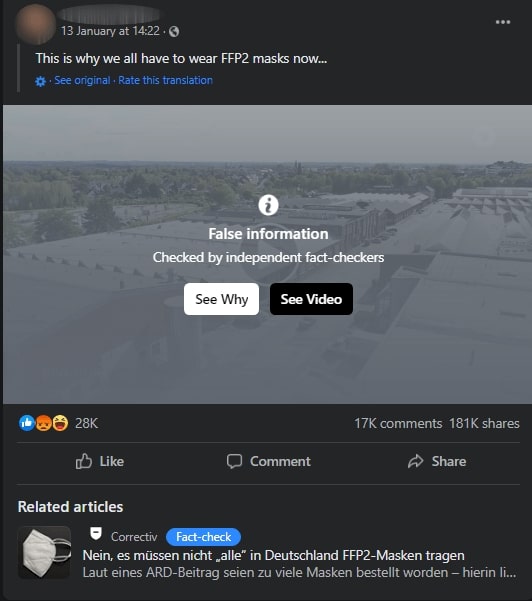

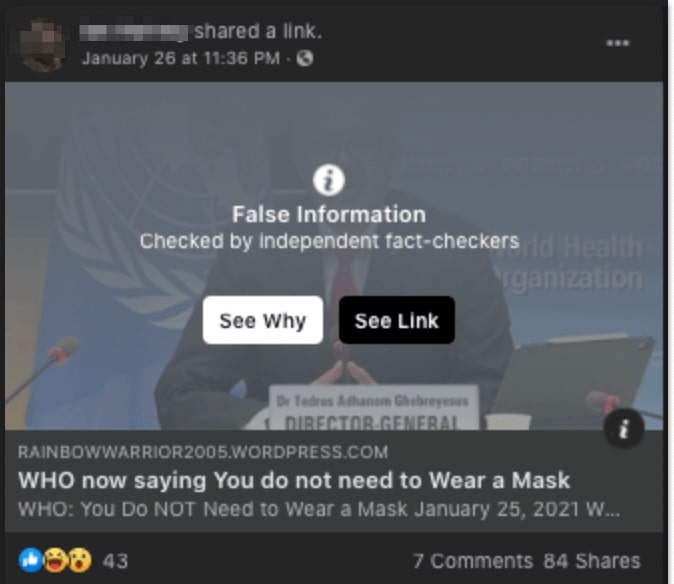

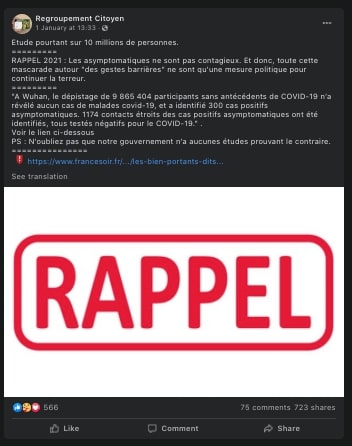

During the research process, our investigative team noticed that previously documented posts were spreading in different languages in an exact, or slightly altered, form, and were collecting a large number of interactions. Our team further investigated seven narratives from our sample of 135 posts, to conduct dedicated research into the spread of such "clones" and "variants".

We used CrowdTangle [33] to search for text from the original post we had documented, in order to identify public shares of the same content, or variations of it, by Facebook pages, public groups or verified profiles.

We included a post only when we were able to document at least one occurrence that had been labelled by Facebook and could also find “clones” or “variants” of the same example that had not been labelled.
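The distinction between exact and slightly altered copies, and the inclusion rule above, can be illustrated with a short sketch. This is not the team's method, which relied on CrowdTangle text searches and manual review. It swaps in generic string similarity from Python's standard `difflib` module. The 0.8 threshold, the normalisation step and all function names are assumptions made for illustration.

```python
from difflib import SequenceMatcher
from typing import List

VARIANT_THRESHOLD = 0.8  # assumed cut-off, not a value from the report


def normalise(text: str) -> str:
    """Lower-case the text and collapse runs of whitespace."""
    return " ".join(text.lower().split())


def classify_copy(original: str, candidate: str) -> str:
    """Label a candidate as 'clone', 'variant' or 'unrelated' to the original."""
    a, b = normalise(original), normalise(candidate)
    if a == b:
        return "clone"
    similarity = SequenceMatcher(None, a, b).ratio()
    return "variant" if similarity >= VARIANT_THRESHOLD else "unrelated"


def narrative_qualifies(labelled_flags: List[bool]) -> bool:
    """True when at least one copy was labelled and at least one was not."""
    return any(labelled_flags) and not all(labelled_flags)
```

Character-level similarity of this kind only works within one language. Copies translated into other languages, which the team also documented, would need to be matched by a human reviewer or a translation step.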

Using this methodology, our team identified a total of 51 posts. The engagement data we estimate for our sample provides some indication of the relative reach of different claims.

General note on methodology

It is important to note that, while we collect data and compute numbers to the best of our ability, this analysis is not exhaustive as we looked only at a sample of fact-checked misinformation posts in five languages. Moreover, this research is made significantly more challenging because Facebook does not provide investigators with access to the data needed to measure the total response rate, moderation speed, number of fact checks and the number of users who have seen or been targeted with misinformation.

Nonetheless, Facebook is becoming more cooperative with civil society organisations, and we hope the platform continues this positive trend. We also recognise the hard work of Facebook employees across different sub-teams, who have done their best to push the company to fix the platform’s misinformation problem. This report is not an indictment of their personal efforts, but rather highlights the need for much more proactive decisions and solutions implemented by the highest levels of executive power in the company.

This study achieved its purpose by taking a small step towards a better understanding of the scale and scope of the COVID-19 misinformation infodemic on Facebook.

Cooperation across fields, sectors and disciplines is needed more than ever to fight disinformation and misinformation. All social media platforms must become more transparent with their users and with researchers to ensure that the scale of this problem is measured effectively and to help public health officials respond in a more effectual and proportional manner to both the pandemic and the infodemic.

A list of the pieces of misinformation content referenced in this report can be found in the annex.

It is important to note that although fact checks from reputable fact-checking organisations provide a reliable way to identify misinformation content, researchers and fact checkers have a limited window into misinformation spreading in private Facebook groups, on private Facebook profiles and via Facebook messenger.

Similarly, engagement data for Facebook posts analysed in this study are only indicative of wider engagement with, and exposure to, misinformation. Consequently, the findings in this report are likely conservative estimates.

For more information and interviews:

More information about Avaaz’s disinformation work:

Avaaz is a global democratic movement with more than 66 million members around the world. All funds powering the organisation come from small donations from individual members.

This report is part of an ongoing Avaaz campaign to protect people and democracies from the dangers of disinformation and misinformation on social media. As part of that effort, Avaaz investigations have shed light on how Facebook was a significant catalyst in creating the conditions that swept America down the dark path from election to insurrection; how Facebook’s AI failed American voters ahead of Election Day in October 2020; exposed Facebook's algorithms as a major threat to public health in August 2020; investigated the US-based anti-racism protests where divisive disinformation narratives went viral on Facebook in June 2020; revealed a disinformation network with half a billion views ahead of the European Union elections in 2019; prompted Facebook to take down a network reaching 1.7M people in Spain days before the 2019 national election; released a report on the fake news reaching millions that fuelled the Yellow Vests crisis in France; exposed a massive disinformation network during the Brazil presidential elections in 2018; revealed the role anti-vaccination misinformation is having on reducing the vaccine rate in Brazil; and released a report on how YouTube was driving millions of people to watch climate misinformation videos.

Avaaz’s work on disinformation is rooted in the firm belief that fake news proliferating on social media poses a grave threat to democracy, the health and well-being of communities, and the security of vulnerable people. Avaaz reports openly on its disinformation research so it can alert and educate social media platforms, regulators and the public, and help society advance smart solutions to defend the integrity of our elections and our democracies. You can find our reports and learn more about our work by visiting: https://secure.avaaz.org/campaign/en/disinfo_hub/
